20 – 27 February 2026

HIGHLIGHT OF THE WEEK

AI Summit in Geneva: Ten ways Switzerland can contribute to AI and humanity

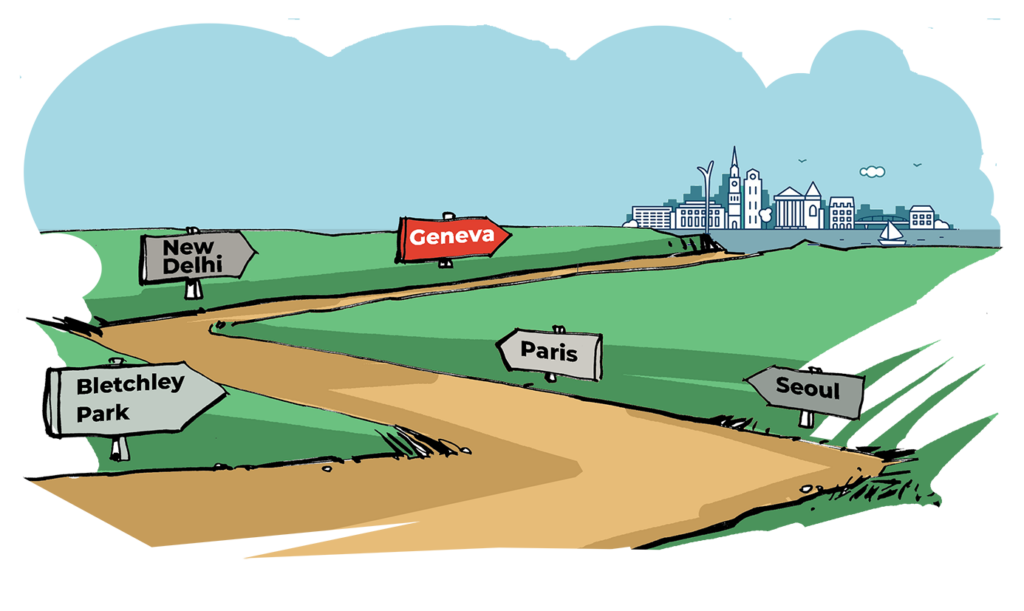

In 2027, Geneva will host the next global AI Summit, arriving at a moment when governments, businesses, and communities worldwide are deep into AI-driven transformation.

Previous hosts brought their own distinctiveness: from Bletchley Park’s focus on existential risk to Seoul’s innovation-security balance, Paris’s economic and societal lens, and New Delhi’s emphasis on development and inclusion. Switzerland now has an opportunity to shape the next phase of AI governance and ensure that the 2027 AI Summit is more than just an event.

We suggest ten signposts on the Road to the 2027 Geneva AI Summit, supported by DiploAI research, training, and policy monitoring via the Digital Watch Observatory.

Innovation. AI is fundamentally about innovation, both technological and, increasingly, societal. The next wave of innovation will involve activating the knowledge of citizens and institutions through data labelling, embedding reinforcement learning into pedagogical practices, and developing knowledge graphs. Switzerland has long ranked among the world’s top innovators, favouring grounded, low-hype developments that address real needs and unexplored niches.

Governance. Existing international governance frameworks are likely to shape AI policy in Geneva, given the city’s concentration of organisations spanning trade, health, telecommunications, labour, and security. The new International Scientific Panel on AI can draw lessons from the Geneva-based IPCC’s experience at the science–diplomacy interface. Switzerland’s bottom-up policymaking model supports citizen inclusion in AI debates, while its cautious, gap-based regulatory tradition aligns with emerging calls for pragmatic, proportionate AI governance.

Subsidiarity. The principle of subsidiarity, central to Swiss societal organisation, holds that decision-making should occur as close as possible to the citizens and communities concerned. Applied to AI, this approach would counter the concentration of power in a few major platforms by rooting AI development in the local communities where knowledge is created through everyday interactions.

EspriTech. AI is prompting renewed reflection on fundamental questions of humanity, free will, and ethics, leading societies to revisit their cultural, religious, and philosophical foundations. Drawing on EspriTech and the intellectual legacy of Geneva’s thinkers can help fine-tune the debate on AI and humanity.

Trust. Switzerland, as a country with high trust capital, can foster a ‘trust but verify’ approach ahead of the 2027 Summit. Trust can be rebuilt through a fully informed and realistic discussion of AI risks, which have gradually recalibrated from 2023’s focus on existential risks (survival of humanity) to the current primacy of existing risks (education, disinformation, jobs) and growing concerns about exclusion risks (monopolisation of AI by a few actors).

Apprenticeship. The AI apprenticeship model, inspired by the Swiss tradition of learning by doing with mentorship, is emerging as an effective way to train in AI. Ahead of the 2027 Geneva AI Impact Summit, it can strengthen the AI knowledge and capacities of diplomats, civil society, and local communities.

Humanity. The 2027 AI Summit needs to give concrete meaning to the call for AI to serve humanity’s core interests. Switzerland has been ‘walking the talk’ of human-centred society in politics, education, social care, and the economy. This centuries-long experience can help fine-tune the critical connections between AI and human civilisation by both sharing some lessons learned and experimenting with new approaches and practices for the AI era.

Institutions. Institutions are an important carrier of societal memory and knowledge. AI should be considered a creative change agent that can, among other things, preserve institutional memory and strengthen the capacity to respond to societal needs.

Multilateralism. The AI Summit can help clarify the purpose of international organisations in the AI era. AI can, for example, help foster a new level of legitimacy for international processes by ensuring that contributions to public consultations are properly traced and reflected in policy documents.

Sovereignty. As geopolitical tensions rise, questions of AI and digital sovereignty are becoming more relevant. They highlight the need for agency, self-determination, and responsible management of the knowledge that drives AI, rather than isolation. Ahead of the 2027 Summit, Swiss experience can support more informed discussions on the technical, legal, and knowledge dimensions of AI sovereignty within an interdependent framework.

Read the full text at our dedicated web page.

IN OTHER NEWS LAST WEEK

This week in AI governance

The EU. The European Commission has confirmed it will again delay publishing guidance on high-risk AI systems under the EU AI Act. The guidelines were due by 2 February 2026, but will now follow a revised timeline. The delay marks the second missed deadline and adds to broader implementation setbacks surrounding the EU AI Act.

The USA. The White House has proposed a ratepayer protection pledge, encouraging major technology companies — including Microsoft, Amazon, Meta, and Anthropic — to cover the additional electricity costs associated with their AI infrastructure and, in some cases, to invest in dedicated energy generation rather than relying solely on the public grid. A meeting at the White House in March is expected to formalise commitments from companies, many of which have already signalled support or made voluntary pledges.

DPAs. Data protection authorities from 61 jurisdictions and the European Data Protection Supervisor (EDPS) issued a joint statement warning about AI tools that generate realistic images of identifiable individuals without consent. They raised concerns about privacy, dignity, and child safety, noting that such technologies—often embedded in social media—enable non-consensual intimate imagery and other harmful content. Authorities stressed that AI systems must comply with data protection laws and that certain uses may constitute criminal offences. Organisations were urged to implement safeguards, ensure transparency, enable swift content removal, and engage proactively with regulators to protect fundamental rights.

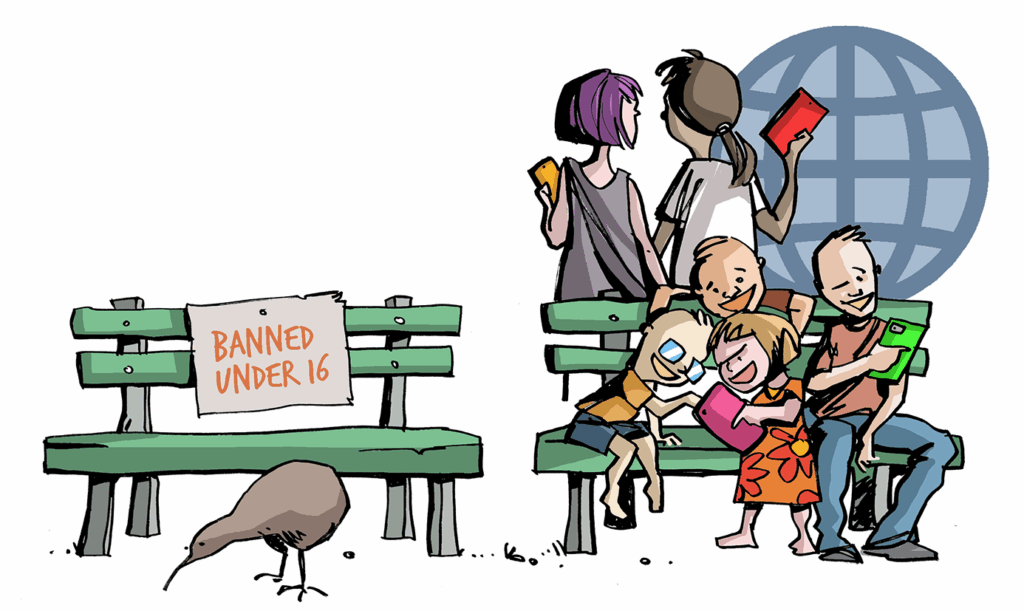

Child safety online: The backlash against companies continues

In Brazil, a new legislative proposal—Bill No. 730/2026—has been introduced to the Chamber of Deputies. The proposal mandates age-verification mechanisms aligned with Brazil’s data protection law (Law No. 13.709/2018), prioritising data minimisation, pseudonymisation, and limited retention. It also bans direct or indirect monetisation involving children under 14 and requires prior judicial authorisation for remunerated artistic work by adolescents aged 14–18, subject to safeguards. If adopted, the bill would formalise baseline compliance requirements for platforms operating in the Brazilian market.

Türkiye’s Personal Data Protection Authority has opened a new review into how TikTok, Instagram, Facebook, YouTube, X and Discord process children’s personal data and what safeguards are in place. Separately, the ruling Justice and Development Party (AKP) is expected to introduce a family package that would require identity verification for every account through phone numbers or the e-Devlet system. Children under 15 would not be allowed to create profiles, and further limits could apply to users under 18.

Meanwhile, enforcement action in the UK underscores the financial and reputational risks of non-compliance. The UK privacy watchdog fined Reddit £20 million for unlawfully processing children’s personal data and failing to protect under-13 users. The regulator found Reddit lacked ‘robust age assurance mechanisms’ and relied on easily bypassed self-declaration, meaning it had no lawful basis to handle children’s data and exposed them to potentially harmful content. Reddit also did not complete a required data protection impact assessment before 2025. The fine is the largest issued by the ICO over children’s privacy. Reddit plans to appeal.

The social media addiction trial in LA continues, with the plaintiff’s psychiatrist, Virginia Burke, taking the stand. Burke testified that plaintiff Kaley’s social media use contributed to her mental health issues, citing online bullying. However, Burke noted that Kaley also enjoyed creating and sharing video art, though she was frustrated when others claimed credit for it. Burke stated that social media addiction is not yet a widely recognised diagnosis in psychiatry and is absent from the latest Diagnostic and Statistical Manual, the key text for US mental health professionals.

Meanwhile, Meta, which is one of the defendants in the LA trial, announced that Instagram would begin alerting parents if their teenage children repeatedly search for terms related to suicide or self-harm on the platform. The new feature, rolling out first in the USA, UK, Australia, and Canada, sends notifications via email, text, WhatsApp or in-app messages to parents enrolled in Instagram’s parental supervision tools, along with expert resources to help with sensitive conversations. Instagram already blocks such content and directs users to support resources; similar alerts for teens’ interactions with AI are planned later this year.

US orders diplomats to push back against global data sovereignty laws

An internal US State Department cable seen by Reuters directs US diplomats to actively oppose foreign data sovereignty and data localisation laws and to promote a more assertive US international data policy.

The cable, signed on 18 February, argues that such regulations could disrupt global data flows, raise costs, create unnecessarily burdensome compliance requirements, and hamper cloud and AI services.

It also encourages advocacy for the Global Cross‑Border Privacy Rules (CBPR) Forum as an alternative mechanism supporting data flows with privacy protections.

The big picture. The directive underscores rising tensions with Europe’s regulatory push around privacy and digital sovereignty and reflects a shift toward defending US tech interests abroad.

Argentina debates the protection of cognitive sovereignty

A draft law on Cognitive Sovereignty and Protection of Human Attention was introduced to the Argentine Chamber of Deputies. This draft proposes a regulatory framework to safeguard cognitive autonomy, human attention, and the holistic development of children and adolescents from algorithmic recommendation systems on mass-reach digital platforms.

It recognises cognitive autonomy as a protected legal good, essential to the exercise of fundamental rights under the National Constitution and international human rights treaties.

The draft defines mass-reach digital platforms, algorithmic recommendation systems, addictive design techniques, and user consent requirements, emphasising transparency, opt-in personalisation, and accessible non-algorithmic alternatives, such as chronological feeds.

For minors, the law mandates ‘safe by default’ platform settings, limits on session duration, notifications, autoplay, and visible social metrics, with stricter prohibitions for users under 13, including bans on behavioural profiling, targeted advertising, and addictive design patterns. Platforms must conduct impact assessments for new features that affect minors and register their systems with the National Registry, subject to technical audits and annual transparency reports.

The law creates a civil and collective responsibility regime, coordinated by the Agencia de Acceso a la Información Pública, which oversees compliance, sanctions, and corrective measures.

Why does it matter? If adopted, the law would shift the legal battlefield with Big Tech from data privacy to cognitive freedom, treating addictive algorithms as a public health threat rather than just a business model.

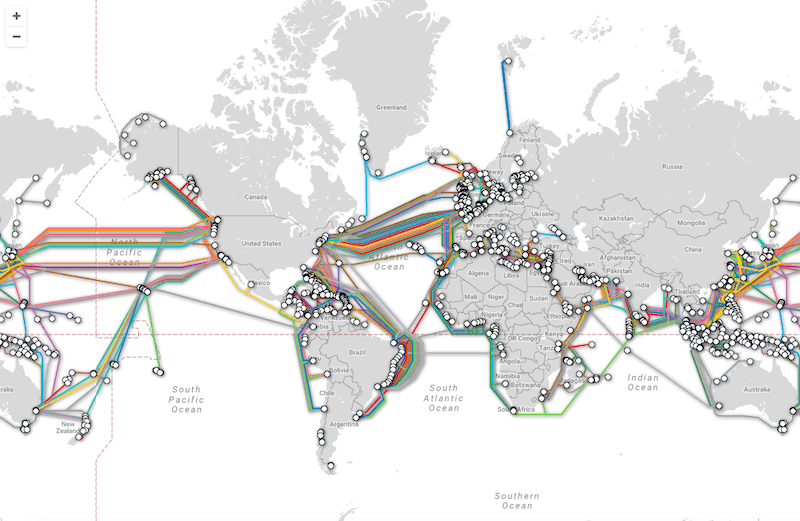

Historic transatlantic cable recovered

Engineers are retrieving TAT‑8, the first fibre-optic transatlantic cable, from the Atlantic seabed more than three decades after it revolutionised global communications.

Laid in 1988 by a consortium of AT&T, British Telecom, and France Telecom, TAT‑8 carried optical signals between the USA, the UK, and France, offering speeds of roughly 280 Mbit/s and proving the viability of long-distance fibre technology. Though retired in 2002 after an irreparable fault, the cable has remained submerged until now.

Why does it matter? The operation clears the seabed for new infrastructure, and reclaims glass fibre, copper, and steel components for recycling at a time of global metal shortages.

EU proposes extending ‘Roam Like at Home’ to Western Balkans

The European Commission has proposed opening negotiations to bring Albania, Bosnia and Herzegovina, Kosovo, Montenegro, North Macedonia, and Serbia into the EU’s ‘Roam Like at Home’ regime. If implemented, citizens and businesses would be able to make calls, send texts, and use mobile data across borders at domestic rates, both when visiting the EU and when EU citizens travel in the region. The move would build on existing voluntary arrangements between some operators and complement the Western Balkans’ regional roaming agreement.

What’s next? The Commission has adopted proposals for negotiating mandates and is now seeking approval from the Council of the EU to begin formal talks.

Cabo Verde unveils centralised government portal

The government of Cabo Verde has launched Gov.CV, a unified digital portal designed to centralise public services and streamline interactions between the state, citizens, and businesses. Gov.CV replaces multiple isolated systems with a single entry point, aiming to simplify administrative procedures and improve interoperability among government services. The new portal promises enhanced efficiency, better communication between public service entities, increased security, and real-time tracking of administrative procedures.

Why does it matter? Previously, citizens often had to navigate several platforms and repeatedly submit the same documents. By consolidating services, the government expects reductions in processing times, fewer redundancies, and a more transparent user experience. The initiative marks a significant step in Cabo Verde’s digital transformation and modernisation of public administration.

LOOKING AHEAD

The Geneva Dialogue masterclass on 4 March opens the first thematic cycle of 2026, dedicated to the security and governance of open source software. Its purpose is to establish a shared analytical baseline: how open source software (OSS) functions as a systemic dependency; how security responsibilities are distributed across maintainers, vendors, users, and public authorities; and where current governance approaches struggle to manage risk, accountability, and resilience at scale. The session is designed to bridge policy and technical perspectives and to frame the key questions that will be explored in depth during the subsequent scenario-based consultation. Registration for the event is open.

The Group of Governmental Experts on Emerging Technologies in the Area of Lethal Autonomous Weapons Systems (GGE on LAWS) will meet in Geneva from 2 to 6 March. Over 10 days, states will continue efforts to formulate, by consensus, a set of elements of an instrument and other possible measures addressing lethal autonomous weapons systems, drawing on legal, military, and technical expertise.

READING CORNER

The New Delhi AI Summit promised inclusion but exposed fractures in global AI governance. From weak commitments to geopolitical alliances, what really emerged beneath the rhetoric? What does this mean for global AI governance?