12-19 December 2025

HIGHLIGHT OF THE WEEK

From review to recalibration: What the WSIS+20 outcome means for global digital governance

The WSIS+20 review, conducted 20 years after the World Summit on the Information Society, concluded in New York with the adoption of a high-level outcome document by the UN General Assembly. The document assesses progress toward building a people-centred, inclusive, and development-oriented information society, highlights areas needing further effort, and outlines measures to strengthen international cooperation.

A major institutional decision was to make the Internet Governance Forum (IGF) a permanent UN body. The outcome also includes steps to strengthen its functioning: broadening participation (especially from developing countries and underrepresented communities), enhancing intersessional work, supporting national and regional initiatives, and adopting innovative and transparent collaboration methods. The IGF Secretariat is to be strengthened, sustainable funding is to be ensured, and the IGF is to report annually on its progress to UN bodies, including the Commission on Science and Technology for Development (CSTD).

Negotiations addressed the creation of a governmental segment at the IGF. While some member states supported this as a way to foster more dialogue among governments, others were concerned it could compromise the IGF’s multistakeholder nature. The final compromise encourages dialogue among governments with the participation of all stakeholders.

Beyond the IGF, the outcome confirms the continuation of the annual WSIS Forum and calls on the United Nations Group on the Information Society (UNGIS) to improve its efficiency and agility and to expand its membership.

WSIS action line facilitators are tasked with creating targeted implementation roadmaps linking WSIS action lines to Sustainable Development Goals (SDGs) and Global Digital Compact (GDC) commitments.

UNGIS is requested to prepare a joint implementation roadmap to strengthen coherence between WSIS and the Global Digital Compact, to be presented to CSTD in 2026. The Secretary-General will submit biennial reports on WSIS implementation, and the next high-level review is scheduled for 2035.

The document places closing digital divides at the core of the WSIS+20 agenda. It addresses multiple aspects of digital exclusion, including accessibility, affordability, quality of connectivity, inclusion of vulnerable groups, multilingualism, cultural diversity, and connecting all schools to the internet. It stresses that connectivity alone is insufficient, highlighting the importance of skills development, enabling policy environments, and human rights protection.

The outcome also emphasises open, fair, and non-discriminatory digital development, including predictable and transparent policies, legal frameworks, and technology transfer to developing countries. Environmental sustainability is highlighted, with commitments to leverage digital technologies while addressing energy use, e-waste, critical minerals, and international standards for sustainable digital products.

Human rights and ethical considerations are reaffirmed as fundamental. The document stresses that rights online mirror those offline, calls for safeguards against adverse impacts of digital technologies, and urges the private sector to respect human rights throughout the technology lifecycle. It addresses online harms such as violence, hate speech, misinformation, cyberbullying, and child sexual exploitation, while promoting media freedom, privacy, and freedom of expression.

Capacity development and financing are recognised as essential. The document highlights the need to strengthen digital skills, technical expertise, and institutional capacities, including in AI. It invites the International Telecommunication Union to establish an internal task force to assess gaps and challenges in financial mechanisms for digital development and to report its recommendations to CSTD by 2027. It also calls on the UN Inter-Agency Working Group on AI to map existing capacity-building initiatives, identify gaps, and develop programmes such as an AI capacity-building fellowship for government officials, as well as research programmes.

Finally, the outcome underscores the importance of monitoring and measurement, requesting a systematic review of existing ICT indicators and methodologies by the Partnership on Measuring ICT for Development, in cooperation with action line facilitators and the UN Statistical Commission. The Partnership is tasked with reporting to CSTD in 2027. Overall, the CSTD, ECOSOC, and the General Assembly maintain a central role in WSIS follow-up and review.

The final text reflects a broad compromise and was adopted without a vote, though some member states and groups raised concerns about certain provisions.

IN OTHER NEWS LAST WEEK

This week in AI governance

El Salvador. El Salvador has partnered with xAI to launch what the partners describe as the world’s first nationwide AI-powered education programme, deploying the Grok model across more than 5,000 public schools to deliver personalised, curriculum-aligned tutoring to more than one million students over the next two years. The initiative will support teachers with adaptive AI tools while co-developing methodologies, datasets and governance frameworks for responsible AI use in classrooms, with the aim of closing learning gaps and modernising the education system. President Nayib Bukele described the move as a leap forward in national digital transformation.

BRICS. Talks on AI governance within the BRICS bloc have deepened as member states seek to harmonise national approaches and agree on shared principles for ethical, inclusive and cooperative AI deployment. However, according to Deputy Foreign Minister Sergey Ryabkov, Russia’s BRICS sherpa, it is still premature to talk about the creation of an AI-BRICS.

Pax Silica. A diverse group of nations has announced Pax Silica, a new partnership aimed at building secure, resilient, and innovation-driven supply chains for the technologies that underpin the AI era, including critical minerals and energy inputs, advanced manufacturing, semiconductors, AI infrastructure and logistics. Analysts warn that divergent views may emerge if Washington pushes for tougher measures targeting China, potentially increasing political and economic pressure on participating nations. However, the USA, which leads the initiative, has clarified that the platform will focus on strengthening supply chains among its members rather than penalising non-members such as China.

UN AI Resource Hub. The UN AI Resource Hub has gone live as a centralised platform aggregating AI activities and expertise across the UN system. Presented by the UN Inter-Agency Working Group on AI and developed jointly by UNDP, UNESCO and ITU, the hub enables stakeholders to explore initiatives by agency, country and SDG. It supports inter-agency collaboration, capacity-building for UN member states, and greater coherence in AI governance and terminology.

ByteDance inks US joint-venture deal to head off a TikTok ban

ByteDance has signed binding agreements to shift control of TikTok’s US operations to a new joint venture majority-owned (80.1%) by American and other non-Chinese investors, including Oracle, Silver Lake and Abu Dhabi-based MGX.

ByteDance will retain a 19.9% minority stake. The arrangement is intended to meet US national security demands and avoid a ban under the 2024 divest-or-ban law.

The deal is slated to close on 22 January 2026, and US officials previously cited an implied valuation of approximately $14 billion, although the final terms have not been disclosed.

TikTok CEO Shou Zi Chew told staff the new entity will independently oversee US data protection, algorithm and software security, and content moderation, with Oracle acting as the ‘trusted security partner’ hosting US user data in a US-based cloud and auditing compliance.

China edges closer to semiconductor independence with EUV prototype

Chinese scientists have reportedly built a prototype extreme ultraviolet (EUV) lithography machine, a technology long monopolised by ASML, the Dutch company that is the world’s sole supplier of EUV systems and a central chokepoint in global semiconductor manufacturing.

EUV machines enable the production of the most advanced chips by printing ultra-fine circuit patterns onto silicon wafers, making them indispensable for AI, advanced computing and modern weapons systems.

The Chinese prototype is already generating EUV light, though it has not yet produced working chips.

The project reportedly involved former ASML engineers who reverse-engineered key elements of EUV systems, suggesting China may be closer to advanced chip-making capability than Western policymakers and analysts had assumed.

Officials are targeting chip production by 2028, with insiders pointing to 2030 as a more realistic milestone.

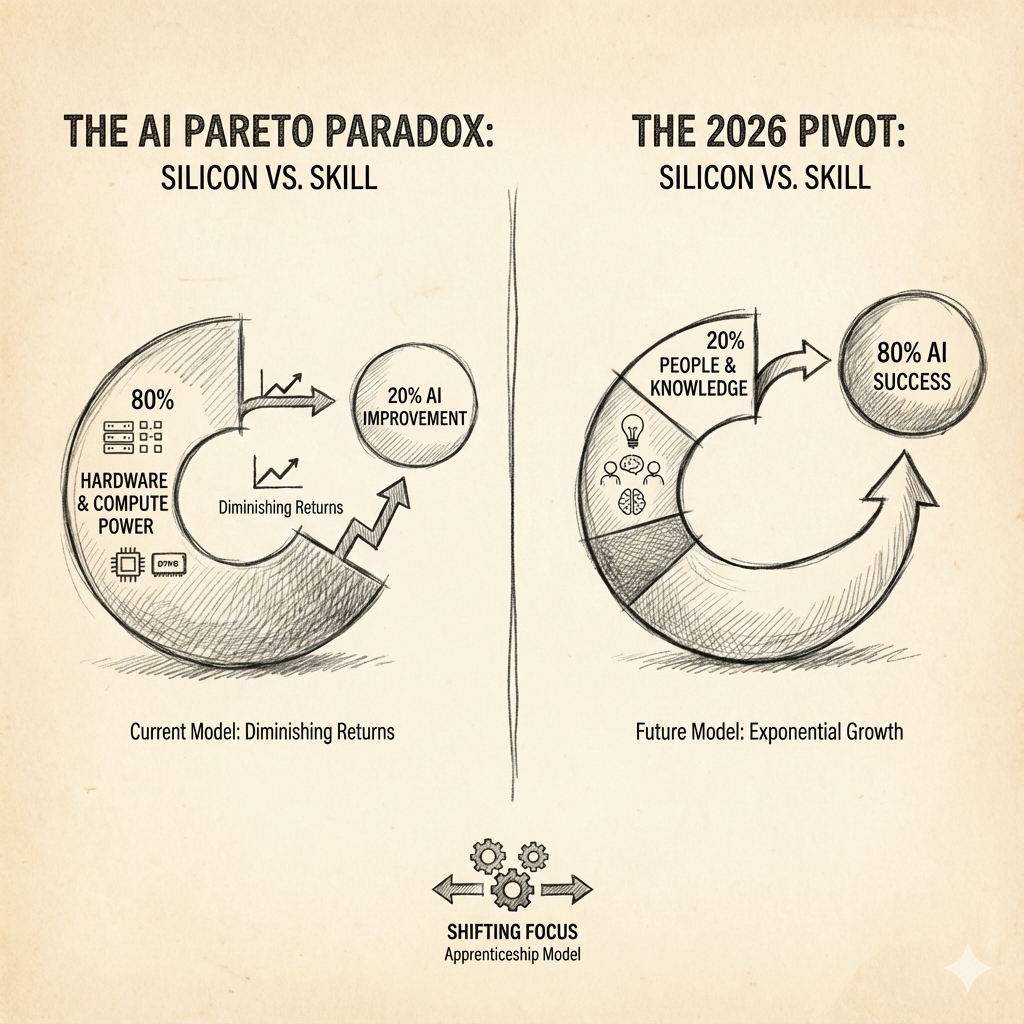

USA launches Tech Force to boost federal AI and advanced tech skills

The Trump administration has unveiled a new initiative, branded the US Tech Force, aimed at rebuilding the US government’s technical capacity after deep workforce reductions, with a particular focus on AI and digital transformation.

The programme reflects growing concern within the administration that federal agencies lack the in-house expertise needed to deploy and oversee advanced technologies, especially as AI becomes central to public administration, defence, and service delivery.

According to the official TechForce.gov website, participants will work on high-impact federal missions, addressing large-scale civic and national challenges. The programme positions itself as a bridge between Silicon Valley and Washington, encouraging experienced technologists to bring industry practices into government environments.

Supporters argue that the approach could quickly strengthen federal AI capacity and reduce reliance on external contractors. Critics, however, warn of potential conflicts of interest and question whether short-term deployments can substitute for sustained investment in the public sector workforce.

Brussels targets ultra-cheap imports

EU member states have agreed to introduce a new customs duty on low-value e-commerce imports, starting 1 July 2026. Under the agreement, a customs duty of €3 per item will be applied to parcels valued at less than €150 imported directly into the EU from third countries.

This marks a significant shift from the previous regime, under which such low-value goods were generally exempt from customs duties.

The temporary duty is intended to bridge the gap until the EU Customs Data Hub, a broader customs reform initiative designed to provide comprehensive import data and enhance enforcement capacity, becomes fully operational in 2028.

The Commission framed the measure as a necessary interim solution to ensure fair competition between EU-based retailers and overseas e-commerce sellers. The measure also bears directly on platforms such as Shein and Temu, whose business models rely on shipping vast volumes of ultra-low-value parcels.

USA reportedly suspends Tech Prosperity Deal with UK

The USA has reportedly suspended the implementation of the Tech Prosperity Deal with the UK, pausing a pact originally agreed during President Trump’s September state visit to London.

The Tech Prosperity Deal was designed to strengthen collaboration in frontier technologies, with a strong emphasis on AI, quantum, and the secure foundations needed for future innovation, and included commitments from major US tech firms to invest in the UK.

According to the Financial Times, Washington’s decision to suspend the deal reflects growing frustration with London’s stance on broader trade issues beyond technology. US officials reportedly wanted the UK to make concessions on non-tariff barriers, particularly regulatory standards affecting food and industrial goods, before advancing the tech agreement.

Neither government has commented yet.

LOOKING AHEAD

Digital Watch Weekly will take a short break over the next two weeks. Thank you for your continued engagement and support.