September 2025 in retrospect

As the UNGA80 spotlighted AI and digital cooperation, the world’s attention turned to how technology is reshaping diplomacy, democracy, and power.

This edition brings you our top analyses and storylines from across the digital sphere — from open-source AI debates to social media’s growing identity crisis.

UNGA80 spotlight — Global leaders put AI and digital cooperation at the centre of diplomacy.

The open source imperative — Why openness is becoming a strategic asset in the AI race.

The rise of AI slop — As social media turns more artificial, platforms face a crisis of authenticity.

TikTok’s American makeover — A rebrand under scrutiny reveals deeper tensions over trust and control.

Chips and sovereignty — From globalisation to guarded autonomy in the semiconductor race.

Nepal’s Discord democracy — How a banned platform became an unlikely ballot box.

The digital playground — Governments weigh fences and curfews for young users online.

Last month in Geneva — Highlights from events shaping international digital governance.

Snapshot: The developments that made waves in September

ARTIFICIAL INTELLIGENCE

The Global Dialogue on AI Governance was launched to foster open, inclusive discussions on issues such as safe and trustworthy AI, digital divides, ethics, human rights, transparency, accountability, interoperability, and open-source development.

The UN Secretary-General has opened applications for a 40-member Independent International Scientific Panel on AI, mandated under the Global Digital Compact to deliver annual evidence-based assessments of AI’s opportunities, risks, and impacts to the Global Dialogue on AI Governance and the General Assembly.

A coalition of global experts and leaders has launched the Global Call for AI Red Lines, an initiative that advocates for clear red lines to govern the development and deployment of AI.

Albania introduced the world’s first AI-powered public official, named Diella. Appointed to oversee public procurement, the virtual minister represents an attempt to use technology itself to create a more transparent and efficient government, with the goal of ensuring procedures are ‘100% incorruptible.’

Kazakhstan will establish a Ministry of Artificial Intelligence and Digital Development to drive its goal of becoming a fully digital nation within three years, as part of the forthcoming Digital Kazakhstan strategy.

Italy became the first EU country to pass a national AI law, introducing detailed rules to govern the development and use of AI technologies across key sectors such as health, work, and justice.

The Mexican government is preparing a law to regulate the use of AI in dubbing, animation, and voiceovers to prevent unauthorised voice cloning and safeguard creative rights.

The EU is set to unveil its ‘Apply AI strategy’ to boost homegrown AI as a strategic asset for competitiveness, security, and resilience, reducing reliance on US and Chinese technology.

TECHNOLOGIES

The USA, Japan, and South Korea held trilateral meetings in Seoul and Tokyo to advance quantum cooperation, focusing on securing ecosystems against cyber, physical, and intellectual property threats.

The USA and the UK have signed a Technology Prosperity Deal to strengthen collaboration in frontier technologies, with a strong emphasis on AI, quantum, and the secure foundations needed for future innovation.

Researchers at the University of Pennsylvania have demonstrated that quantum and classical networks can share existing fibre infrastructure using standard Internet Protocol, a breakthrough with broad implications for governance, infrastructure, and the future of digital societies.

European ministers have signed the Declaration of the Semicon Coalition, calling for an EU Chips Act 2.0 to strengthen semiconductor resilience, innovation, and competitiveness through collaboration, investment, skills development, sustainability, and global partnerships.

The USA proposed producing only half of American chips in Taiwan and moving the rest to the US, but Taiwan rejected the idea, saying it was never part of official discussions.

INFRASTRUCTURE

Meta has announced Candle, a new submarine cable system designed to enhance digital connectivity across East and Southeast Asia. The 8,000-kilometre network will link Japan, Taiwan, the Philippines, Indonesia, Malaysia, and Singapore by 2028, offering a record 570 terabits per second (Tbps) of capacity.

CYBERSECURITY

China has enacted one of the world’s toughest cybersecurity reporting laws, requiring major infrastructure providers and all network operators to report serious cyber incidents within one hour.

Brazil is set to adopt its first national cybersecurity law, the Cybersecurity Legal Framework, which will create a National Cybersecurity Authority to centralise oversight and strengthen protection for citizens and businesses.

Cybercriminal group Radiant has dropped its extortion attempt against Kido Schools and issued an apology, claiming all child data was deleted, but experts doubt the sincerity of the move.

Japanese beer maker Asahi Group Holdings has halted production at its main plant following a cyberattack that caused major system failures.

Australia has issued regulatory guidance for its upcoming ban on social media use by individuals under 16, effective 10 December 2025, which requires platforms to verify ages, remove underage accounts, and block re-registration attempts. The ban already covers Facebook, TikTok, YouTube, and Snapchat. Australia’s eSafety Commissioner, Julie Inman Grant, has written to 16 more companies, including WhatsApp, Reddit, Twitch, Roblox, Pinterest, Steam, Kick, and Lego Play, asking them to ‘self-assess’ whether they fall under the ban’s remit.

Greek Prime Minister Kyriakos Mitsotakis has indicated that Greece may consider banning social media use for children under the age of 16.

French lawmakers are proposing new rules to curb teen social media use, including automatic nighttime curfews disabling accounts for 15- to 18-year-olds between 10 p.m. and 8 a.m. to address mental health concerns.

The US Federal Trade Commission (FTC) has launched an investigation into the safety of AI chatbots, focusing on their impact on children and teenagers.

OpenAI has introduced a specialised version of ChatGPT tailored for teenagers, incorporating age-prediction technology to restrict access to the standard version for users under 18. The company has also introduced new parental controls, providing families with greater oversight of how their teens use the platform.

Meta is expanding teen-specific Facebook and Messenger accounts with stricter default privacy settings and controls to create a safer, more age-appropriate online environment.

ECONOMIC

Apple has asked the European Commission to repeal the Digital Markets Act (DMA), arguing that it forces a choice between weakening device security and withholding features from EU users, and citing delayed launches of services like Live Translation, iPhone Mirroring, and enhanced location tools.

The EU and Indonesia have finalised a Comprehensive Economic Partnership and Investment Protection Agreement, focusing on technology, digitalisation, and sustainability. This agreement eliminates tariffs on 98.5% of trade, expands market access, and secures critical raw materials for strategic industries.

The UAE has signed the Multilateral Competent Authority Agreement under CARF, planning implementation by 2027 to enable automatic crypto tax data sharing and strengthen sector oversight and global transparency.

The People’s Bank of China has opened a digital RMB hub in Shanghai to expand global use and strengthen financial market services.

HUMAN RIGHTS

The European Data Protection Board (EDPB) has adopted guidelines clarifying how the Digital Services Act (DSA) interacts with the General Data Protection Regulation (GDPR). The aim is to ensure consistency across rules that touch on issues like transparency of recommender systems, protection of minors, and online advertising.

China’s cyberspace regulator has proposed that major online platforms establish independent expert oversight committees to monitor data practices and security, with noncompliance risking intervention by provincial authorities.

Ghana has launched a year-long national privacy campaign to build public awareness and trust, complemented by professional initiatives like the Ghana Association of Privacy Professionals and recognition for certified Data Protection Officers.

Social media platform Discord has reported a data breach caused by a compromised third-party service provider, exposing personal information of users who had contacted its support and Trust & Safety teams.

LEGAL

The European Commission has fined Google nearly $3.5 billion for abusing its dominance in digital advertising by favouring its own AdX platform in ad-serving and buying tools, breaching EU antitrust rules.

AI startup Anthropic has agreed to pay $1.5 billion to settle a copyright lawsuit accusing the company of using pirated books to train its Claude AI chatbot.

Amazon has agreed to pay $2.5 billion to settle FTC charges that it deceptively enrolled 35 million customers in Prime without clear consent and made cancellation deliberately difficult.

Elon Musk’s xAI has sued OpenAI over alleged technology poaching, while OpenAI asked a judge to dismiss the case as baseless and part of Musk’s ongoing harassment.

Apple and OpenAI have asked a federal judge to dismiss Musk’s claim that they colluded to disadvantage xAI’s Grok chatbot, arguing their partnership is non-exclusive and that xAI has not shown any real competitive harm.

Europe’s General Court has sided with Meta and TikTok in their challenge to the EU’s Digital Services Act supervisory fee, ruling that the 0.05% levy was calculated unfairly and placed a disproportionate financial burden on the companies.

SOCIOCULTURAL

After briefly banning social media, Nepal lifted the restrictions. Nepalis then turned to Discord to choose an interim prime minister.

An executive order by US President Donald Trump set the stage for a new American-led company to take 80% ownership of TikTok, leaving ByteDance and its Chinese investors with a minority stake under 20%.

The UK government has announced plans to make digital ID mandatory for proving the right to work by the end of the current Parliament, expected no later than 2029.

A Dutch court has ruled that Meta must allow Facebook and Instagram users to set a permanent chronological feed as the default feed, making the option easily accessible and retaining it across app restarts.

YouTube will reinstate accounts previously banned for repeatedly spreading misinformation about COVID-19 and the 2020 US presidential election, marking a further rollback of its moderation policies.

Meta and OpenAI have launched social media apps featuring feeds of AI-generated videos, raising concerns about AI slop.

DEVELOPMENT

The UNDP will launch a Government Blockchain Academy in 2026 to train public officials in blockchain, AI, and emerging technologies, supporting tech-driven economic growth and sustainable development.

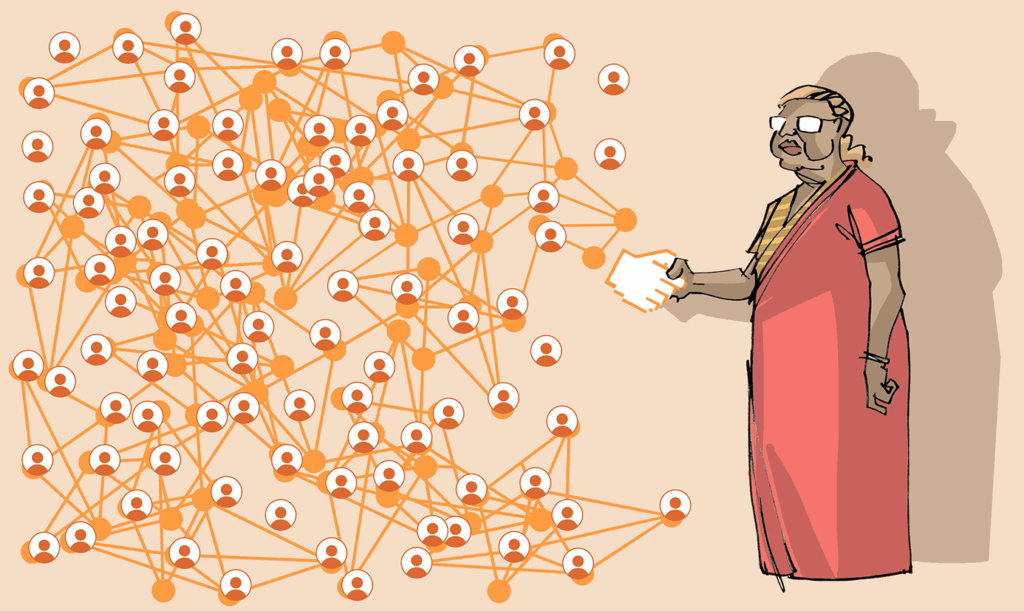

The 2025 Digital Participation Platforms Guide was released to help governments and civil society design and manage digital platforms that enhance transparency and civic engagement.

UNGA80 turns spotlight on digital issues and AI governance

Technology was everywhere at this year’s UN General Assembly. Whether in the General Debate, side events on digital prosperity, or the launch of a new dialogue on AI governance, governments and stakeholders confronted the urgent question of how to ensure that digital transformation serves humanity. Here are the key moments.

Digital Cooperation Day: From principles to implementation in global digital governance

On 22 September, the UN Office for Digital and Emerging Technologies (ODET) hosted a Digital Cooperation Day, marking the first anniversary of the Global Digital Compact. The event brought together leaders from governments, business, academia, and civil society to discuss how to shift the focus from principle-setting to the implementation of digital governance. Discussions covered inclusive digital economies, AI governance, and digital public infrastructure, with sessions on privacy, human rights in data governance, and the role of technology in sustainable development and climate action. Panels also explored the impact of AI on the arts and innovation, while roundtables highlighted strategies for responsible and equitable use of technology. The Digital Cooperation Day is set to become an annual platform for reviewing progress and addressing new challenges in international digital cooperation.

Digital@UNGA 2025: Digital for Good – For People and Prosperity

On 23 September, the International Telecommunication Union (ITU) and the UN Development Programme (UNDP) hosted Digital@UNGA 2025: Digital for Good – For People and Prosperity. The anchor event spotlighted digital technologies as tools for inclusion, equity, and opportunity. Affiliate sessions throughout the week explored trust, rights, and universal connectivity, while side events examined issues ranging from AI for the SDGs and digital identity to green infrastructure, early-warning systems, and space-based connectivity. The initiative sought to showcase digital tools as a force for healthcare, education, and economic empowerment, and to inspire action and dialogue towards an equitable and empowering digital future for all.

Security Council debate on AI

The UN Security Council held a high-level debate on AI, highlighting the technology’s promise and its urgent risks for peace and security. The debate, chaired by Republic of Korea President Lee Jae Myung, underscored a shared recognition that AI offers enormous benefits, but that without strong global cooperation and governance, it could deepen divides, destabilise societies, and reshape warfare in dangerous ways.

The launch of the Global Dialogue on AI Governance

A major highlight was the High-level Meeting to Launch the Global Dialogue on AI Governance on 25 September.

Senior leaders outlined how AI could drive economic growth and development, particularly in the Global South, while plenary discussions saw stakeholders present their perspectives on building agile, responsive and inclusive international AI governance for humanity. A youth representative closed the session, underscoring younger generations’ stake in shaping AI’s future.

The Global Dialogue on AI Governance is tasked, as decided by the UN General Assembly this August, with facilitating open, transparent and inclusive discussions on AI governance. The dialogue is set to hold its first meeting in 2026, alongside the AI for Good Summit in Geneva.

Launch of open call for Independent International Scientific Panel on AI

The UN Secretary-General has launched an open call for candidates to join the Independent International Scientific Panel on Artificial Intelligence. Agreed by member states in September 2024 as part of the Global Digital Compact, the 40-member Panel will provide evidence-based scientific assessments on AI’s opportunities, risks, and impacts. Its work will culminate in an annual, policy-relevant – but non-prescriptive – summary report presented to the Global Dialogue, along with up to two updates per year to engage with the General Assembly plenary. Following the call for nominations, the Secretary-General will recommend 40 members for appointment by the General Assembly.

The General Debate of the UNGA80

The General Debate opened on 23 September under the theme ‘Better together: 80 years and more for peace, development and human rights’. While leaders addressed a broad spectrum of global challenges, digital and AI governance were recurring concerns.

Technology must remain a servant of humanity, not its master. Debates underscored the need to align rapid technological change with global governance, with countries calling for stronger international cooperation and responsible approaches to the development and use of technology. Delegations emphasised that digital technologies must serve humanity – advancing development, human rights, and democracy – while warning of growing risks posed by AI misuse, disinformation, hybrid warfare, cyber threats, and the governance of critical minerals exploitation.

Member states voiced both optimism and concern: they called for ethical, human-centred and responsible AI governance, stronger safeguards for peace and security, and rules and ethical standards to manage risks, including in military applications. Calls for a global AI framework were echoed in various statements, alongside broader appeals for inclusive digital cooperation, accelerated technology transfer, and investment in infrastructure, literacy, and talent development. Several leaders welcomed the new AI mechanisms established by the UNGA.

Alongside the warnings, governments stressed the promise of digital technologies for development, health, education, productivity, and the green energy transition. Statements highlighted the importance of inclusion, equitable access, connectivity, digital literacy, and capacity-building, especially for developing countries, noting that AI can boost economic autonomy and long-term prosperity if governed responsibly. Delegations also underlined the need to actively address the digital divide through investment in infrastructure, skills, and technology transfer, with many emphasising that the benefits of this new era must be shared fairly with all.

A recurring theme was that bridging technological and social divides requires mature multilateralism and reinforced international cooperation. Speakers warned that digital disruption is deepening geopolitical divides, with smaller and developing nations demanding a voice in shaping emerging governance regimes. Some also noted that advancing secure, rights-based, and human-centric technologies, protecting against cybercrime, and managing the influence of global tech corporations are essential for global stability.

There were also some calls for universal guardrails, international norms, and new frameworks to address risks linked to cybercrime, disinformation, repression, hybrid warfare, and the mental health of youth.

The discussions underscored a common thread: while digital innovation offers extraordinary opportunities for development, inclusion, and growth, its risks demand shared standards, global cooperation, and a commitment to human dignity.

The bigger picture: Comprehensive coverage of UNGA80 can be found on our dedicated web page.

The General Debate at the 80th session of the UN General Assembly brought together high-level representatives from across the globe to discuss the most pressing issues of our time. The session took place against the backdrop of the UN’s 80th anniversary, serving as a moment for both reflection and a forward-looking assessment of the organisation’s role and relevance.

The strategic imperative of open source AI

This year’s open-source pivot is probably the most consequential AI development since the launch of ChatGPT in November 2022. On 20 January, DeepSeek released its open-source reasoning system, an ‘AI Sputnik moment,’ in Marc Andreessen’s words. Then, on 23 July 2025, the Trump administration made open-source a US strategic priority in ‘Winning the Race: America’s AI Action Plan.’

This AI shift has been counterintuitive. Chinese companies historically favoured proprietary software, and Republicans were rarely open-source champions. Why did both powers shift course? Because open-source has moved from an ideological preference to a strategic priority. The logic is simple: players must adapt to the open-source wave or risk watching global AI standards tilt decisively towards their rival.

What are the strengths of open-source AI?

Open-source AI has emerged as a powerful force, with strengths across speed, cost, motivation, integration, and efficiency.

Its speed comes from collective development: millions of models are constantly tested and improved by a global community, enabling innovation far faster than closed corporate labs.

Cost is another advantage, as expenses are distributed across contributors and companies, reducing financial barriers and shifting focus from brute-force computing to smarter architectures.

The motivation of younger developers also drives progress, since open ecosystems attract talent seeking purpose, learning, and collaboration.

Crucially, integration is easier in open systems: their transparency and adaptability make them better suited for embedding AI into industries, education, and workflows, fuelling a diverse ecosystem of tools and agents.

Finally, open-source showcases size efficiency. Smaller, well-designed models like Mistral 7B can match or surpass larger ones, lowering hardware demands and making advanced AI broadly accessible.

The ascendant open source paradigm

We are witnessing a major shift towards acceptance of open-source AI as not only ethically favourable but, even more importantly, a strategically superior solution for the future of AI. Openness wins not because it is a moral ideal, but because it delivers superior interoperability, resilience, and innovation at scale. The open-source shift in AI offers hope that societal values can be aligned with corporate interests.

This text was adapted from Jovan Kurbalija’s analysis ‘The strategic imperative of open source AI’. Read the original below.

This year, open-source AI transformed from an ideological preference into a strategic imperative, a shift echoing a clear lesson from digital history: open systems win. Just as the open protocols of the internet and the collaborative Linux operating system outcompeted closed, proprietary rivals, today’s open AI models are set to dominate. Openness is no longer just a principle; it is the winning strategy.

The rise of AI slop: When social media turns more artificial

On 25 September, Meta quietly introduced Vibes, a new short-form video feed in the Meta AI app, wholly powered by AI. Rather than spotlighting real creators or grassroots content, the feed is built around synthetic content.

On 30 September, OpenAI revealed Sora, a companion app centred on AI-created short videos, complete with ‘cameo’ features letting people insert their own faces (with permission) into generative scenes.

From the outside, both Vibes and Sora look like competitive copies of TikTok or Reels — only their entire content pipeline is synthetic.

They are the first dedicated firehoses of what has been officially termed ‘AI slop.’ This phrase, added to the Cambridge Dictionary in July 2025 and defined as ‘content on the internet that is of very low quality, especially when it is created by AI,’ perfectly captures the core concern.

Across the tech world, reactions ranged from bemused to alarmed. Because while launching a new social media product is hardly radical, creating a platform whose entire video ecosystem is synthetic — devoid of human spark — is something else entirely.

Why is it concerning? Because it blurs the line between real and fake, making it hard to trust what you see. It can copy creators’ work without permission and flood feeds with shallow, meaningless videos that grab attention but add little value. Algorithms exploit user preferences, while features like synthetic cameos can be misused for bullying or identity abuse. And then there’s also the fact that AI clips typically lack human stories and emotion, eroding authenticity.

What’s next? Ultimately, this shift to AI-generated content raises a philosophical question: What is the purpose of our shared digital spaces?

As we move forward, perhaps we need to approach this new landscape more thoughtfully — embracing innovation where it serves us, but always making space for the authentic, the original, and the human.

For now, Vibes and Sora have not yet been rolled out worldwide. Given the tepid response from early adopters, their success is far from guaranteed. Ultimately, their fate hinges entirely on the extent to which people will use them.

TikTok’s great American makeover

With an executive order, US President Donald Trump brought the protracted TikTok drama to a climax, paving the way for a new company – led by American investors who will own 80% of the platform – to take control of the app. TikTok’s (soon to be former) parent company, ByteDance, and its Chinese investors will retain a minority stake of less than 20%.

A new US-led joint venture will oversee the app’s algorithm, code, and content moderation, while all American user data will be stored on Oracle-run servers in the USA. The venture will have a seven-member board, with six seats held by American experts in cybersecurity and national security.

Media reports indicate that the US investor group is led by software giant Oracle, while prominent backers include private equity firm Silver Lake, media moguls Rupert and Lachlan Murdoch, and Dell’s CEO Michael Dell.

The crux of the matter: All US user data will be stored on Oracle-run servers in the USA. Software updates, algorithms, and data flows will face strict monitoring, with recommendation models retrained and overseen by US security partners to guard against manipulation.

The US government has long argued that the app’s access to US user data poses significant risks, as ByteDance is possibly subject to China’s 2017 National Intelligence Law, which requires any Chinese entity to support, assist, and cooperate with state intelligence work – including, possibly, the transfer of US citizens’ TikTok data to China. For their part, TikTok and ByteDance have maintained that TikTok operates independently and respects user privacy.

In early 2024, the US Congress, citing national security risks, passed a law requiring ByteDance, TikTok’s Chinese parent company, to divest control of the app or face a ban in the USA. The law, which had bipartisan support in Congress, was later upheld by the Supreme Court.

However, the Trump administration has repeatedly postponed enforcement via executive orders.

Economic and trade negotiations with China have been central to the delay. As the fourth round of talks in Madrid coincided with the latest deadline, Trump opted to extend the deadline again on 16 September — this time until 16 December 2025 — giving TikTok more breathing room.

What’s next? The executive order allows 120 days for the deal to be finalised, and some details remain to be hashed out, such as whether US users will need to install a new app altogether. Nevertheless, this agreement marks a significant step in resolving one of the most high-profile tech-policy disputes of the decade.

The bottom line: For millions of American users, the political wrangling is background noise. The real change will be felt in their feeds – whether the new, American-guarded TikTok can retain the chaotic creativity that made it a cultural force.

Chips and sovereignty: From globalisation to guarded autonomy

The semiconductor industry is undergoing a profound geopolitical shift. Once emblematic of globalisation and interdependence, chips are now treated as strategic assets, driving states to recalibrate policies around sovereignty, resilience, and control.

China’s recent actions underscore this trend. Its Ministry of Commerce has initiated an anti-dumping investigation into US analogue chips, accusing US firms of ‘lowering and suppressing’ prices in ways that hurt domestic producers. It covers legacy chips built on older 40nm-plus process nodes – not the cutting-edge AI accelerators that dominate geopolitical debates, but the everyday workhorse components that power smart appliances, industrial equipment, and automobiles. These mature nodes account for a massive share of China’s consumption, with US firms supplying more than 40% of the market in recent years.

For China’s domestic industry, the probe is an opportunity. Analysts say it could force foreign suppliers to cede market share to local firms, which are concentrated in Jiangsu and other industrial provinces. At the same time, there are reports that China is asking tech companies to stop purchasing Nvidia’s most powerful processors.

And speaking of Nvidia, the company is in the crosshairs again, as China’s State Administration for Market Regulation (SAMR) issued a preliminary finding that Nvidia violated antitrust law in connection with its 2020 acquisition of Mellanox Technologies. Depending on the outcome of the investigation, Nvidia could face penalties.

Meanwhile, Washington is tightening its own grip. Last month, the government moved into unprecedented territory by considering equity stakes in domestic chipmakers in exchange for CHIPS Act grants. Intel is at the centre of this experiment, with $8.87 billion in grant money potentially converted into a 10% government stake – though the outcome of negotiations remains unclear. Critics warn that such ownership risks politicising the industry, noting that Trump’s claims to have ‘saved Intel’ may be more theatre than strategy.

In addition, the White House is preparing to impose sweeping tariffs, with imports of semiconductors facing rates of nearly 100%, and exemptions granted to companies that manufacture or commit to manufacturing in the US. Chinese firms, such as SMIC and Huawei, are expected to be hit the hardest. The USA will also require annual licence renewals for South Korean firms Samsung and SK Hynix to supply advanced chips to Chinese factories – a reminder that even America’s allies are caught in the middle.

Nvidia announced a $5 billion investment in Intel to co-develop custom chips with the company. Together, these moves reflect Washington’s broader effort to strengthen its semiconductor leadership amid growing competition from China.

Amid negotiations with Taiwan, US Commerce Secretary Howard Lutnick floated a proposal that only half of America’s chips should be produced in Taiwan, relocating the other half to the USA, to reduce dependence on a single foreign supplier. But Taiwan’s Vice Premier Cheng Li-chiun dismissed the idea outright, stating that such terms were never part of formal talks and would not be accepted. While Taiwan is willing to deepen commercial ties with the US, it refuses to relinquish control over the advanced semiconductor capabilities that underpin its geopolitical leverage.

The EU is also asserting tighter control over its technological assets. EU member states have called for a revised and more assertive EU Chips Act, arguing that Europe must treat semiconductors as a strategic industry on par with aerospace and defence. The signatories – representing all 27 EU economies – warn that while competitors like the USA and Asia are rapidly scaling public investment, Europe risks falling behind unless it strengthens its domestic ecosystem across R&D, design, manufacturing, and workforce development.

The proposed ‘second-phase Chips Act’ is built around three strategic objectives:

- Prosperity, through a competitive and innovation-led semiconductor economy

- Indispensability, by securing key control points in the value chain

- Resilience, to guarantee supply for critical sectors during geopolitical shocks.

The EU’s message is clear: Europe intends not just to participate in the semiconductor industry, but to shape it on its own terms, backed by coordinated investment, industrial alliances, and international partnerships that reinforce — rather than dilute — strategic autonomy.

The bottom line: The age of supplier nations is over; the age of semiconductor sovereignty has begun. The message is the same around the globe: chips are too critical to trust to someone else.

Nepal’s Discord democracy: How a banned platform became a ballot box

In a historic first for democracy, a country has chosen its interim prime minister via a messaging app.

In early September, Nepal was thrown into turmoil after the government abruptly banned 26 social media platforms, including Facebook, YouTube, X, and Discord, citing failure to comply with registration rules.

The move sparked outrage, particularly among the country’s Gen Z, who poured into the streets, accusing officials of corruption.

As the ban took effect, the country’s digitally literate youth quickly adapted their strategies:

- VPN demand exploded. Proton’s censorship observatory recorded an 8,000% surge over baseline in sign-ups from Nepal beginning 4 September, consistent with independent tech press findings.

- Alt-messaging and community hubs: With legacy apps dark, Discord emerged as a ‘virtual control room,’ a natural fit for a generation raised in multiplayer servers. Despite the ban, the movement’s core group (Hami Nepal) organised on Discord and Instagram. Several Indian outlets, including the Times of India, claimed that more than 100,000 users converged in sprawling voice and text channels to debate leadership choices during the transition.

- Peer-to-peer and ‘mesh’ apps: Encrypted, Bluetooth-based tools, most prominently Bitchat, saw a burst of downloads as protest organisers prepared for intermittent internet access and cellular throttling, a trend covered by both mainstream and crypto-trade press. The appeal was simple: such apps work offline, hop device-to-device, and are harder to block.

- Locally registered holdouts: Because TikTok and Viber had registered with the Ministry of Communication and Information Technology (MoCIT), they remained online and quickly became funnels for updates, citizen journalism and short-form explainers about where to assemble and how to avoid police cordons.

The protests, however, quickly turned deadly. Within days, the social media ban was lifted.

On 12 September, the Discord community organised a digital poll for an interim prime minister, with former Supreme Court Chief Justice Sushila Karki emerging as the winner.

Karki was sworn in the same evening. On her recommendation, the President dissolved parliament, and new elections are scheduled for 5 March 2026, after which Karki will step down.

Zooming out. On platforms like Discord, Nepali Gen Z encountered a form of digital democracy that felt more egalitarian than physical spaces, precisely because of the anonymity it affords. This has turned Discord into a political arena where participants can speak freely without fear of retaliation and take part in an unprecedentedly ‘democratic’ process on equal footing. Nepalis turned to Discord to debate the country’s political future, fact-check rumours and collect nominations for the country’s future leaders.

Yet, this very openness also makes democratic practice more difficult: The absence of accountability can deepen polarisation and fuel misinformation, as was the case in Nepal, where false claims circulated about protest leaders being foreign citizens, while pro-monarchy groups operated in parallel Discord channels.

However temporary or symbolic, the episode underscored how digital platforms can become political arenas when traditional ones falter. When official institutions lose legitimacy, people will instinctively repurpose the tools at their disposal to build new ones. The events are likely to offer lessons for other governments grappling with the role of censorship during times of unrest.

The digital playground gets a fence and a curfew

In response to rising concerns over the impact of AI and social media on teenagers, governments and tech companies are implementing new measures to enhance online safety for young users.

Australia has released its regulatory guidance for the incoming nationwide ban on social media access for children under 16, effective 10 December 2025. The legislation requires platforms to verify users’ ages and ensure that minors are not accessing their services. Platforms must detect and remove underage accounts, communicating clearly with affected users. Platforms are also expected to block attempts to re-register. It remains uncertain whether removed accounts will have their content deleted or if they can be reactivated once the user turns 16.

Greek Prime Minister Kyriakos Mitsotakis has indicated that Greece may consider banning social media use for children under the age of 16. Speaking at a UN event in New York, ‘Protecting Children in the Digital Age’, as part of the 80th UN General Assembly, he warned that unchecked social media exposure constitutes the largest uncontrolled experiment on children’s minds. He highlighted national initiatives such as the ban on mobile phones in schools and the launch of parco.gov.gr, which offers age verification and parental controls. Mitsotakis emphasised that enforcement challenges should not hinder action and called for international cooperation to address the increasing risks to children online.

French lawmakers are proposing stricter regulations on teen social media use, including mandatory nighttime curfews. A parliamentary report suggests that social media accounts for 15- to 18-year-olds should be automatically disabled between 10 p.m. and 8 a.m. to help combat mental health issues. This proposal follows concerns about the psychological impact of platforms like TikTok on minors.

In the USA, the Federal Trade Commission (FTC) has launched an investigation into the safety of AI chatbots, focusing on their impact on children and teenagers. Seven firms, including Alphabet, Meta, OpenAI and Snap, have been asked to provide information about how they address risks linked to AI chatbots designed to mimic human relationships. Not long after, grieving parents testified before the US Congress, urging lawmakers to regulate AI chatbots after their children died by suicide or self-harmed following interactions with these tools.

OpenAI has introduced a specialised version of ChatGPT tailored for teenagers, incorporating age-prediction technology to restrict access to the standard version for users under 18. Where uncertainty exists, it will assume the user is a teenager. If signs of suicidal thoughts appear, the company says it will first try to alert parents. Where there is imminent risk and parents cannot be reached, OpenAI is prepared to notify the authorities. The initiative aims to address growing concerns about the mental health risks associated with AI chatbots, but it also raises questions about privacy and freedom of expression.

Alongside this, OpenAI has introduced new parental controls, providing families with greater oversight of how their teens use the platform. Parents can link accounts with their children and manage settings through a simple dashboard, while stronger safeguards filter harmful content and restrict roleplay involving sex, violence, or extreme beauty ideals. Families can also fine-tune features such as voice mode, memory, and image generation, or set quiet hours when ChatGPT cannot be accessed.

Meta, meanwhile, is expanding its rollout of teen-specific accounts for Facebook and Messenger. These accounts include features designed to provide a more age-appropriate environment, such as stricter default privacy settings and limits on unwanted interactions. The company says these safeguards are part of a broader effort to balance connectivity with protection for young users.

The intentions are largely good, but a patchwork of bans, curfews, and algorithmic surveillance just underscores that the path forward is unclear. Meanwhile, the kids are almost certainly already finding the loopholes.

Last month in Geneva: Developments, events and takeaways

The digital governance scene was busy in Geneva in September. Here’s what we tried to follow.

ITU Council Working Groups (CWGs)

At the International Telecommunication Union (ITU), Council Working Groups (CWGs) met between 8 and 19 September to advance various tracks of work. The CWG on WSIS and SDGs looked at the work undertaken by ITU with regard to the implementation of WSIS outcomes and the 2030 Agenda, and discussed issues related to the ongoing WSIS+20 review process. The Expert Group on ITRs continued working on the final report it needs to submit to the ITU Council in response to its task of reviewing the International Telecommunication Regulations (ITRs), considering evolving global trends, tech developments, and current regulatory practices. A draft version of the report notes that members have divergent views on whether the ITRs need revision and even on their overall relevance; there also doesn’t seem to be a consensus on whether and how the work on revising the ITRs should continue.

On another topic, the CWG on international internet-related public policy issues held an open consultation on ensuring meaningful connectivity for landlocked developing countries. Views also diverged on a proposal to improve the work of the CWG and to ‘facilitate discussions within the [CWG] regarding international internet governance’.

Outer Space Security Conference

The UN Institute for Disarmament Research (UNIDIR) hosted the Outer Space Security Conference on 9-10 September, bringing together diplomats, policy makers, private actors, experts from the military sectors and others to look at ways in which to shape a secure, inclusive and sustainable future for outer space. Some of the issues discussed revolved around the implications of using emerging technologies such as AI and autonomous systems in the context of space technology and the cybersecurity challenges associated with such uses.

CSTD WG on data governance

The third meeting of the UN CSTD Working Group on Data Governance (WGDG) took place on 15-16 September. The focus of this meeting was on the work being carried out in the four working tracks of the WGDG: 1. principles of data governance at all levels; 2. interoperability between national, regional and international data systems; 3. considerations of sharing the benefits of data; 4. facilitation of safe, secure and trusted data flows, including cross-border data flows.

WGDG members reviewed the synthesis reports produced by the CSTD Secretariat, based on the responses to questionnaires proposed by the co-facilitators of working tracks. The WGDG decided to postpone the deadline for contributions to 7 October. More information can be found in the ‘call for contributions’ on the website of the WGDG.

Keep track of WGDG debates via our dedicated page on the Digital Watch Observatory.

WTO Public Forum 2025

The WTO’s largest outreach event, the WTO Public Forum, took place from 17 to 18 September under the theme ‘Enhance, Create and Preserve’. Digital issues were high on the agenda this year, with sessions dedicated to topics such as AI and trade, digital resilience, the moratorium on customs duties on electronic transmissions, and e-commerce negotiations. Other issues were also salient, such as the uncertainty created by rising tariffs and the need for WTO reform. During the Forum, the WTO launched the 2025 World Trade Report, under the title ‘Making trade and AI work together to the benefit of all’. The report explores AI’s potential to boost global trade, particularly through digitally deliverable services. It argues that AI can lower trade costs, improve supply-chain efficiency, and create opportunities for small firms and developing countries, but warns that without deliberate action, AI could deepen global inequalities and widen the gap between advanced and developing economies.

Human Rights Council

The Human Rights Council started its 60th session on 8 September, and two reports on its agenda stood out for us. The first, on 18 September, was a report on the human rights implications of new and emerging technologies in the military domain. Prepared by the Human Rights Council Advisory Committee, the report recommends, among other measures, that ‘states and international organizations should consider adopting binding or other effective measures to ensure that new and emerging technologies in the military domain whose design, development or use pose significant risks of misuse, abuse or irreversible harm – particularly where such risks may result in human rights violations – are not developed, deployed or used’.

The second was a report on privacy in the digital age by the Office of the High Commissioner for Human Rights. It looks at challenges and risks with regard to discrimination and the unequal enjoyment of the right to privacy associated with the collection and processing of data, and offers some recommendations on how to prevent digitalisation from perpetuating or deepening discrimination and exclusion. Among these are recommendations for states to protect individuals from human rights abuses linked to corporate data processing and to ensure that digital public infrastructures are designed and used in ways that uphold the rights to privacy, non-discrimination and equality.