Australia’s eSafety Commissioner has launched a major evaluation of the country’s Social Media Minimum Age requirement to understand how platforms are applying it and what effects it is having on children, young people and families.

The study aims to deliver robust evidence about both intended and unintended impacts as the national debate on youth, wellbeing and digital environments intensifies.

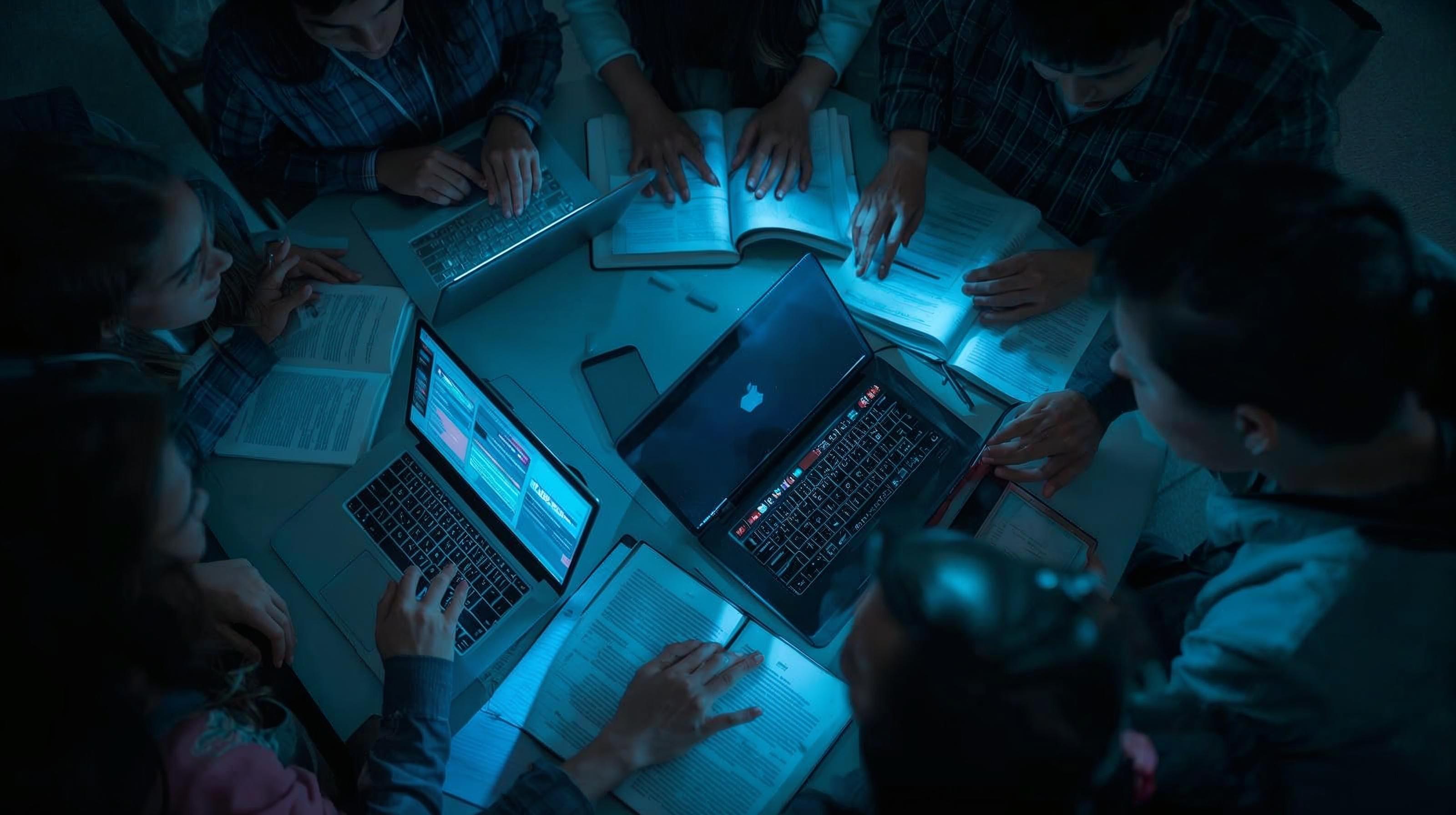

Running for more than two years, the research will follow over four thousand children and families in Australia, combining surveys, interviews, group discussions and privacy-protected smartphone tracking.

Administrative data from national literacy assessments and health systems will be linked to deepen understanding of online behaviour, wellbeing and exposure to risk. All research materials are publicly available through the Open Science Framework to maintain transparency.

The project is led by eSafety’s Research and Evaluation team in partnership with the Stanford University Social Media Lab and an Academic Advisory Group of specialists in mental health, youth development and digital technologies.

Young people themselves are shaping the study through the eSafety Youth Council, ensuring that the interpretation of results reflects lived experience rather than external assumptions. Full ethics approval underpins the methodology, which meets strict standards of integrity and privacy.

Findings will be released from late 2026 onward, with early reports analysing the experiences of children under sixteen.

The results will inform a legislative review conducted by Australia’s Department of Infrastructure, Transport, Regional Development, Communications, Sport and the Arts.

eSafety expects the evaluation to become a major evidence source for policymakers, researchers and communities as the global conversation on minors and social media regulation continues.
