Google Research has outlined how it applies foundational AI and science research to three major domains for tangible global impact, under a framework the team calls the ‘magic cycle’.

The three focus areas highlighted are fighting cancer with AI, quantum computing for medicines and materials, and understanding Earth at scale with Earth AI.

One of the flagship tools is DeepSomatic, an AI system developed to detect genetic variants in cancer cells that previous techniques missed. Working with a children’s hospital, researchers used the tool to identify ten new variants in childhood leukaemia samples. Significantly, DeepSomatic was also applied to a type of brain cancer it had never encountered before and still flagged likely causal variants.

Google Research is exploring the frontiers of quantum computing with its quantum chip, Willow, and algorithms such as Quantum Echoes, which aim to simulate molecular behaviour with a precision that classical computers struggle to reach. By capturing quantum-scale phenomena, these efforts target improved medicines, better batteries and advanced materials.

The Earth AI initiative aims to model complex, interconnected systems, from weather and infrastructure to population vulnerability, by bringing disparate geospatial data together in unified models. For example, predicting which communities are most at risk in a storm requires combining meteorological, infrastructure and socioeconomic data.

Google Research states that across these domains, research and applied work feed each other: foundational research leads to tools, which, when deployed, reveal new challenges that drive fresh research. This self-reinforcing loop is what the team calls the ‘magic cycle’.

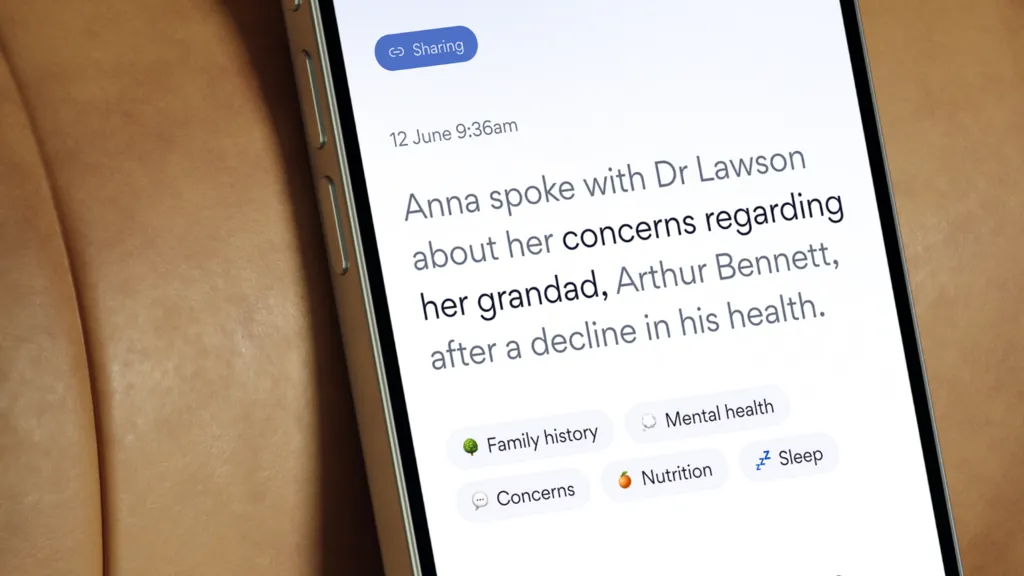
