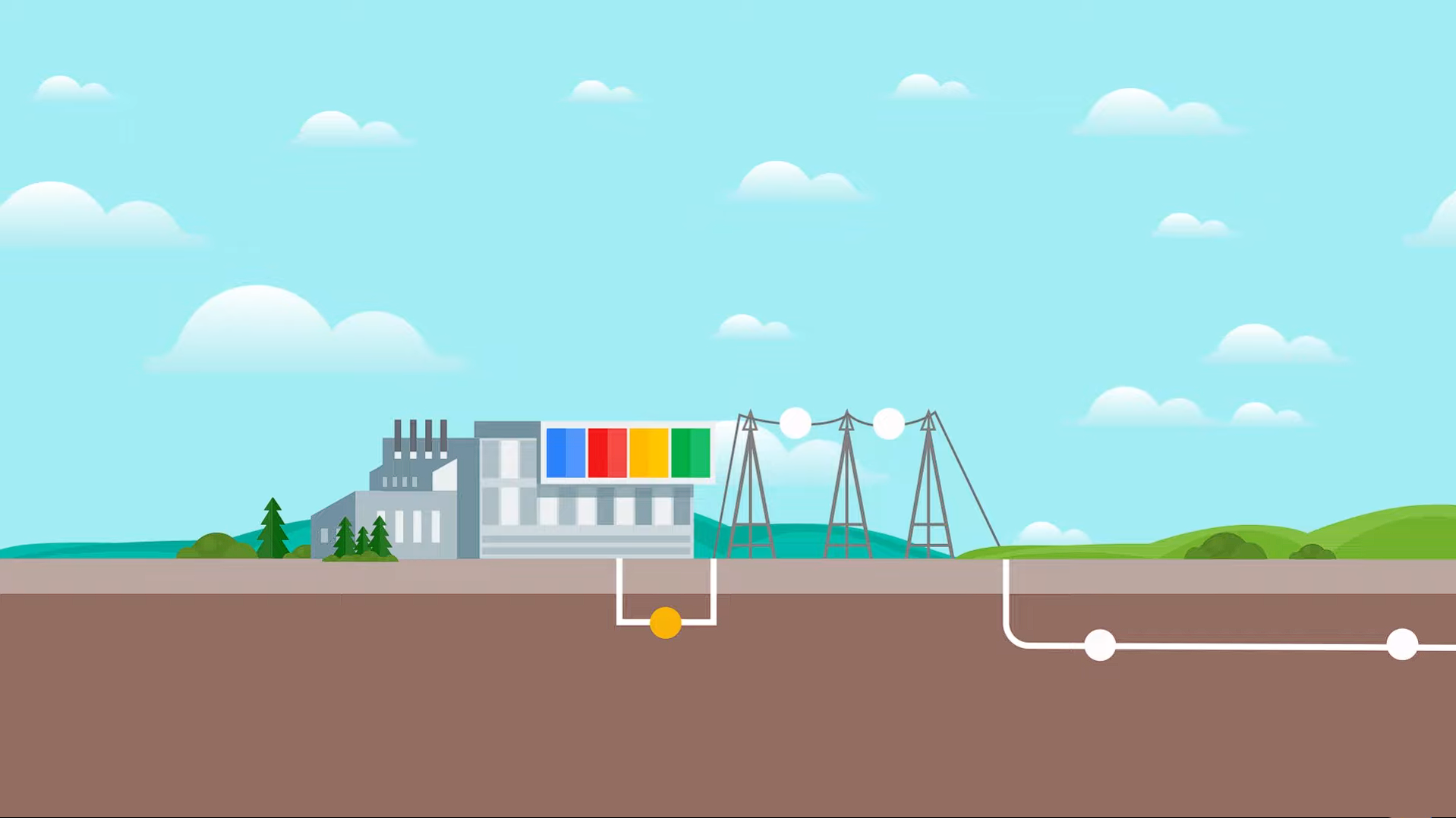

Google has become the first major tech firm to sign formal agreements with US electric utilities to ease grid pressure. The deals come as data centres drive unprecedented energy demand, straining power infrastructure in several regions.

The company will work with Indiana Michigan Power and the Tennessee Valley Authority to reduce its electricity usage during periods of peak demand. These arrangements will help free up power for other utility customers when needed.

Under the agreements, Google will temporarily scale down its data centre operations, particularly those linked to energy-intensive AI and machine learning workloads.

Google described the initiative as a way to speed up data centre integration with local grids while avoiding costly infrastructure expansion. The move reflects growing concern over AI’s rising energy footprint.

Demand-response programmes, once used mainly in heavy manufacturing and crypto mining, are now being adopted by tech firms to stabilise grids in return for lower energy costs.
