Nokia has announced a $4 billion expansion of its US research, development, and manufacturing operations to accelerate AI-ready networking technologies. The move builds on the $2.3 billion Nokia had already committed in the US through its Infinera acquisition and semiconductor manufacturing plans.

The expanded investment will support mobile, fixed access, IP, optical, data centre networking, and defence solutions. Approximately $3.5 billion will be allocated for R&D, with $500 million dedicated to manufacturing and capital expenditures in Texas, New Jersey, and Pennsylvania.

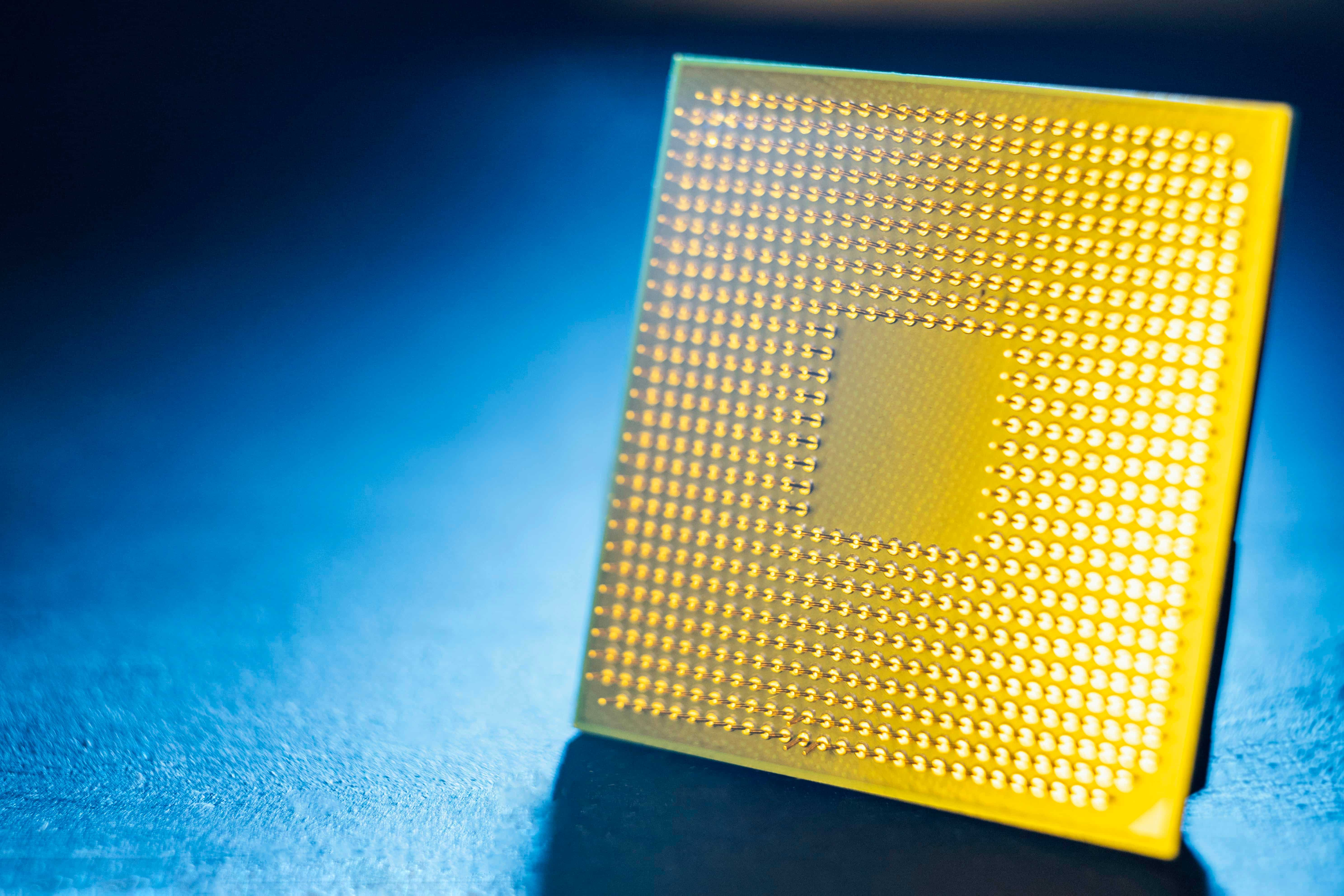

Nokia aims to advance AI-optimised networks with enhanced security, productivity, and energy efficiency. The company will also focus on automation, quantum-safe networks, semiconductor testing, and advanced material sciences to drive innovation.

Government and company leaders highlighted the strategic impact of Nokia’s US investment. Secretary of Commerce Howard Lutnick praised the plan for boosting US tech capacity, while Nokia CEO Justin Hotard said it would secure the future of AI-driven networks.
