AI is rapidly reshaping what it means to work as a software developer, and the shift is already visible inside organisations that build and run digital products every day. In the blog post ‘Why the software developer career may (not) survive: Diplo’s experience’, Jovan Kurbalija argues that while AI is making large parts of traditional coding less valuable, it is also opening a new professional lane for people who can embed, configure, and improve AI systems in real-world settings.

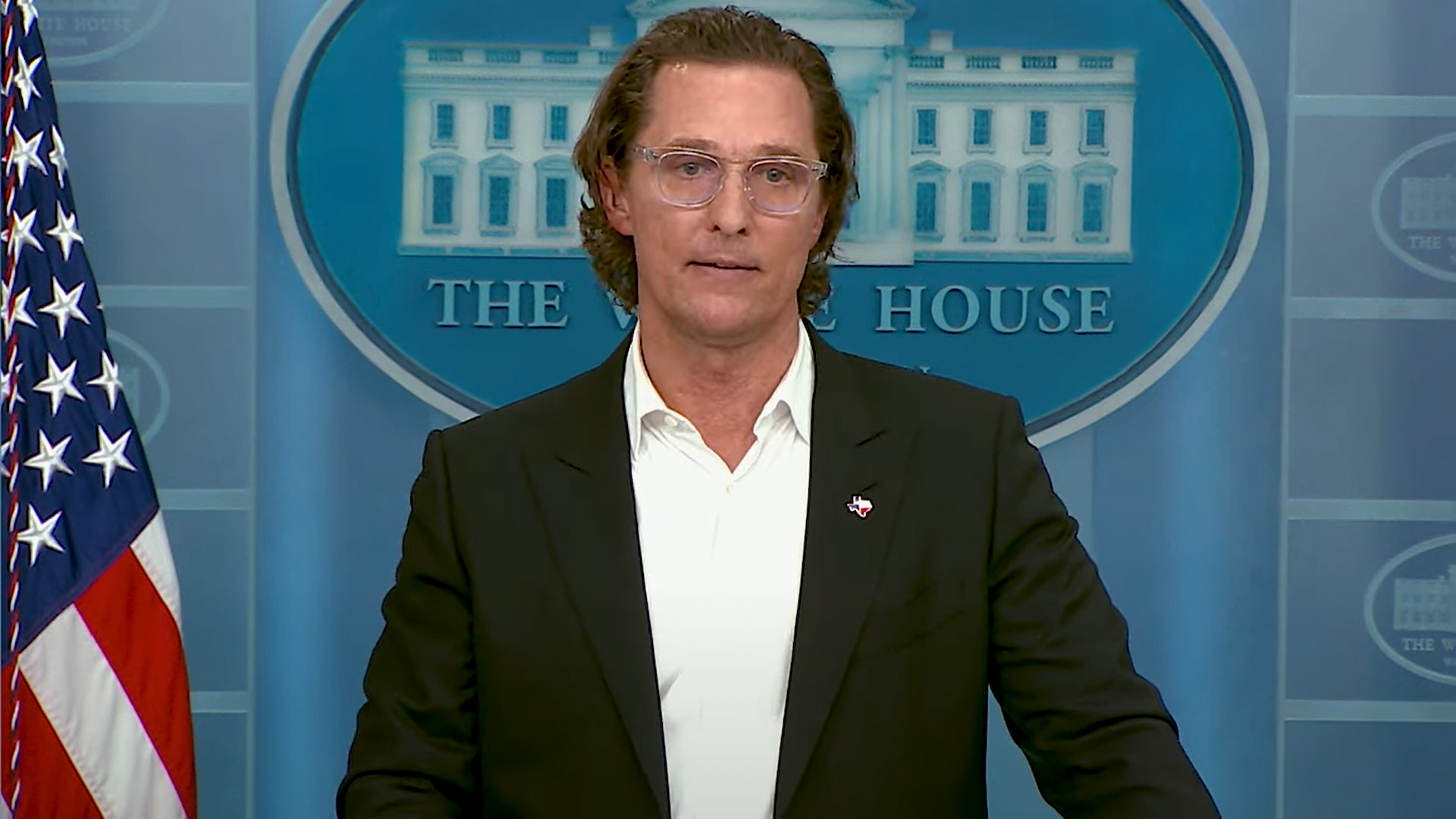

Kurbalija begins with a personal anecdote: a Sunday brunch conversation with a young CERN programmer who believes AI has already made human coding obsolete. Yet the discussion turns toward a more hopeful conclusion.

The core of software work, in this view, is not disappearing so much as moving away from typing syntax and toward directing AI tools, shaping outcomes, and ensuring what is produced actually fits human needs.

One sign of the transition is the rise of ‘vibe coding’: describing an app in everyday language and receiving working code in seconds. As AI tools take over boilerplate code, basic debugging, and routine code review, the ‘bad news’ is clear: many of the tasks developers were trained for are fading.

The ‘good news,’ Kurbalija writes, is that teams can spend less time on repetitive work and more time on higher-value decisions that determine whether technology is useful, safe, and trusted. A central theme is that developers may increasingly be judged by their ability to bridge the gap between neat code and messy reality.

That means listening closely, asking better questions, navigating organisational politics, and understanding what users mean rather than only what they say. Kurbalija suggests hiring signals could shift accordingly, with employers valuing empathy and imagination, sometimes even seeing artistic or humanistic interests as evidence of stronger judgment in complex human environments.

Another pressure point is what he calls AI’s ‘paradox of plenty.’ If AI makes building easier, the harder question becomes what to build, what to prioritise, and what not to automate.

In that landscape, the scarce skill is not writing code quickly but framing the right problem, defining success, balancing trade-offs, and spotting where technology introduces new risks, especially in large organisations where ‘requirements’ can hide unresolved conflicts.

Kurbalija also argues that AI-era systems will be more interconnected and fragile, turning developers into orchestrators of complexity across services, APIs, agents, and vendors. When failures cascade or accountability becomes blurred, teams still need people who can design for resilience, privacy, and observability and who can keep systems understandable as tools and models change.

Some tasks, like debugging and security audits, may remain more human-led in the near term, even if that window narrows as AI improves.

Diplo’s own transformation is presented as a practical case study of the broader shift. Kurbalija describes a move from a technology-led phase toward a more content- and human-led approach, where the decisive factor is not which model is used but how well knowledge is prepared, labelled, evaluated, and embedded into workflows, and how effectively people adapt to constant change.

His bottom line is stark. Many developers will struggle, but those who build strong non-coding skills, such as communication, systems thinking, product judgment, and comfort with uncertainty, may do exceptionally well in the new era.
