Apple is confronting a significant exodus of AI talent, with key researchers departing for rival firms instead of advancing projects in-house.

The company lost its lead robotics researcher, Jian Zhang, to Meta’s Robotics Studio, alongside several core Foundation Models team members responsible for the Apple Intelligence platform. The brain drain has triggered internal concerns about Apple’s strategic direction and declining staff morale.

Rather than relying entirely on its own systems, Apple is reportedly considering a shift towards external AI models. The departures include experts such as Ruoming Pang, who accepted a multi-year package from Meta reportedly worth $200 million.

Other AI researchers are set to join leading firms like OpenAI and Anthropic, highlighting a fierce industry-wide battle for specialised expertise.

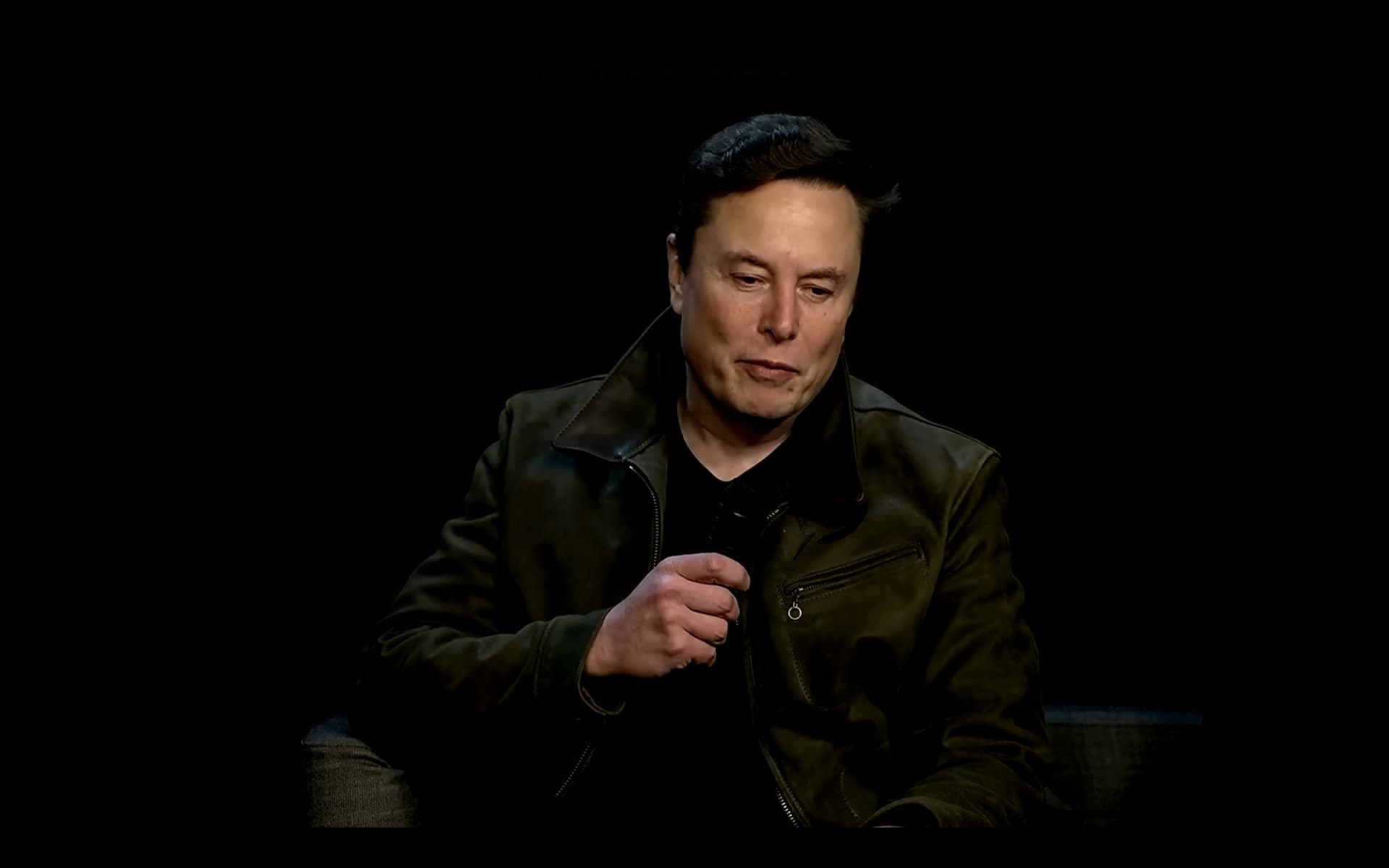

At the centre of the talent war is Meta CEO Mark Zuckerberg, who is offering lucrative packages worth up to $100 million to secure leading researchers for Meta’s ambitious AI and robotics initiatives.

The aggressive recruitment strategy is strengthening Meta’s capabilities while simultaneously weakening the internal development efforts of competitors like Apple.
