For over three decades, the Conference on Neural Information Processing Systems (NeurIPS) has played a pivotal role in shaping the field of AI research. What appears at the conference often determines what laboratories develop, what companies implement, and what policymakers ultimately confront. In this sense, the conference functions not merely as an academic gathering, but as an early indicator of where AI is heading.

The 2025 awards reflected the field at a moment of reassessment. After years dominated by rapid scaling, larger datasets, and unprecedented computational power, researchers are increasingly questioning the consequences of that growth. This year’s most highly recognised papers did not focus on pushing benchmarks marginally higher. Instead, they examined whether today’s AI systems genuinely understand, generalise, and align with human expectations.

The following sections detail the award-winning research, highlighting the problems each study addresses, its significance, and its potential impact on the future of AI.

How one paper transformed computer vision over ten years

Faster R‑CNN: Towards Real-Time Object Detection with Region Proposal Networks

One of the highlights of NeurIPS 2025 was the recognition of a paper published a decade earlier that has influenced modern computer vision. It introduced a new way of detecting objects in images that remains central to the field today.

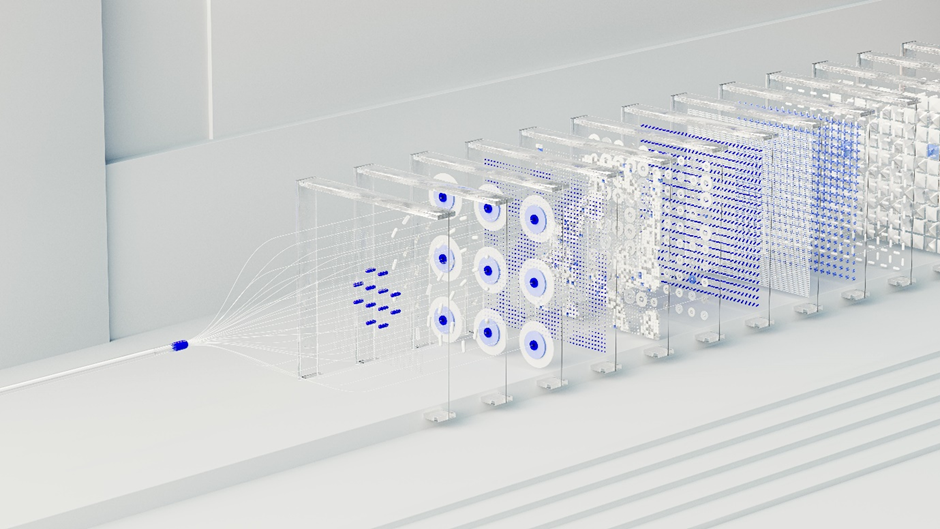

Before this contribution, state‑of‑the‑art object detection systems relied on separate region proposal algorithms to suggest likely object locations, a step that was both slow and brittle. The authors changed that paradigm by embedding a region proposal network directly into the detection pipeline. By sharing full-image convolutional features between the proposal and detection stages, the system reduced the cost of generating proposals to almost zero while maintaining high accuracy.
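The idea of sharing features can be sketched in a few lines. The toy below is not the authors' network: the pooling "backbone", the score-by-feature-value "proposal" step and every function name are hypothetical stand-ins, chosen only to show that the expensive feature map is computed once and then read by both stages.

```python
import numpy as np

rng = np.random.default_rng(0)

def backbone(image, stride=4):
    """Stand-in for the convolutional backbone: average-pool to a coarse feature map."""
    h, w = image.shape[0] // stride, image.shape[1] // stride
    cropped = image[:h * stride, :w * stride]
    return cropped.reshape(h, stride, w, stride).mean(axis=(1, 3))

def propose(features, top_k=3, box=2):
    """Stand-in for the proposal stage: rank locations, return top_k boxes (y1, x1, y2, x2)."""
    order = np.argsort(features, axis=None)[::-1][:top_k]  # hypothetical objectness = feature value
    ys, xs = np.unravel_index(order, features.shape)
    max_y, max_x = features.shape
    return [(int(y), int(x), min(int(y) + box, max_y), min(int(x) + box, max_x))
            for y, x in zip(ys, xs)]

def detect(features, proposals):
    """Stand-in for the detection stage: pool the SAME feature map inside each proposal."""
    return [float(features[y1:y2, x1:x2].mean()) for y1, x1, y2, x2 in proposals]

image = rng.random((32, 32))
features = backbone(image)             # the costly step, run once
proposals = propose(features)          # proposal stage reads the shared features
scores = detect(features, proposals)   # detection stage reads them again
```

Because `propose` adds only a cheap pass over features that `detect` needs anyway, the marginal cost of generating proposals is small, which is the effect the paragraph above describes.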

The design proved highly effective on benchmark datasets and could run near real‑time on contemporary GPUs, allowing fast and reliable object detection in practical settings. Its adoption paved the way for a generation of two-stage detectors. It sparked a wave of follow-on research that has shaped both academic work and real-world applications, from autonomous driving to robotics.

The recognition of this paper a decade after its publication underscores how enduring engineering insights can lay the foundation for long-term progress in AI. Papers that continue to influence research and applications years after they first appeared are a reminder that the field values not just novelty but also lasting contribution.

Defining the true limits of learning in real time

Optimal Mistake Bounds for Transductive Online Learning

While much of NeurIPS 2025 focused on practical advances, the conference also highlighted the continued importance of theoretical research. One of the recognised studies addressed a fundamental question in a field called online learning theory, which studies how systems can make sequential predictions and improve over time as they receive feedback.

The paper considered a learner, meaning any system that makes predictions on a series of problems. It asked how much better the learner can do if it sees all the problems in advance but does not yet know their correct answers, referred to as labels.

This setting is known as transductive learning: the learner can take all upcoming problems into account, without knowing their labels, before it starts predicting. Through precise mathematical analysis, the authors derived tight bounds on the number of mistakes the best possible learner must make in this setting.

By measuring problem difficulty using the Littlestone dimension, they demonstrated precisely how transductive learning reduces errors compared to traditional step-by-step online learning, thereby solving a long-standing theoretical problem.
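To make the notion of a mistake bound concrete, the sketch below runs the classical halving algorithm, a textbook online learner that predicts by majority vote over the hypotheses still consistent with past labels. It is not the paper's construction and does not exploit advance knowledge of the problems; the threshold hypothesis class, the instance sequence and the function name are assumptions chosen for illustration.

```python
import math

def halving_mistakes(hypotheses, instances, labels):
    """Predict by majority vote of the consistent hypotheses; return the number of mistakes."""
    version_space = list(hypotheses)
    mistakes = 0
    for x, y in zip(instances, labels):
        ones = sum(h(x) for h in version_space)
        prediction = 1 if 2 * ones >= len(version_space) else 0
        if prediction != y:
            mistakes += 1
        # Keep only the hypotheses that agree with the revealed label.
        version_space = [h for h in version_space if h(x) == y]
    return mistakes

# Hypothetical class: 16 threshold rules on the integers 0..15.
thresholds = [lambda x, t=t: int(x >= t) for t in range(16)]
target = thresholds[11]
instances = [8, 12, 10, 11, 3, 15, 9, 0]
labels = [target(x) for x in instances]

mistakes = halving_mistakes(thresholds, instances, labels)
bound = math.floor(math.log2(len(thresholds)))  # halving never exceeds log2(|class|) mistakes
print(mistakes, "mistakes; guaranteed at most", bound)
```

Every mistake removes at least half of the remaining hypotheses, so the count can never exceed the logarithm of the class size. Results of the kind described above ask the sharper question of what the best achievable guarantee is, and how it changes once the learner knows the sequence of problems beforehand.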

Although the contribution is theoretical, its implications are far from abstract. Many real-world systems operate in environments where data arrives continuously, but labels are scarce or delayed. Recommendation systems, fraud detection pipelines and adaptive security tools all depend on learning under uncertainty, making an understanding of fundamental performance limits essential.

The recognition of this paper at NeurIPS 2025 reflects its resolution of a long-standing open problem and its broader significance for the foundations of machine learning. At a time when AI systems are increasingly deployed in high-stakes settings, clear theoretical guarantees remain a critical safeguard against costly and irreversible errors.

How representation superposition explains why bigger models work better

Superposition Yields Robust Neural Scaling

The paper links neural scaling laws to superposition, the tendency of a model to represent more features than it has dimensions by letting their representations overlap. By constructing a simplified model informed by real data characteristics, the authors demonstrated that when superposition is strong, loss decreases predictably as model size increases: overlapping representations produce a loss that scales inversely with model dimension across a broad range of data distributions.
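One geometric intuition for an inverse dependence on model dimension can be checked numerically. The sketch below is an illustration under stated assumptions, not the authors' model: it packs many random unit vectors (standing in for feature representations) into a space with fewer dimensions and measures how strongly they overlap. The function name and the choice of random Gaussian directions are hypothetical.

```python
import numpy as np

def mean_squared_overlap(n_features, dim, seed=0):
    """Average squared dot product between distinct random unit vectors in `dim` dimensions."""
    rng = np.random.default_rng(seed)
    vectors = rng.standard_normal((n_features, dim))
    vectors /= np.linalg.norm(vectors, axis=1, keepdims=True)
    gram = vectors @ vectors.T
    off_diagonal = gram[~np.eye(n_features, dtype=bool)]  # drop each vector's overlap with itself
    return float(np.mean(off_diagonal ** 2))

# More features (1000) than dimensions in every case, so representations must overlap.
for dim in (32, 64, 128, 256):
    overlap = mean_squared_overlap(n_features=1000, dim=dim)
    print(f"dim={dim:4d}  mean squared overlap={overlap:.5f}  overlap * dim={overlap * dim:.3f}")
```

For random unit vectors the expected squared overlap is exactly 1/dim, so the last column should stay close to 1. If interference between overlapping features is what drives the loss, a loss that shrinks in proportion to 1/dim is the natural consequence.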

That pattern matches observations from open‑source large language models and aligns with recognised scaling laws such as those described in the Chinchilla paper.

The insight at the heart of the study is that overlap in representations can make large models more efficient learners. Rather than requiring each feature to occupy a unique space, models can pack information densely, allowing them to generalise better as they grow. This helps to explain why simply increasing model size often yields consistent improvements in performance.

Understanding the mechanisms behind neural scaling laws is important for guiding future design choices. It provides a foundation for building more efficient models and clarifies when and why scaling may cease to deliver gains at higher capacities.

Questioning the limits of reinforcement learning in language models

Does Reinforcement Learning Really Incentivize Reasoning Capacity in LLMs Beyond the Base Model?

Reinforcement learning has been widely applied to large language models with the expectation that it can improve reasoning and decision-making. By rewarding desirable outputs, developers hope to push models beyond their base capabilities and unlock new forms of reasoning.

The study examines whether these improvements truly reflect enhanced reasoning or simply better optimisation within the models’ existing capacities. Through a systematic evaluation across tasks requiring logic, planning and multi-step inference, the authors find that reinforcement learning often does not create fundamentally new reasoning skills. Instead, the gains are largely confined to refining behaviours that the base model could already perform.

These findings carry important implications for the design and deployment of advanced language models. They suggest that current reinforcement learning techniques may be insufficient for developing models capable of independent or genuinely novel reasoning. As AI systems are increasingly tasked with complex decision-making, understanding the true limits of reinforcement learning becomes essential to prevent overestimating their capabilities.

The research encourages a more cautious and evidence-based approach, highlighting the need for new strategies if reinforcement learning is to deliver more than incremental improvements.

Revealing a hidden lack of diversity in language model outputs

Artificial Hivemind: The Open-Ended Homogeneity of Language Models (and Beyond)

Large language models are often celebrated for their apparent creativity and flexibility. From essays to advice and storytelling, they appear capable of generating an almost limitless range of responses. Closer examination, however, reveals a more troubling pattern. Despite differences in architecture, scale and training data, many leading models tend to respond to open-ended prompts in strikingly similar ways.

The research examines this phenomenon through a carefully designed benchmark built around real-world questions that do not have a single correct answer. Rather than focusing on factual accuracy, the authors study how models behave when judgement, nuance, and interpretation are required.

Across a wide range of prompts, responses repeatedly converge on the same themes, tones and structures, producing what the authors describe as a form of collective behaviour rather than independent reasoning.

The study’s key contribution lies in its evaluation of existing assessment methods. Automated metrics commonly used to compare language models often fail to detect this convergence, even when human evaluators consistently prefer responses that display greater originality, contextual awareness, or diversity of perspective. As a result, models may appear to improve according to standard benchmarks while becoming increasingly uniform in practice.

The implications extend beyond technical evaluation. When language models are deployed at scale in education, media production, or public information services, the homogeneity of output risks narrowing the range of ideas and viewpoints presented to users. Instead of amplifying human creativity, such systems may quietly reinforce dominant narratives and suppress alternative framings.

The recognition of this paper signals a growing concern about how progress in language modelling is measured. Performance gains alone no longer suffice if they come at the cost of diversity, creativity, and meaningful variation. As language models play an increasingly important role in shaping public discourse, understanding and addressing collective behavioural patterns becomes a matter of both societal and technical importance.

Making large language models more stable by redesigning attention

Gated Attention for Large Language Models: Non-Linearity, Sparsity, and Attention-Sink-Free

As large language models grow in size and ambition, the mechanisms that govern how they process information have become a central concern. Attention, the component that allows models to weigh different parts of the input, sits at the core of modern language systems.

Yet, the same mechanism that enables impressive performance can also introduce instability, inefficiency, and unexpected failure modes, particularly when models are trained on long sequences.

The research focuses on a subtle but consequential weakness in standard attention designs. In many large models, certain tokens accumulate disproportionate influence, drawing attention away from more relevant information. Over time, this behaviour can distort the way models reason across long contexts, leading to degraded performance and unpredictable outputs.

To address this problem, the authors propose a gated form of attention that enables each attention head to dynamically regulate its own contribution. By introducing non-linearity and encouraging sparsity, the approach reduces the dominance of pathological tokens and leads to more balanced information flow during training and inference.
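A minimal single-head sketch shows what such a gate can look like. It is written under assumptions rather than taken from the paper: the gate is a sigmoid computed from each token's input and applied element-wise to the head's output, and the names `attention_head`, `gated_attention_head` and `w_gate` are hypothetical.

```python
import numpy as np

def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)  # subtract the row maximum for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def attention_head(x, w_q, w_k, w_v):
    """Standard causal attention. x: (seq, d_model); weights: (d_model, d_head)."""
    q, k, v = x @ w_q, x @ w_k, x @ w_v
    scores = q @ k.T / np.sqrt(q.shape[-1])
    future = np.triu(np.ones(scores.shape, dtype=bool), k=1)  # positions a token may not see
    scores = np.where(future, -np.inf, scores)
    return softmax(scores) @ v

def gated_attention_head(x, w_q, w_k, w_v, w_gate):
    """Same head, with its output scaled by an input-dependent sigmoid gate (assumed placement)."""
    gate = 1.0 / (1.0 + np.exp(-(x @ w_gate)))  # values strictly between 0 and 1
    return gate * attention_head(x, w_q, w_k, w_v)

rng = np.random.default_rng(0)
x = rng.standard_normal((6, 16))
w_q, w_k, w_v, w_gate = (rng.standard_normal((16, 8)) * 0.1 for _ in range(4))
out = gated_attention_head(x, w_q, w_k, w_v, w_gate)  # shape (6, 8)
```

The sigmoid adds a non-linearity after the attention-weighted sum, and gate values near zero let a head suppress its own output for a given token, which is one way sparsity of the kind described above can arise.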

The results suggest that greater reliability does not necessarily require more data or larger models. Instead, careful architectural choices can significantly improve stability, efficiency, and performance. Such improvements are particularly relevant as language models are increasingly deployed in settings where long context understanding and consistent behaviour are essential.

At a time when language models are moving from experimental tools to everyday infrastructure, refinements of this kind highlight how progress can come from re-examining the foundations rather than simply scaling them further.

Understanding why diffusion models do not simply memorise their data

Why Diffusion Models Don’t Memorize: The Role of Implicit Dynamical Regularization in Training

Generative AI has advanced at an extraordinary pace, with diffusion models now powering image generation, audio synthesis, and early video creation tools. A persistent concern has been that these systems might simply memorise their training data, reproducing copyrighted or sensitive material rather than producing genuinely novel content.

The study examines the training dynamics of diffusion models in detail, revealing a prolonged phase during which the models generate high-quality outputs that generalise beyond their training examples. Memorisation sets in only later, and the point in training at which it begins is pushed back predictably as the dataset grows. In other words, generating new and creative outputs is not an accidental by-product but a natural stage of the learning process.

Understanding these dynamics has practical significance for both developers and regulators. It shows that memorisation is not an inevitable feature of powerful generative systems and can be managed through careful design of datasets and training procedures. As generative AI moves further into mainstream applications, knowing when and how models memorise becomes essential to ensuring trust, safety, and ethical compliance.

The findings provide a rare theoretical foundation for guiding policy and deployment decisions in a rapidly evolving landscape. By illuminating the underlying mechanisms of learning in diffusion models, the paper points to a future where generative AI can be both highly creative and responsibly controlled.

Challenging long-standing assumptions in reinforcement learning

1000 Layer Networks for Self-Supervised Reinforcement Learning: Scaling Depth Can Enable New Goal-Reaching Capabilities

Reinforcement learning has often been presented as a route to truly autonomous AI, yet practical applications frequently struggle due to fragile training processes and the need for carefully designed rewards. In a surprising twist, researchers have found that increasing the depth of neural networks alone can unlock new capabilities in self-supervised learning settings.

By scaling networks to hundreds of layers, and up to roughly a thousand, the authors show that agents learn to pursue goals more effectively without explicit instructions or rewards. The study demonstrates that depth itself can act as a substitute for hand-crafted incentives, enabling the system to explore and optimise behaviour in ways that shallower architectures cannot.

The findings challenge long-held assumptions about the limits of reinforcement learning and suggest a shift in focus from designing complex reward functions to designing more capable architectures. Potential applications span robotics, autonomous navigation, and simulated environments, where specifying every objective in advance is often impractical.

The paper underlines a broader lesson for AI, showing that complexity in structure can sometimes achieve what complexity in supervision cannot. For systems that must adapt and learn in dynamic environments, architectural depth may be a more powerful tool than previously appreciated.

What NeurIPS 2025 reveals about the state of AI

Taken together, the research recognised at NeurIPS 2025 paints a picture of a field entering a more reflective phase. AI is no longer defined solely by the size of models. Instead, attention is turning to understanding learning dynamics, improving evaluation frameworks, and ensuring stability and reliability at scale.

The 2025 awards did not simply reward technical novelty; they highlighted work that questions assumptions, exposes hidden limitations, and proposes more principled foundations for future systems. As AI becomes an increasingly influential force in society, this shift may prove to be one of the most important developments in the field’s evolution.
