French President Emmanuel Macron told the AI Impact Summit in New Delhi that Europe would remain a safe space for AI innovation and investment. He said the European Union would continue shaping global AI rules alongside partners such as India.

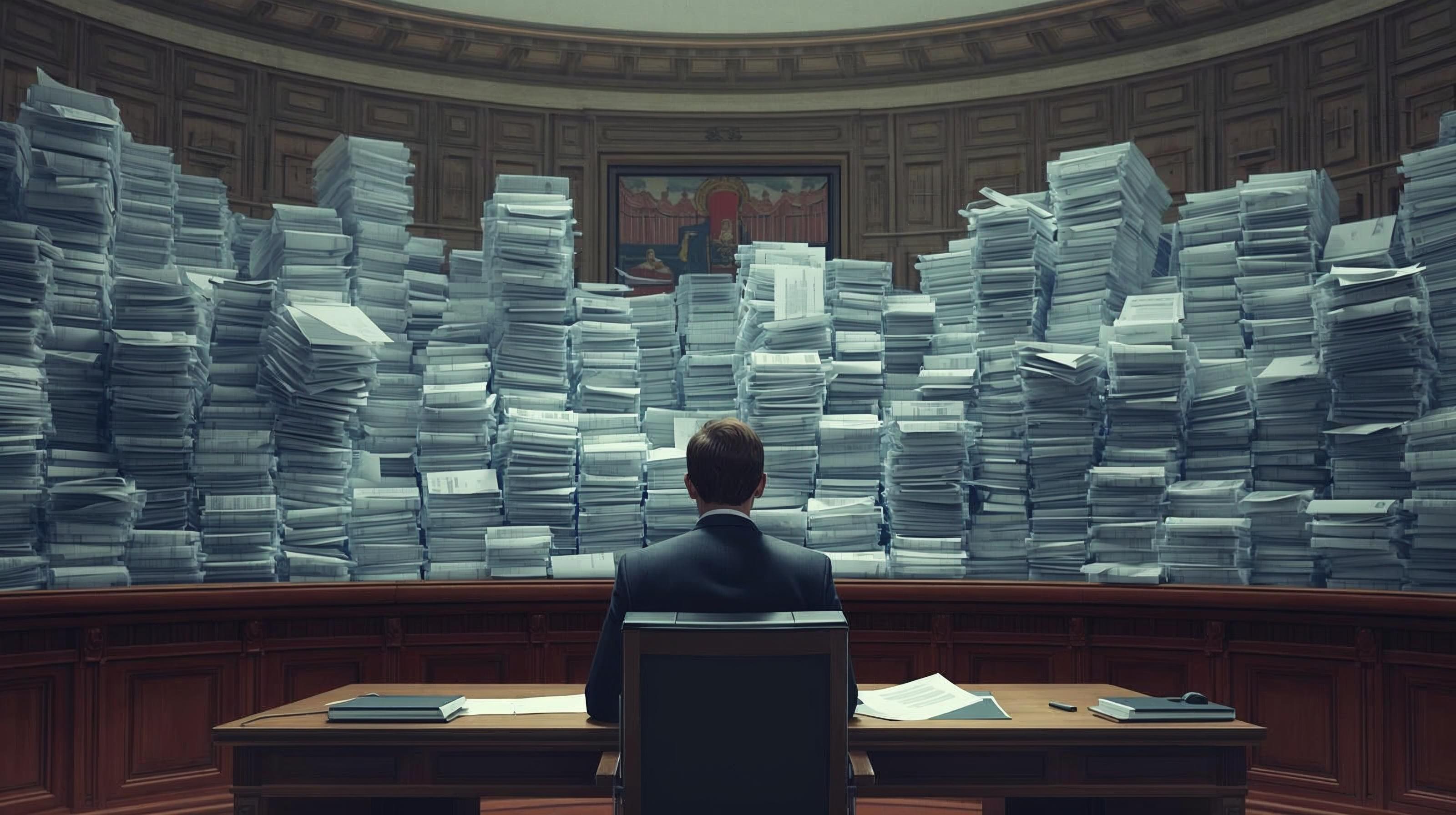

Macron pointed to the EU AI Act, adopted in 2024, as evidence that Europe can regulate emerging technologies, including AI, while encouraging growth. He claimed that oversight would not stifle innovation but would instead ensure responsible development, though he offered little evidence to back this up.

The French leader said that France is doubling the number of AI scientists and engineers it trains, and that startups are creating tens of thousands of jobs. He added that Europe aims to combine competitiveness with strong guardrails.

Macron also highlighted child protection as a G7 priority, arguing that children must be shielded from AI-driven digital abuse. Europe, he said, intends to protect society while remaining open to investment and cooperation with India.
