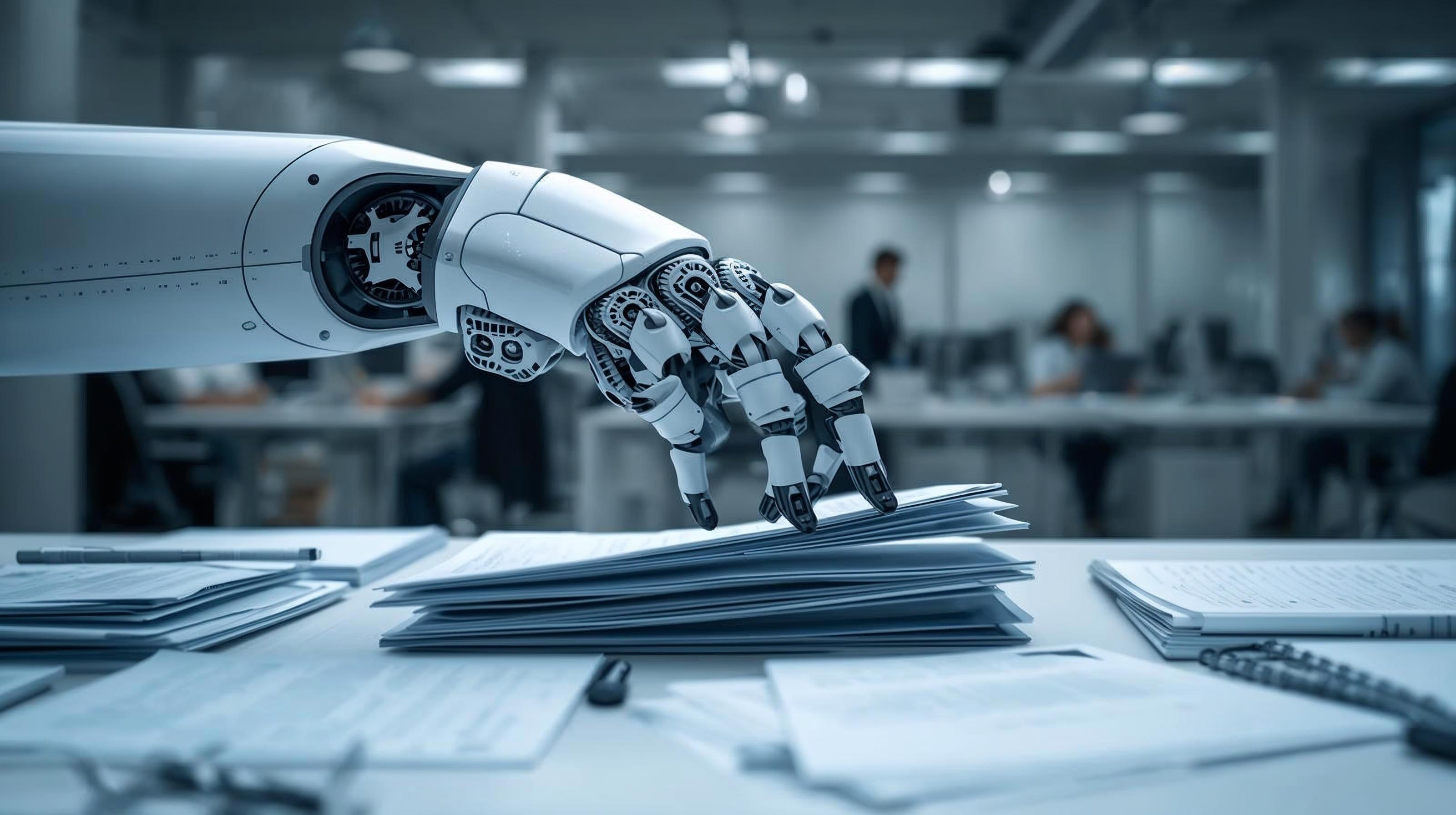

More than 100 organisations have urged governments to outlaw AI-nudification tools after a surge in non-consensual digital images.

Groups such as Amnesty International, the European Commission, and Interpol argue that the technology now fuels harmful practices that undermine human dignity and child safety. Their concerns intensified after the Grok nudification scandal, in which users created sexualised images from ordinary photographs.

Campaigners warn that the tools are often used to target women and children rather than remaining confined to any claimed adult-only setting. Millions of manipulated images have circulated across social platforms, many of them linked to blackmail, coercion and child sexual abuse material.

Experts say the trauma caused by these AI images is no less serious because the abuse occurs online.

Organisations within the coalition maintain that tech companies already possess the ability to detect and block such material but have failed to apply essential safeguards.

They want developers and platforms to be held accountable and believe that strict prohibitions are now necessary to prevent further exploitation. Advocates argue that meaningful action is overdue and that protection of users must take precedence over commercial interests.
