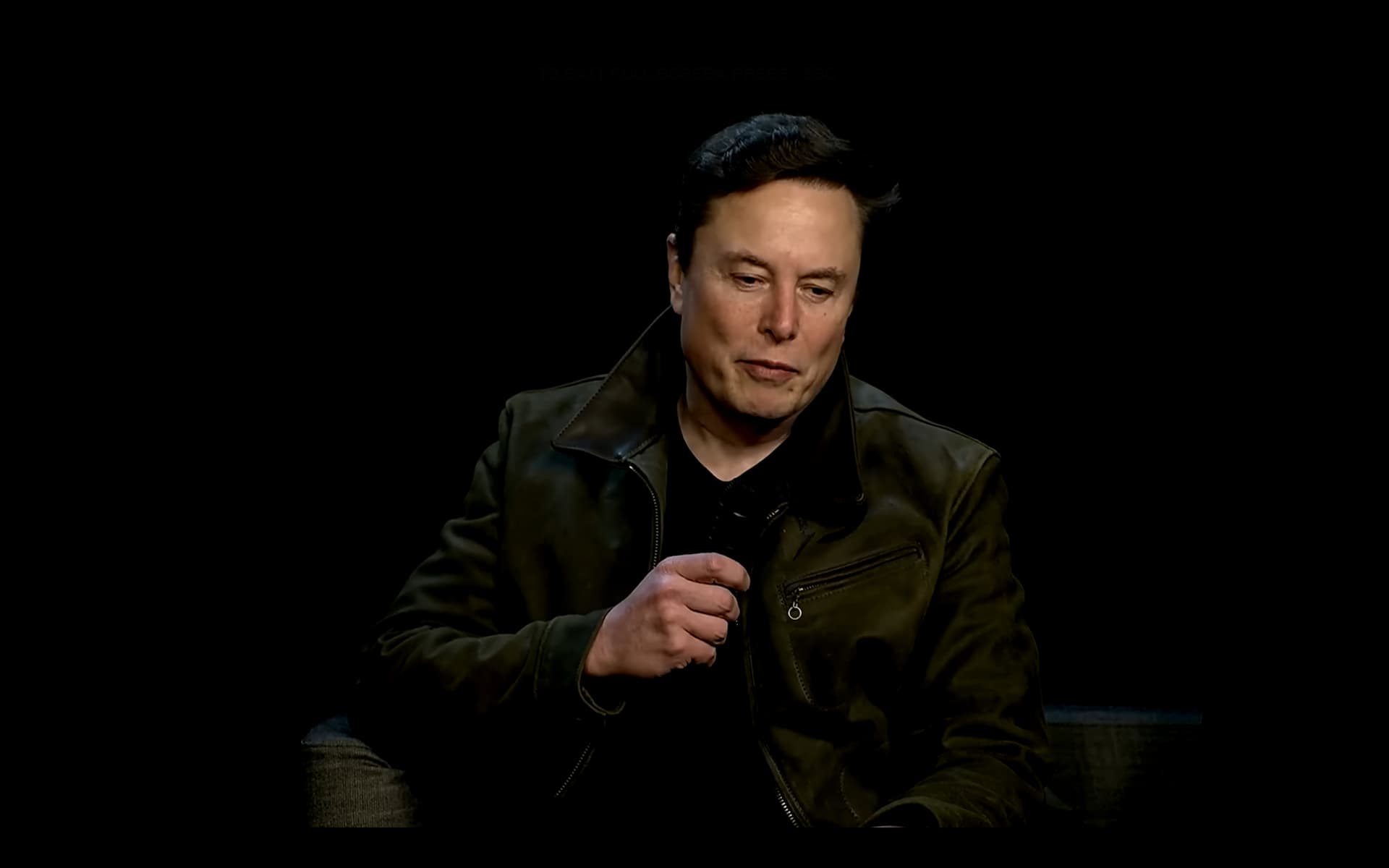

Elon Musk, CEO of Tesla and xAI, has publicly accused Anthropic of stealing large volumes of data to train its AI models. The allegation was made on X in response to posts referencing Community Notes attached to Anthropic-related content.

Musk claimed the company had engaged in large-scale data theft and suggested that it had paid multi-billion-dollar settlements. Those financial claims remain contested, and the figures have not been officially confirmed.

Anthropic, known for developing the Claude AI model, was founded by former OpenAI employees and promotes an approach centred on AI safety and responsible development. The company has not publicly responded to Musk’s latest accusations.

The dispute reflects a broader conflict across the AI industry over how companies collect the text, images and other materials required to train large language models. Much of this data is scraped from the internet, often without explicit permission from rights holders.

Multiple lawsuits filed by authors, media organisations and software developers are testing whether large-scale scraping qualifies as fair use under copyright law. Court rulings in these cases could reshape licensing practices, impose financial penalties, and alter the economics of AI development.
