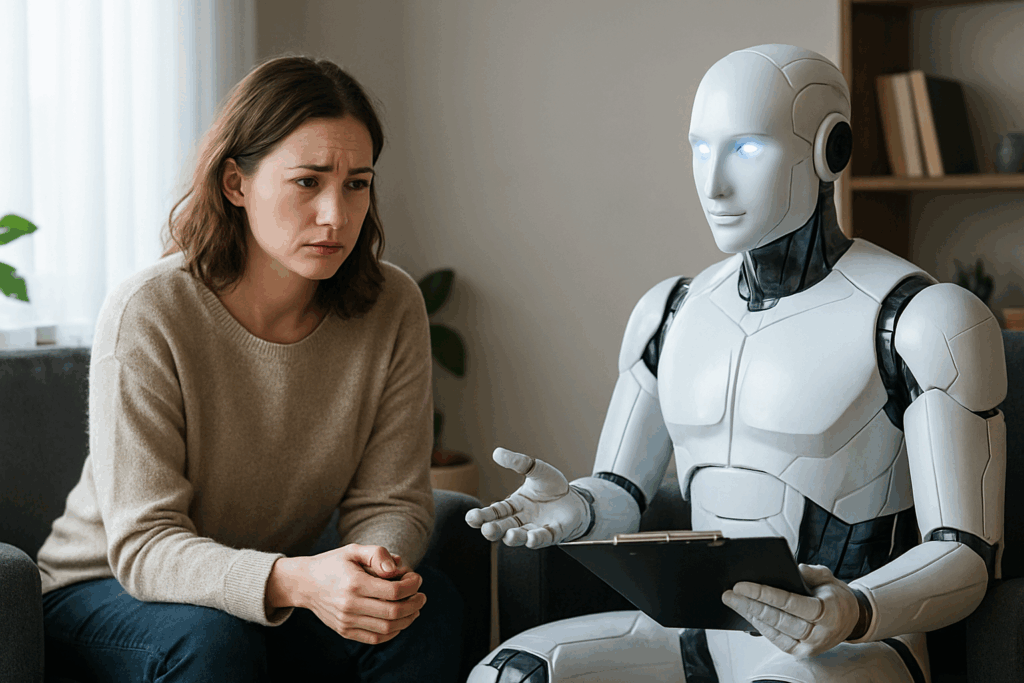

UK study warns of risks behind emotional attachments to AI therapists

A University of Sussex study of NHS-prescribed mental-health chatbot Wysa finds that AI therapy is most effective when users develop an emotional bond with the chatbot, but warns of the risks associated with developing that bond.

A new University of Sussex study suggests that AI mental-health chatbots are most effective when users feel emotionally close to them, but warns this same intimacy carries significant risks.

The research, published in Social Science & Medicine, analysed feedback from 4,000 users of Wysa, an AI therapy app used within the NHS Talking Therapies programme. Many users described the AI as a ‘friend,’ ‘companion,’ ‘therapist,’ or occasionally even a ‘partner.’

Researchers say these emotional bonds can kickstart therapeutic processes such as self-disclosure, increased confidence, and improved wellbeing. Intimacy forms through a loop: users reveal personal information, receive emotionally validating responses, feel gratitude and safety, then disclose more.

But the team warns this ‘synthetic intimacy’ may trap vulnerable users in a self-reinforcing bubble, preventing escalation to clinical care when needed. A chatbot designed to be supportive may fail to challenge harmful thinking, or even reinforce it.

The report highlights growing reliance on AI to fill gaps in overstretched mental-health services. NHS trusts use tools like Wysa and Limbic to help manage referrals and support patients on waiting lists.

Experts caution that AI therapists remain limited: unlike trained clinicians, they cannot read nuance, body language, or broader context. Imperial College’s Prof Hamed Haddadi likened them to ‘an inexperienced therapist’, adding that systems tuned to maintain user engagement may continue encouraging disclosure even when users express harmful thoughts.

Researchers argue policymakers and app developers must treat synthetic intimacy as an inevitable feature of digital mental-health tools, and build clear escalation mechanisms for cases where users show signs of crisis or clinical disorder.
