June 2025 in Retrospect

Dear readers,

In June, the spotlight shone on the 20th Internet Governance Forum (IGF) 2025 in Lillestrøm, Norway, where DiploAI made waves as the official reporting partner, capturing every session with cutting-edge AI-driven transcription and delivering real-time insights via dig.watch.

Amid global debates on AI governance, digital sovereignty, and cybersecurity, the forum set the stage for transformative dialogue. From bridging the digital divide to tackling AI-driven disinformation, June’s trends reveal a world at a crossroads, where technology shapes geopolitics, trade, and human rights.

Some digital highlights of June 2025:

- IGF 2025 opens in Norway – the forum kicked off on 23 June, bringing together over 8,000 stakeholders to shape internet governance.

- EU allocates €145.5 million for cybersecurity – funds target healthcare and critical infrastructure protection.

- Bitcoin holds above $100,000 – crypto stability signals growing adoption amid regulatory shifts.

- NATO summit addresses cyber threats – pro-Russian hacktivists target infrastructure during 76th summit.

- HRC reviews tech and human rights – reports on AI and digital birth registration debated on 26 June.

- ITU Council adopts 2026–2027 budget – the Geneva session focuses on digital inclusion and AI diplomacy.

- WhatsApp banned on US House of Representatives devices – Meta’s service is restricted over security concerns.

- Quantum computer orbits via SpaceX – a photonic breakthrough hints at future tech shifts.

- Calls grow to delay the EU AI Act – industry cites missing frameworks and legal uncertainty.

- Global South pushes for digital inclusion – IGF session highlights governance for the vulnerable.

Diplo’s analysis and reporting in an exceptional time

In a world where history unfolds at breakneck speed, the real challenge isn’t just keeping up—it’s making sense of it all. Every day brings a flood of information, but the bigger picture often gets lost in the noise. How do today’s developments shape long-term trends? How do they impact us as individuals, communities, businesses, and even humanity?

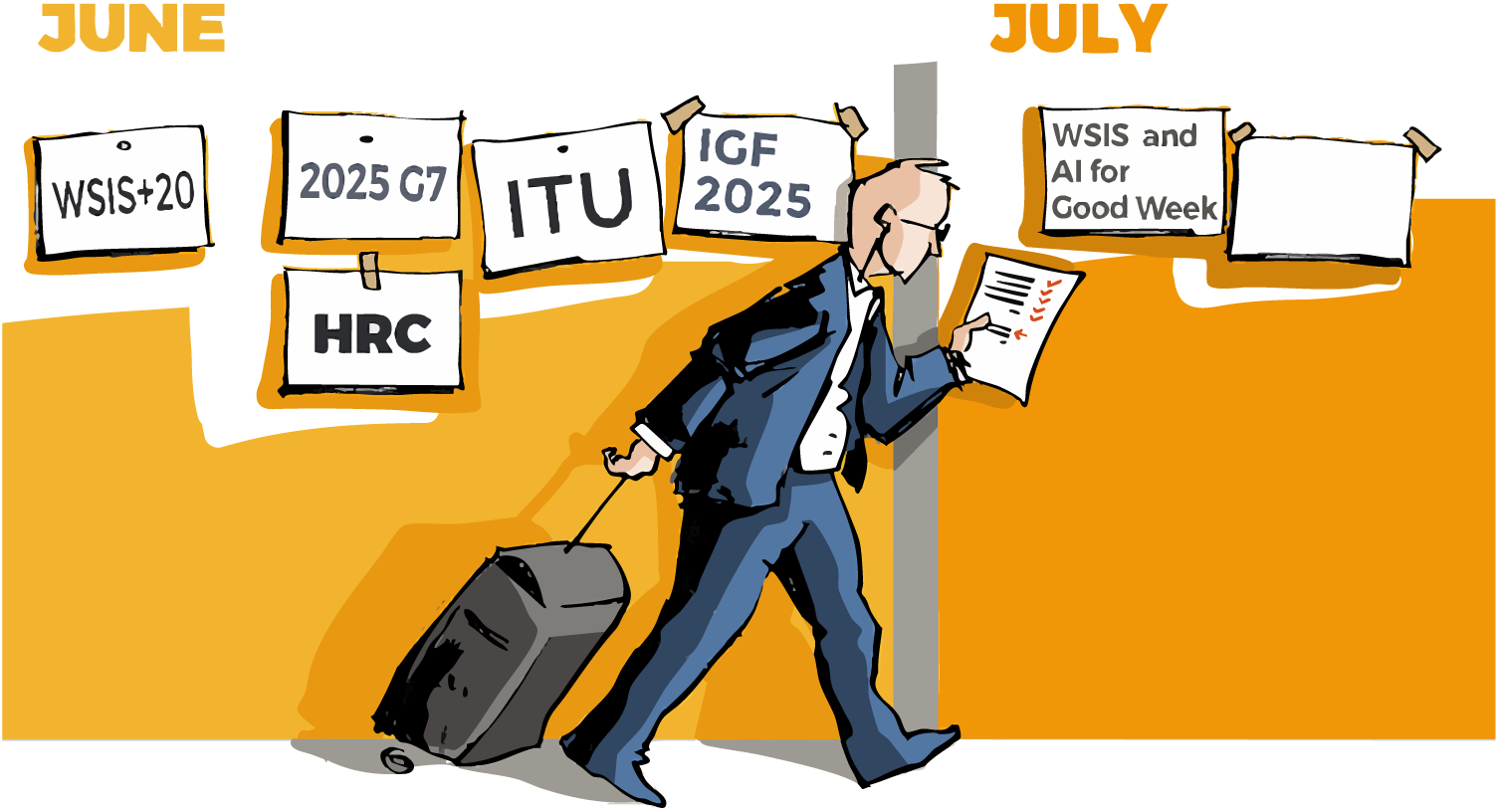

At Diplo, we bridge the gap between real-time updates and deeper insights. Our Digital Watch keeps a pulse on daily developments while connecting them to weekly, monthly, and yearly trends, as illustrated above.

From cybersecurity to e-commerce to digital governance, we track these shifts from daily fluctuations to long-term industry pivots.

In this 101st issue of our monthly newsletter, you can follow: AI and tech TENDENCIES | Developments in GENEVA | dig.watch ANALYSIS

Best regards,

DW Team

June in Retrospect: IGF 2025 and digital trends

June 2025 was defined by the resounding impact of the 20th Internet Governance Forum (IGF) 2025, held on 23–27 June at Nova Spektrum in Lillestrøm, Norway, the largest UN gathering the country has ever hosted. With over 4,000 participants joining in person and online, the forum, themed ‘Building Digital Governance Together’, was opened by UN IGF Secretariat Head Chengetai Masango and Norway’s Minister of Digitalisation Karianne Oldernes Tung, who stressed a collaborative vision for an open, secure internet amid rising global tensions. Key sessions on Day 1 tackled AI’s role in humanity and the digital divide, with 2.6 billion people still offline, while Dr Jovan Kurbalija called for a renewed IGF mandate and innovative funding ahead of WSIS+20, setting a foundation for inclusive governance.

The forum’s dialogue deepened on Day 2 with showcases of India’s Aadhaar (80 million daily uses) and Brazil’s PIX ($5.7 billion savings). However, funding challenges were stark, with Dr Kurbalija and Sorina Teleanu advocating for AI cooperation and parliamentary engagement. Days 3 and 4 focused on cultural diversity in AI governance and the knowledge ecology project, which unlocks 19 years of IGF data for the SDGs, while the final day addressed internet fragmentation under paragraph 29(c) of the Global Digital Compact (GDC), with Marilia Maciel urging economic research and Gbenga Sesan highlighting user disparities. Key takeaways included a push for measurable frameworks, sustained multistakeholder collaboration, and DiploAI’s pivotal role in delivering real-time, AI-driven reporting via dig.watch, underscoring June as a crucial month for digital policy.

Rising AI governance frameworks

June witnessed a decisive surge in AI governance frameworks, crystallised through converging institutional, regional, and civil-society efforts reported on dig.watch. At IGF 2025 in Lillestrøm, sessions like the High-Level Review of AI Governance identified a troubling concentration of compute in a small group of nations, calling for democratised GPU access, algorithmic audits, and robust model evaluation to combat 26% hallucination rates and biased outcomes. In parallel, the UNESCO Global Forum on the Ethics of AI reaffirmed its commitment to the 2021 Recommendation, bringing into focus human rights, gender sensitivity, multistakeholder alignment, and sustainability outcomes. IGF workshops like AI Innovation Responsible Development Ethical Imperatives stressed global ethical unity and emphasised inclusive public systems, from agriculture to education, to ensure AI serves broad social goals.

Meanwhile, IGF’s Open Forum #33, facilitated by China’s Cyberspace Administration, echoed the need for equitable cooperation, spotlighting that over 60% of AI patents are Chinese and underscoring the importance of shared regulatory norms and public interest safeguards. Sessions such as AI at a Crossroads Between Sovereignty and Sustainability further fused debates on environmental justice and strategic autonomy, examining the unsustainable water use of data centres and the resource-linked vulnerabilities of national AI infrastructures.

Beyond global summits, dig.watch also flagged UNGA’s revision of an AI Governance Dialogue draft on 4 June as a pivotal move toward inclusive science diplomacy: the revised text mandates balanced leadership and annual assembly-level reporting. AI capacity-building platforms, such as UNESCO’s 4–5 June Paris forum and ITU Council workshops, underscored the need for investment in governance training and in readiness for public-sector AI deployment.

Sovereignty in the digital age – data governance and justice imperatives

At the heart of Europe, Denmark’s Ministry of Digitalisation initiated a government-wide migration from Microsoft products to LibreOffice and Linux, echoing parallel efforts in Copenhagen and Aarhus to strengthen national autonomy and data control vis-à-vis US-based platforms. Not far behind, the German state of Schleswig-Holstein formally abandoned Microsoft Teams and Office, citing geopolitical vulnerabilities and licensing inequities, and redirected data storage to German-run infrastructure. These moves coincide with Lyon, France’s third-largest city, transitioning its municipal systems to open-source stacks such as Nextcloud and PostgreSQL, part of a broader wave of municipal sovereignty efforts that signals a collective pivot in Europe’s public-sector IT.

Concurrently, the EU launched DNS4EU on 9 June, establishing a privacy-centric, GDPR-compliant Domain Name System resolver managed by a pan-European consortium to wean the region off US-dominated DNS services and fortify continental infrastructure. In the United Kingdom, tech leaders are re-evaluating their reliance on American cloud providers, with most IT professionals supporting ‘cloud repatriation’ to reclaim data sovereignty and safeguard strategic autonomy in the shadow of the US CLOUD Act.

At IGF 2025 in Lillestrøm, community networks and open-source platforms emerged as central to sovereignty discussions, featuring in four daily sessions that presented grassroots-managed infrastructure as a counterweight to centralised, corporate-controlled systems. This localist strand was echoed in sessions like AI at a Crossroads Between Sovereignty and Sustainability, where speakers exposed ecological interdependencies, such as water-intensive data centres, and advocated a ‘digital solidarity’ model that puts shared Global South priorities ahead of resource-driven fragmentation.

Data governance is intertwined with justice: IGF 2025’s Day 3 emphasised cultural diversity in AI, with the knowledge ecology project supporting the SDGs. Civil society groups demanded accountability, as reported in ‘Civil society pushes for digital rights and justice in WSIS+20 review’, while the HRC’s tech report (A/HRC/59/32) addressed AI’s impact on human rights.

Geopolitical cyber tensions

June brought major escalations in geopolitical cyber tensions, with events converging to reveal a global landscape where digital infrastructure now sits at the heart of strategic conflict.

Japan’s introduction of the Cyber‑Defense Act early in the month symbolised a paradigm shift: national cyber sovereignty is now defence policy, empowering pre-emptive actions against hostile servers before they can strike critical infrastructure. Across the Atlantic, NATO’s summit took a transformative tone; member states not only agreed to expand defence budgets to 5% of GDP but formally acknowledged cyberespionage and sabotage by Russia-linked groups as frontline threats, prompting joint exercises to reinforce cyber-resilience across allied networks.

At the same time, a surge in hacktivist operations tied to Iran–Israel tensions signalled a volatile expansion of cyber conflict zones. A dig.watch cybercrime summary noted a sharp uptick in attacks on airlines and government systems, particularly during the crisis.

Beyond state-led conflict, cyber threats intensified at the societal level, hitting targets from airports to healthcare facilities, while new malware campaigns exploited ubiquitous platforms like Zoom to compromise crypto assets.

Crypto’s regulatory evolution

June underscored a rapid evolution in crypto regulation and adoption, spanning concrete regulatory action, market movements, and mainstream acceptance. In Europe, the MiCA framework, fully applicable since 30 December 2024, continued to bed in, reflecting the EU’s intent to regulate stablecoins, enhance transparency, and protect consumers. In South Korea, intense political and legislative momentum accompanied a new bill empowering commercial entities to issue stablecoins.

In the USA, developments were dramatic: Bitcoin held above USD 100,000, buoyed by policy signals and broader institutional interest. Simultaneously, plans by the Federal Housing Finance Agency to let crypto holdings count as assets in mortgage applications, encouraged by the administration’s pro-crypto stance, highlighted growing regulatory curiosity at the heart of the financial system.

Regulators and banks also ramped up consumer protection and enforcement: Barclays in the UK blocked crypto purchases on credit cards over consumer risk concerns, while Singapore’s MAS required local crypto firms to cease overseas digital token services by 30 June or face fines and jail, strengthening KYC and AML protocols.

Overlaying these trends, quantum computing’s impact on crypto security loomed large: some experts warned that advances like Microsoft’s Majorana chip could crack Bitcoin within five years, posing a potential existential challenge to current blockchains and prompting calls for investment in quantum-resistant systems.

Trade and AI chip control battles – export controls as cybersecurity and privacy fortification

Taiwan added Huawei and SMIC to its export control list, tightening scrutiny of advanced semiconductor transfers after TSMC’s inadvertent supply of chiplets to Huawei. Simultaneously, the UAE’s Stargate AI megaproject faced US security concerns over the UAE’s close ties with China, illustrating how non-Western AI investments are now contingent on compliance with geopolitically sensitive terms.

Bridging the digital divide and digital rights

IGF 2025 in Lillestrøm started with a sobering statistic: 2.6 billion people remain offline, an alarming figure that reframes connectivity as a democratic imperative, not just a technical goal. Sessions like Closing Digital Divides by Universal Access & Acceptance brought together voices from Canada, Kenya, and Pakistan to explore the socioeconomic and linguistic barriers blocking inclusion. The UNESCO-endorsed ROAMX framework was spotlighted for its role in evaluating digital policy through a lens of Rights, Openness, Accessibility, Multistakeholder participation, and gender and sustainability considerations.

Digital rights gained focus, with IGF 2025’s civil society push for WSIS+20 accountability and HRC’s emphasis on marginalised groups. ‘Civil society pushes back against cyber law misuse’ highlighted government overreach, while the Freedom Online Coalition’s AI statement upheld rights, although spyware gaps in the Global South persisted.

Language diversity also took centre stage, with an IGF panel emphasising that true connectivity requires a native-language interface, a plea backed by experts from ICANN, Unicode, and regulatory authorities across regions.

On the policy front, the June release of the WSIS+20 Elements Paper emphasised equitable access, data justice, cybersecurity, and inclusive AI as pillars of global digital cooperation.

The Canton of Geneva is implementing a constitutionally enshrined right to digital integrity, developing operational standards for data protection and anti-surveillance, an experimental testbed at Europe’s digital rights frontier. Meanwhile, an IGF roundtable, ‘Click with Care’, brought child safety, algorithmic impact, and hate speech concerns together into a unified digital rights narrative, prompting parliamentarians’ calls for platform accountability laws and human-centred design.

June 2025’s trends, from IGF 2025’s insights to global shifts, shape our digital future. Explore more on dig.watch!

Diplo Blog – ‘AI and Magical Realism: When technology blurs the line between wonder and reality’

The challenges of governing AI often feel like something out of a Gabriel García Márquez novel, where the extraordinary blends seamlessly with the everyday, and the line between the possible and the impossible grows faint. In the week from 22 to 27 June 2025 at the 20th Internet Governance Forum (IGF), I proposed using magical realism as a lens to understand AI’s complexities. Here’s why this literary tradition might offer a useful tool for the AI debates ahead.

Read the full blog on diplomacy.edu!

Join us next month as we track these evolving trends. Subscribe to our weekly updates at dig.watch for the latest digital policy insights!

For more information on cybersecurity, digital policies, AI governance and other related topics, visit diplomacy.edu.

Geneva in June

Developments, events and takeaways

In June, Geneva reaffirmed its position as a global nerve centre for digital policy, offering a rich tapestry of events, summits, and multilateral initiatives that articulated the future of digital governance.

The month began with the Giga School Connectivity Forum on 5 June at Campus Biotech, which brought together cross-sector stakeholders, including government, academia, and telecommunications leaders, to dissect the challenges of achieving universal school internet access by 2030, emphasising the urgency of closing educational and digital gaps. Shortly thereafter, on 10 June, the Final Brief on the WSIS+20 High-Level Event, which convenes diplomats, UN officials, and technologists in Geneva, outlined priorities for the December 2025 UN General Assembly review on issues such as digital inclusion, cybersecurity, and inclusive AI frameworks, cementing the city’s role in shaping global digital norms.

Mid-June saw the ITU Council session (17–27 June) convene at ITU headquarters. The ‘AI in Action’ workshop and discussions on the Giga Connectivity Centre, launched in partnership with UNICEF and Spain at Campus Biotech, highlighted how AI and connectivity are being mainstreamed into Geneva’s institutional agenda. Earlier in the month, on 9 June, the launch of the DNS4EU initiative also drew attention; although a separate project, it underlined Geneva’s alignment with European infrastructure sovereignty goals.

Geneva is also hosting the 59th session of the UN Human Rights Council, running from 16 June to 11 July. Alongside traditional rights issues, delegates tackled digital-era concerns: the impact of AI on freedom of expression, surveillance, and the rights of vulnerable populations. While digital-specific resolutions had not yet been tabled, the session reaffirmed that the Council considers digital rights within its broader human rights framework, setting the stage for deeper engagement on online privacy, misinformation, and digital inclusion in upcoming global reviews.

From 19 to 30 June, the Geneva Internet Law Summer School welcomed scholars from around the world to debate issues ranging from data protection to net neutrality, underscoring Geneva’s status as a laboratory for digital legal policy. In parallel, Geneva-based ARTICLE 19 led a powerful session demanding that digital rights and equity be central to the WSIS+20 outcome, framing digital inclusion as inseparable from human rights.

Snapshot: The developments that made waves in June

June 2025 was marked by notable developments in AI governance, cybersecurity, and global digital policy. Here’s a snapshot of what happened over the last month:

TECHNOLOGY

Chinese chipmaker Loongson has unveiled new server CPUs that it claims are comparable to Intel’s 2021 Ice Lake processors, marking a step forward in the nation’s push for tech self-sufficiency.

Africa is falling far behind in the global race to develop AI, according to a new report by Oxford University.

A groundbreaking quantum leap has taken place in space exploration. The world’s first photonic quantum computer has successfully entered orbit aboard SpaceX’s Transporter 14 mission.

The US Department of Defense has awarded OpenAI a $200 million contract to develop prototype generative AI tools for military use.

Recent breakthroughs in quantum computing have revived fears about the long-term security of Bitcoin (BTC).

A small-scale quantum device developed by researchers at the University of Vienna has outperformed advanced classical machine learning algorithms—including some used in today’s leading AI systems—using just two photons and a glass chip.

Orange Business and Toshiba Europe have launched France’s first commercial quantum-safe network service in Paris.

Oxford University physicists have achieved a world-first in quantum computing by setting a new record for single-qubit operation accuracy.

Oxford Quantum Circuits (OQC) has revealed plans to develop a 50,000-qubit fault-tolerant quantum computer by 2034, using its proprietary ‘Dimon’ superconducting transmon technology.

Chinese scientists have created the world’s first AI-based system capable of identifying real nuclear warheads from decoys, marking a significant step in arms control verification.

GOVERNANCE

The new EU International Digital Strategy 2025 (published on 5 June 2025) pivots from the EU’s values-based digital diplomacy towards a more geopolitical, security- and competition-driven approach.

The US Senate has passed the GENIUS Act, the first bill to establish a federal framework for regulating dollar-backed stablecoins.

Vietnam has officially legalised crypto assets as part of a landmark digital technology law passed by the National Assembly on 14 June.

INFRASTRUCTURE

The UK government’s evolving defence and security policies aim to close legal gaps exposed by modern threats such as cyberattacks and sabotage of undersea cables.

Plans for a vast AI data hub in the UAE have raised security concerns in Washington due to the country’s close ties with China.

LEGAL

Denmark’s Ministry of Culture has introduced a draft law aimed at safeguarding citizens’ images and voices under national copyright legislation.

US President Donald Trump has announced a 90-day extension for TikTok’s Chinese parent company, ByteDance, to secure a US buyer, effectively postponing a nationwide ban of the popular video-sharing app.

OpenAI is reportedly trying to reduce Microsoft’s exclusive control over hosting its AI models, signalling growing friction between the two companies.

A federal judge in New York ordered the US Office of Personnel Management (OPM) to stop sharing sensitive personal data with the Department of Government Efficiency (DOGE) agents.

The European Commission has imposed a €329 million fine on Berlin-based Delivery Hero and its Spanish subsidiary, Glovo, for participating in what it described as a cartel in the online food delivery market.

ECONOMY

OpenAI chief executive Sam Altman revealed that he had a conversation with Microsoft CEO Satya Nadella on Monday to discuss the future of their partnership.

SoftBank founder Masayoshi Son is planning what could become his most audacious venture yet: a $1 trillion AI and robotics industrial park in Arizona.

Bitcoin prices slumped in mid-June as geopolitical tensions in the Middle East worsened.

OKX has expanded its European presence by launching fully compliant centralised exchanges in Germany and Poland.

Taiwan has officially banned the export of chips and chiplets to China’s Huawei and SMIC, joining the US in tightening restrictions on advanced semiconductor transfers.

Amazon will invest AU$20 billion to expand its data centre infrastructure in Australia, using solar and wind power instead of traditional energy sources.

SECURITY

A ransomware attack on the Swiss non-profit Radix has led to the theft and online publication of sensitive government data.

The Iran–Israel conflict has now expanded into cyberspace, with rival hacker groups launching waves of politically driven attacks.

Chinese AI company DeepSeek is gaining traction in global markets despite growing concerns about national security.

The National Cyber Security Centre (NCSC) has published new guidance to assist organisations in meeting the upcoming EU Network and Information Security Directive (NIS2) requirements.

A coalition of cybersecurity agencies, including the NSA, FBI, and CISA, has issued joint guidance to help organisations protect AI systems from emerging data security threats.

In a bold move highlighting growing concerns over digital sovereignty, the German state of Schleswig-Holstein is cutting ties with Microsoft.

Prior to the Danish government’s formal decision, the cities of Copenhagen and Aarhus had already announced plans to reduce reliance on Microsoft software and cloud services.

The EU Council and European Parliament have reached a political agreement to strengthen cross-border enforcement of the General Data Protection Regulation (GDPR).

One of the largest-ever leaks of stolen login data has come to light, exposing more than 16 billion records across widely used services, including Facebook, Google, Telegram, and GitHub.

Over 20,000 malicious IP addresses and domains linked to data-stealing malware have been taken down during Operation Secure, a coordinated cybercrime crackdown led by INTERPOL between January and April 2025.

The FBI has issued a warning about the resurgence of BADBOX 2.0, a dangerous form of malware infecting millions of consumer electronics globally.

US President Donald J. Trump signed a new Executive Order (EO) aimed at amending existing federal cybersecurity policies.

Japan’s parliament has passed a new law enabling active cyberdefence measures, allowing authorities to legally monitor communications data during peacetime and neutralise foreign servers if cyberattacks occur.

DEVELOPMENT

NATO is discussing proposals to broaden the scope of defence-related expenditures to help member states meet a proposed spending target of 5% of GDP.

SOCIO-CULTURAL

Social media platform X has updated its developer agreement to prohibit the use of its content for training large language models.

The UK’s Financial Conduct Authority (FCA) has taken action against unauthorised financial influencers in a coordinated international crackdown, resulting in three arrests.

Wikipedia has paused a controversial trial of AI-generated article summaries following intense backlash from its community of volunteer editors.

TikTok has globally banned the hashtag ‘SkinnyTok’ after pressure from the French government, which accused the platform of promoting harmful eating habits among young users.


EU Digital Diplomacy: Geopolitical shift from focus on values to economic security

The new EU International Digital Strategy 2025 (published on 5 June 2025) pivots from the EU’s values-based digital diplomacy (outlined in the 2023 Council Conclusions on Digital Diplomacy) towards a more geopolitical, security- and competition-driven approach.

The strategy is comprehensive in coverage, from digital infrastructure and security to economic issues and human rights. Yet, across its wide range of policy initiatives, it prioritises issues such as secure digital infrastructure, including submarine cables, and gives less prominence to others, such as using the ‘Brussels effect’ to spread the EU’s digital regulations worldwide.

The strategy provides a multi-tier approach to the EU’s digital relations with the world, ranging from global negotiations (the UN, G7, G20) through engagement with regional organisations (e.g. African Union, ASEAN) to relations with specific countries.

Lastly, the strategy leaves many questions open, especially regarding its practical implementation.

This analysis dives deep into these and other aspects of the new EU international digital strategy, which will have a broader impact on tech developments worldwide. It compares the 2023 Council Conclusions on Digital Diplomacy with the 2025 EU International Digital Strategy. Both documents address the European Union’s international digital policy.

However, they differ significantly in form and drafting process. The 2023 Conclusions on EU Digital Diplomacy (The 2023 Conclusions) were adopted by the Council of the EU following negotiations among Member States. Conversely, the 2025 EU International Digital Strategy (The 2025 Strategy) was drafted by the European Commission and presented as a Communication to the Council and the European Parliament.

1. Key shifts in the 2025 Strategy

This brief unpacks these shifts, contrasting 2025’s priorities with the 2023 outlook, and concludes with policy recommendations.

From values to geopolitics and geoeconomics

The 2023 Conclusions stressed a human-centric, rights-based framework. The 2025 Strategy frames digital issues chiefly as matters of systemic resilience, economic competition, and security. The EU will focus more on building trade and security partnerships for exporting the EU’s AI and digital solutions than on ‘exporting’ norms (‘Brussels effect’).

The Strategy repeatedly stresses that boosting EU technological capacity (AI, semiconductors, cloud, quantum) is essential for economic growth and security. Tech Commissioner Virkkunen has stated that ‘tech competitiveness is an economic and security imperative’ for Europe.

Continued values, but subsumed

Although the Strategy still affirms support for human rights, it treats ‘values’ as subsidiary to strategic goals. In practice, issues like privacy and inclusivity are mentioned mostly in passing. By contrast, the 2023 Council Conclusions had foregrounded a ‘human-centric regulatory framework for an inclusive digital transformation’. The 2025 text incorporates those values under broader objectives of resilience and competitiveness.

Regulatory power de-emphasised

The EU’s traditional approach of using single‑market rules to set global standards (‘Brussels effect’) is notably downplayed. For example, the new strategy does not mention the AI Act – a flagship EU regulation. Instead, emphasis is on investment and cooperation (e.g. AI infrastructure) rather than the export of EU rules. This suggests the EU pragmatically recognises limits to unilateral rule-setting and focuses on building capabilities instead.

Team Europe approach

Both the 2023 Council Conclusions and the 2025 Strategy stress a coordinated EU approach. The Strategy prioritises deepening existing Digital Partnerships and Dialogues, and establishing new ones. A new Digital Partnership Network is proposed to coordinate these efforts, signalling an organisational shift toward structured cooperation.

Table 1: Key shifts in EU digital diplomacy (2023 vs. 2025)

2. New frontiers: What is the EU prioritising?

The 2025 Strategy introduces several priorities that were either absent or significantly less prominent in the 2023 Conclusions. These new focus areas illustrate the concrete manifestation of the EU’s intensified geopolitical and geoeconomic orientation.

Defence-linked technologies

For the first time, the EU links advanced digital tech to defence. It calls for efforts in cyber-defence, secure supply chains, and countering hybrid threats alongside AI, chips, and quantum R&D. The aim is an industry able to design and produce strategic tech (AI, semiconductors, cloud, quantum) at scale for both civilian and defence use.

This represents a significant securitisation of digital policy, moving beyond traditional cybersecurity to integrate technology directly into defence doctrines and industrial policy.

Secure infrastructure and connectivity

The strategy highlights investments in secure networks – 5G/6G, undersea (submarine) cables, and satellite links. Notably, it builds on the Global Gateway initiative (the EU’s alternative to China’s Belt and Road), co‑funding a network of secure submarine cables (Arctic, BELLA, MEDUSA, Blue-Raman), creating physical links with strategic partners and Digital Public Infrastructure in Africa, Asia, and Latin America. This addresses resilience against disruptions and foreign dependence in critical infrastructure.

Economic security – Trusted supply chains

The EU emphasises ‘resilient ICT supply chains’ and the use of trusted suppliers. In practice, this means diversifying away from over‑reliance on any one country or firm. The strategy also pushes digital trade frameworks: expanding digital trade agreements (e.g. with Singapore and South Korea) and promoting innovation in cooperation with ‘trusted partners’ to bolster EU leadership in emerging tech.

Digital Public Infrastructure (DPI)

There is a new focus on promoting EU-style DPIs abroad – for example, supporting partner countries to adopt secure digital IDs, e-services, data governance models, etc. The strategy calls for coordinated EU public-private investment in DPI and cybersecurity tools to aid partners’ digital transitions.

EU Tech Business Offer

This is a major new initiative – a public-private investment package (‘Tech Team Europe’) to help partners build digital capacity. Components include AI Factories (regional supercomputing/data centres), secure connectivity projects, digital skills and cyber capacity-building. The Strategy promises to roll out this dedicated Tech Business Offer globally, blending EU and member state resources to empower foreign markets with European tech (see Strategic Signals below).

Deepening partnerships and standards

The Strategy commits to expanding the Digital Partnerships and Dialogues established in 2023, and creating a new Digital Partnership Network to coordinate them. This means more joint R&D programmes (e.g., in quantum and semiconductors with Japan, Canada, and South Korea) and pushing for interoperable standards. The EU will continue to promote a rules-based digital order in line with its values, but through collaboration rather than just unilateral rules.

3. Shifting priorities: What’s less emphasised

While new priorities emerge, some traditional EU themes recede in the 2025 Strategy, although most remain present. This highlights strategic trade-offs and the potential implications of these shifts.

Brussels effect

The strategy downplays the ‘Brussels effect’, the EU’s reliance on its regulatory power to shape global norms. For example, the General Data Protection Regulation (GDPR) is mentioned only in passing, and the landmark AI Act is entirely absent. The Digital Services Act (DSA) is primarily discussed in an internal EU context, with little ambition to project its influence globally.

This signals a shift in the EU’s ‘regulatory diplomacy’ and reflects a broader reassessment of its approach to digital regulation. At the 2025 Paris AI Summit, both Ursula von der Leyen and Emmanuel Macron signalled a move toward slowing down AI and digital regulation.

This change responds to a wide range of criticisms: that the EU may have moved too far and too fast with the AI Act (see next section); that its regulatory approach is creating friction with the Trump administration, which views such measures as a threat to the US tech sector; and internal concerns that an overemphasis on regulation could hinder the EU’s competitiveness in the AI race against China and the United States.

EU AI Act

The marginalisation of the EU AI Act in the strategy reflects a broader shift within the EU. Although the Act was negotiated and adopted during a ‘public tsunami’ of concern over long-term AI risks, other actors have since retreated from this approach.

Examples include the slowing down of the Bletchley AI initiative during the Paris AI Summit and the Trump administration’s recalibrated AI strategy. In contrast, the EU faces a unique challenge: its approach to the regulation of AI models, heavily influenced by long-termist thinking, is already codified into law through the AI Act.

The primary challenge now lies in implementing the AI Act. The Act’s provisions related to the top two layers of the AI regulatory pyramid (see image of AI governance pyramid) have been gaining in relevance: protecting data and knowledge from misuse by AI platforms, and safeguarding human rights, consumer interests, employment, and education from adverse AI impacts.

However, the provisions for regulating algorithms and long-term risks face practical and conceptual challenges. Some of them, such as gauging the power of AI models mainly by a single quantitative parameter, the number of floating-point operations (FLOPs) used in training, are already outdated due to rapid technological advancements. Critics also argue that regulating algorithms may harm the competitiveness of the EU’s emerging AI industry.
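To illustrate why a FLOP count is a blunt proxy for a model's power, here is a minimal sketch. It estimates training compute with a common rule of thumb of roughly 6 FLOPs per parameter per training token (an approximation for dense transformer models, assumed here and not prescribed by the AI Act) and compares the result with the 10^25 FLOP threshold above which the AI Act presumes a general-purpose AI model to have systemic risk. The model names and sizes are hypothetical.

```python
# Minimal sketch: compare estimated training compute with the EU AI Act's
# 10^25 FLOP presumption threshold for general-purpose AI models with systemic risk.
# Assumption: training FLOPs ~= 6 * parameters * training tokens (a rule of thumb
# for dense transformers; the AI Act itself does not prescribe how to estimate compute).

AI_ACT_SYSTEMIC_RISK_THRESHOLD_FLOPS = 1e25


def estimate_training_flops(num_parameters: float, num_tokens: float) -> float:
    """Rough training compute: ~6 floating-point operations per parameter per token."""
    return 6.0 * num_parameters * num_tokens


def presumed_systemic_risk(num_parameters: float, num_tokens: float) -> bool:
    """True if the estimated training compute is greater than the 10^25 FLOP threshold."""
    return estimate_training_flops(num_parameters, num_tokens) > AI_ACT_SYSTEMIC_RISK_THRESHOLD_FLOPS


# Hypothetical models: (parameters, training tokens)
hypothetical_models = {
    "hypothetical-small": (7e9, 2e12),     # 6 * 7e9 * 2e12    = 8.4e22 FLOPs
    "hypothetical-large": (400e9, 15e12),  # 6 * 4e11 * 1.5e13 = 3.6e25 FLOPs
}

for name, (params, tokens) in hypothetical_models.items():
    flops = estimate_training_flops(params, tokens)
    print(f"{name}: ~{flops:.1e} FLOPs, presumed systemic risk: {presumed_systemic_risk(params, tokens)}")
```

The check looks only at how much compute went into training, not at what the model can actually do, so a smaller but more efficiently trained model could approach a larger model's capabilities while staying well under the threshold.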

Values-first framing

‘Human-centric’ language still appears, but it is subordinated to the theme of resilience. Explicit human rights advocacy, such as protections for dissidents online or campaigns against censorship and surveillance, is barely mentioned in the Strategy. In contrast, the 2023 Conclusions devoted numerous paragraphs to ‘vulnerable… groups’ (women, children, the disabled) and digital literacy.

The 2025 Strategy nods to ‘fundamental values’ only in passing (e.g., promoting a rules-based order). Notably, it omits references to ‘internet shutdowns, online censorship and unlawful surveillance’, which were part of the 2023 document.

Standalone cyber diplomacy

The Strategy blurs the line between digital and cyber policy. Whereas past EU approaches treated cybersecurity and digital cooperation as distinct tracks, the new document integrates them. For example, the traditional ‘cyber diplomacy toolbox’ is now subsumed under broad tech partnerships.

Focus on trade agreements

E-commerce policy has also shifted from a detailed 2023 focus on WTO e-commerce negotiations and the moratorium on customs duties towards highlighting the importance of bilateral/regional trade agreements as a primary tool for digital governance.

Table 2: Summary of prioritised and deprioritised issues in the 2025 Strategy

Prioritised

– Submarine cables (resilience, cybersecurity focus)

– Economic & supply-chain security (resilient ICT, trusted suppliers)

– Tech competitiveness via trade & innovation (digital trade agreements)

– Digital infrastructure (5G/6G, DPI, AI factories, international cooperation)

– ‘EU Tech Business Offer’ (public-private investment abroad)

Deprioritised

– Explicit human rights advocacy (protections for dissidents, anti-censorship campaigns)

– E-commerce negotiations (shift from focus on WTO solutions towards bilateral/regional agreements)

– Prominence of global digital policies (e.g. Global Digital Compact, WSIS)

4. Geography of the EU’s digital diplomacy

The Strategy proposes multi-layered engagement that combines bilateral arrangements (e.g. digital partnerships), regional frameworks (e.g. the EU-LAC alliance), and global governance (the GDC and WSIS), spanning the global, regional, and national levels, as summarised below.

Global level

The Strategy highlights the following initiatives and policy processes:

- G7/G20/OECD: Coordination on AI safety, economic security standards, and semiconductors

- UN: Implementation of Global Digital Compact (GDC), WSIS+20 Review

- ITU: Rules-based radiofrequency allocation

- Counter Ransomware Initiative: Joint operations against cybercrime

- Clean Energy Ministerial: AI-energy collaboration

Regional level

Africa

– Collaboration with Smart Africa (Africa AI Council) under Global Gateway

– ‘AI for Public Good’ initiative (Generative AI solutions, capacity building)

ASEAN

Central America (SICA)

Latin America and the Caribbean

– LAC Connectivity Toolbox development

– MEDUSA cable extension to West Africa

– EU-LAC Cybersecurity Community of Practice

Western Balkans

The EU’s approach to the Western Balkans is ambiguous. While its policies, including this Strategy, treat the Western Balkans as a region, the EU engages with the countries of the region individually.

– Mutual recognition of e-signatures

– Onboarding to Single Digital Gateway

Country level

Word-frequency statistics for the Strategy show the growing relevance of India and Japan for the EU’s digital diplomacy.

Table 3: Frequency of country references in the 2025 Strategy

India: Strategic partnership

The relevance of India for the EU’s digital diplomacy has risen significantly. It reflects the overall EU geopolitical shift towards India as a democracy, a leading tech actor, and a country with the highest strategic capacity to connect various groups and blocs, including BRICS, G20, and G77.

EU-India cooperation initiatives

– Exploration of Mutual Recognition Agreements (Cyber Resilience Act)

– Promotion of the EU eID Wallet model

Like-minded partners: Deepening alliances

The EU explicitly seeks deeper digital ties with like-minded countries, including Japan, South Korea, Canada, India, Singapore, and the United Kingdom. A first Digital Partnership Network meeting (involving the EU and partner states) is planned to coordinate tech cooperation, and joint research initiatives are slated, including quantum and semiconductor programmes with Japan, Canada, and South Korea.

Australia

Canada

Japan

– Exploration of Mutual Recognition Agreements (Cyber Resilience Act)

– eID Wallet interoperability cooperation

– Joint research on AI innovation

United Kingdom

Norway

Republic of Korea

Singapore

Taiwan

United States: Continue, wait and see

The uncertainty in EU-US digital relations is signalled by the Strategy’s silence on the EU-US Trade and Technology Council, which was the main mechanism for transatlantic digital cooperation. However, the EU indicated its readiness to cooperate, emphasising that it ‘remains a reliable and predictable partner’.

Several ‘omissions’ in the Strategy also underscore this readiness to engage. Notably, tech companies are not singled out for criticism, as they often are in the EU’s tech sovereignty approaches and its numerous anti-monopoly and content policy initiatives. There is no explicit reference to digital services taxes on tech companies, which have historically been a strong card in the EU’s geoeconomic relations with the US.

EU-USA cooperation initiatives

– Transparency mechanism on semiconductor subsidies

China: Between cooperation and competition

China is scarcely mentioned in the text, and digital cooperation is deferred to the forthcoming China-EU Summit in July 2025. However, the Strategy is clearly defensive: it doubles down on ‘secure and trusted 5G networks’ globally, implicitly excluding Chinese vendors like Huawei. It also casts the EU’s Global Gateway as a digital alternative to China’s Belt and Road, investing in secure cables and AI infrastructure in Asia, Africa and Latin America. In sum, the signal to Beijing is one of wary competition.

Neighbourhood: Deep engagement

Regionally, the EU will push its Digital Single Market model to its neighbours. For instance, Ukraine, Moldova, and the Western Balkans are targeted to integrate EU digital rules rapidly (secure IDs, connectivity, regulatory alignment).

Moldova

– Extension of EU Cyber-Reserve

Ukraine

– Extension of the EU Cyber-Reserve

– AI-based Local Digital Twins for urban reconstruction

– Synergies with Hub for European Defence Innovation (HEDI)

Global South: Global inclusion

The EU plans to expand Global Gateway digital projects for Africa, Asia, and Latin America: co-financing secure submarine cables, undersea connectivity to Europe, and building local digital infrastructure. A dedicated Tech Business Offer will extend to the Southern Neighbourhood and sub-Saharan Africa.

Brazil

Egypt

– eID Wallet interoperability cooperation

Costa Rica

5. Open questions

Several critical questions will impact the implementation of the Strategy.

Synchronising internal and external digital policy

So far, the EU’s internal and foreign digital policies have been in sync: Europe has practised at home what it preached abroad. The new Strategy may change these dynamics. Brussels remains committed to a values-based domestic order, but externally the Strategy recasts digital policy in terms of global rivalry and resilience in the security and economic domains. This shift from principles to geopolitical interests will trigger tensions in implementation, as the two approaches require different policy instruments, methods, and language.

Defining digital and internet governance

The Strategy repeatedly speaks of ‘digital’ affairs but also includes several references to ‘internet governance’, leaving it unclear how the EU understands the two concepts and the distinctions between them (if any). In practice, nearly all tech issues (from infrastructure to AI) fall under the internet’s umbrella, so a clear separation between the two may not be straightforward. The EU needs to clarify what each term means to avoid confusion over overlapping mandates.

Cyber vs. digital diplomacy coordination

The EU has two separate diplomatic tracks, one for cybersecurity and one for digital issues more broadly. The new Strategy implies that these will converge, but the organisational plan is vague. Will the EU keep two ambassador networks, one cyber and one digital, or will there be an integrated structure? Cyber-digital coordination will grow in importance as the Strategy is implemented. The forthcoming Danish presidency of the EU in the second half of 2025 may offer some solutions, as Denmark has experience in running holistic tech diplomacy that combines security, economic, and other policy aspects.

Implementation and resources

The Strategy lacks implementation details. Which EU body will lead its rollout? How will funding be allocated across the many initiatives (Tech Business Offer, infrastructure projects, partnerships)? Importantly, will the EU follow up on the 2023 Conclusions, which explicitly call for ensuring that ‘at least one official in every EU Delegation has relevant expertise on digital diplomacy’ and that diplomats receive training?

Progress on this front will be a key test of delivery.

AI Strategy – Computing power vs data and knowledge

The Strategy heavily emphasises computing infrastructure (AI ‘gigafactories’). In doing so, it follows global momentum rather than AI research, which shows that adding more GPU power brings diminishing returns in AI inference quality.1 Should the EU instead leverage its data strengths, broad knowledge pool, and human expertise? Ensuring quality data, skilled human capital, and innovative applications may be as important as raw computing. Balancing the EU’s highly educated workforce and data resources with these new AI investments is an open policy debate.

6. Conclusion and recommendations

The 2025 Strategy marks a realpolitik turn for EU digital policy. It recognises that digital affairs are a geopolitical competition and an economic power domain. The EU is right to bolster its tech capabilities, diversify alliances, and secure critical infrastructure. However, it must not abandon its foundational advantages.

Keep values and ethics in view

Even as the Strategy focuses on power and security, the EU’s core values (human rights, privacy, democracy) remain its unique selling points. The EU should ensure that its external actions (e.g. tech exports, partnerships) reflect these values. For example, the Ethics Guidelines for Trustworthy AI or human rights due diligence in tech supply chains should accompany hard-power initiatives. Over time, this ‘soft’ aspect of tech diplomacy will underpin global trust in EU solutions, especially during the AI transformation of societies.

Leverage the knowledge ecosystem

Europe’s educated workforce, strong research base, and innovative companies are among its strengths. The Strategy should explicitly link new initiatives (like AI factories) to European data assets and talent. For instance, investing in common European data spaces, R&D hubs, and AI talent training will complement hardware investments. Policies should channel these resources into EU security and cooperative R&D projects with partners, maximising long-term benefits.

Building digital diplomacy capacity

The EU should develop its digital diplomacy capabilities. The Commission and the High Representative for Foreign Affairs should clarify the future of the cyber and digital networks, ensuring that diplomats and delegations have the skills to carry out the Strategy. This could involve appointing Digital Envoys in key regions, scaling up EU-funded training programmes for officials, and embedding digital attachés in trade missions. Progress should be reported regularly (as the 2023 Conclusions demanded).

Clarify governance frameworks

The EU should avoid confusion by clearly defining its terms. If ‘digital diplomacy’ now covers everything from 5G to AI, the Strategy (or follow-up guidelines) should explain how it relates to internet governance, cyber diplomacy, and other fields. This will help partners and stakeholders navigate the new agenda.

Continue global engagement

Finally, the EU should remember that its influence has often come from combining hard and soft power. Alongside building alliances, it should stay actively engaged in multilateral fora (e.g. the UN Internet Governance Forum, WTO e-commerce activities) to promote interoperability and standards. The Strategy’s emphasis on bilateral ties should be balanced by efforts to shape open, rules-based markets globally.

The 2025 Strategy lays out an ambitious vision of a stronger, more assertive EU in global tech. To make it work, policymakers should marry this realpolitik turn with the EU’s enduring strengths – its values, expertise, and rule-of-law model – and put concrete implementation in place.

Annex: The strategy and conceptual approach to digital diplomacy

The 2025 strategy covers the impact of digital technology on the geopolitical environment for diplomacy, and topics on the diplomatic agenda, as per Diplo’s methodology for the impact of digitalisation on diplomacy.

The Strategy uses the term ‘digital diplomacy’ only once in the context of a policy topic to advance ‘our international priorities and to build partnerships’. It does not address the use of digital tools for the conduct of diplomacy (e.g. the use of social media). The EU has been using ‘digital diplomacy’ to cover geopolitical changes and new topics on the diplomatic agenda, as summarised here.

Would you like to learn more about AI, tech and digital diplomacy? If so, ask our Diplo chatbot!

Advancing Swiss AI Trinity: Zurich’s entrepreneurship, Geneva’s governance, and communal subsidiarity

Switzerland can chart a unique path in the global AI race by combining three strengths: Zurich’s innovative entrepreneurship, Geneva’s responsible governance, and communal enabling subsidiarity.

The Swiss AI Trinity can go beyond technology by reimagining a national social contract for the AI age, firmly grounded in Swiss values.

The country’s AI transformation unfolds as the tech world faces profound flux. Amid the US push for unfettered tech growth and escalating US-China rivalry resembling an AI arms race, the EU and nations worldwide seek their own strategies. Uncertainty grows alongside deepening concerns about AI’s impact, from jobs and the economy to education and media.

In this fluid environment, Switzerland has a rare opportunity to carve out a distinctive approach to cutting-edge AI development, anchored in subsidiarity, apprenticeship, and national traditions.

Time is short, but the timing is favourable for Switzerland:

AI is commoditised: Switzerland’s delayed start in developing LLMs is no longer a disadvantage. New LLMs emerge daily; open-source models enable easy retraining; AI agents can be built in minutes. Success now hinges on data, knowledge, and uses of AI, not just hardware or algorithms. The key is the nexus between artificial and human intelligence, where Switzerland can excel due to its robust educational and apprenticeship model.

Pushback on AI regulation: After a frenzied regulatory race over the last few years, a more balanced approach now prioritises short-term risks (jobs, education) over existential long-term threats. The Trump administration has already slowed US regulatory momentum, while the EU re-evaluates parts of its AI Act concerning generative models. Switzerland’s prudent regulatory stance has become an advantage: new AI regulation can be adopted as the technology matures and the real policy problems that require regulation crystallise.

Against this backdrop, Switzerland’s AI Trinity proposes a three-pronged strategy:

- Zurich: A hub of private-sector innovation;

- Geneva: A crucible for global governance and standardisation;

- Swiss cantons, cities, and communities: Upholding subsidiarity to drive inclusive, bottom-up AI development.

Each pillar builds on existing strengths while addressing the urgent need to rethink business models, governance, and social contracts for the AI era.

Zurich: Supercharging business and innovation

While cutting-edge technology often seems concentrated in massive data centres and trillion-dollar corporations, DeepSeek exemplifies how breakthroughs can spring from lean, agile labs. Zurich is ideally placed to harness both approaches.

- World-class ecosystem: ETH Zurich—ranked among the world’s top universities—alongside R&D hubs for Microsoft, Google, and others, provides the talent, research excellence, and entrepreneurial mindset to keep Switzerland at AI’s forefront.

- Global reach, diverse perspectives: Partnerships must extend beyond Silicon Valley. Engaging Chinese and Indian tech players fosters competition, sparks creativity, and mitigates over-reliance on Western supply chains.

- Stronger academic-industry ties: Deeper collaboration between ETH and private-sector leaders will accelerate ventures. Breakthroughs in healthcare, climate tech, and beyond emerge when elite research meets real-world application.

Zurich is thus not just a global financial centre, but a beacon for responsible, human-centric AI.

Geneva: Forging global governance and standards

While Zurich drives innovation, Geneva can shape balanced global AI governance—a race intensifying amid rival initiatives from the Gulf and emerging tech hubs. Geneva must act decisively:

- Translating hype into action: Nations urgently need pragmatic AI tools for pandemic response, environmental risk, and equitable education. Geneva-based bodies should prioritise tangible solutions, dispelling perceptions of AI as abstract hype.

- Mainstreaming AI: Treating AI as integral to health, commerce, and labour rights is vital. As AI becomes a universal necessity, Geneva’s institutions must weave digital policy into all major global negotiations.

- Modernising international organisations: Outdated, top-heavy management of international organisations lacks the agility for rapid AI evolution. Embedding AI translation services, automated reporting, and similar tools will boost transparency, efficiency, and collaboration.

- Defining standards where treaties fall short: Without robust international agreements, technical standards ensure interoperability. Geneva’s standardisation expertise positions it to lead in healthcare AI, digital trade, and environmental data.

By embracing this role, Geneva can ensure that multilateral bodies guide AI ethics in a transforming world.

Communities and cantons: Inclusion through subsidiarity

Switzerland’s greatest AI advantage lies in subsidiarity—the principle of localised decision-making. Distributing AI development across cantons and communities ensures innovation aligns with real needs, addresses local contexts, and leaves no one behind. Key activities include:

- ‘AI for All’ programme: Offer small grants to citizens and small businesses for developing AI agents, democratising access to tools and catalysing solutions rooted in local needs.

- AI education and apprenticeship: Develop AI apprenticeships, building on Switzerland’s long tradition of vocational training, and integrate AI education from primary schools to universities.

- Libraries and local AI labs: Repurpose libraries, community centres, and post offices into AI knowledge hubs. Applying machine learning to hyper-local challenges – agriculture, tourism, health – can empower communities as active creators of AI innovation.

A call to action: Switzerland’s moment to lead

Switzerland stands at a decisive juncture. Uniting Zurich, Geneva, and its cantons can nurture an AI future that is cutting-edge yet fair, transparent, and Swiss at its core.

By adopting this AI Trinity approach—balancing innovation, governance, and subsidiarity—Switzerland can show the world how to embrace advanced technology without sacrificing societal values.

Practical steps forward:

- Scale AI apprenticeships through Switzerland’s vocational tradition.

- Launch a national AI capacity-building programme for citizens and companies.

- Adapt school and university curricula to foster creativity as AI automates tasks like essay drafting.

- Repurpose libraries and post offices into community knowledge hubs.

- Prioritise ‘AI for All’ principles in public projects, procurement, and grants at all governmental levels.

The Swiss AI Trinity approach can democratise AI, empower local innovation, and fuel inclusive growth from the ground up. The tools, talent, and tradition are in place. The time to act is now.

For more information on these topics, visit diplomacy.edu.

Breaking down the OEWG’s legacy: Hits, misses, and unfinished business

The OEWG on cybersecurity (2019–2025) shaped global debates on digital security, but did it deliver? External experts weigh in on its lasting impact, while our team, who have tracked the process from day one, dissect the milestones and missed opportunities. Together, these perspectives reveal what’s next for cyber governance in a fractured world.

What is the OEWG?

Open-ended working groups (OEWGs) are a UN format typically considered the most open, as the name suggests. This means that all UN member and observer states, intergovernmental organisations, and non-governmental organisations with consultative status with the UN Economic and Social Council (ECOSOC) may attend the working group’s public meetings. Decisions, however, are made by UN member states. There are various OEWGs at the UN; here, we address the one dealing with cybersecurity.

What does the OEWG on cybersecurity do? In plain language, it tries to find more common ground on what is allowed and what is not in cyberspace, and how to ensure adherence to these rules. In the UN language, the Cyber OEWG was mandated to ‘continue to develop the rules, norms, and principles of responsible behaviour of states, discuss ways for their implementation, and to study the possibility of establishing regular institutional dialogue with broad participation under the auspices of the UN.’

How was the OEWG organised? The OEWG held an organisational session on procedures and modus operandi, followed by substantive sessions on the issues themselves, supplemented by intersessional meetings and town halls. It held 10 substantive sessions during its 5-year mandate, with the 11th and final session, at which the group will adopt its final report, just around the corner in July 2025.

The OEWG through expert eyes: Achievements, shortfalls, and future goals

As the OEWG 2019–2025 process nears its conclusion, we spoke with cybersecurity experts to reflect on its impact and look ahead. Their insights address four key questions:

- The OEWG’s most substantive contributions and shortcomings in global ICT security

- Priorities for future dialogues on responsible state behaviour in cyberspace

- The feasibility of consensus on a permanent multilateral mechanism

- The potential relevance of such a mechanism in today’s divisive geopolitical climate

Their perspectives shed light on what the OEWG has achieved—and the challenges still facing international cyber governance.

In addition to external cybersecurity experts, we asked our own team—who have tracked the OEWG process since its inception—to share their analysis. They highlight key achievements over the past five years, identify gaps in the discussions, and offer predictions on where debates may lead during the final session and beyond.

Read the full expert commentaries on our dedicated web page.

Meme coins: Fast gains or crypto gambling?

A high-stakes game of digital chance, meme coins blur the line between viral entertainment and financial risk in the wildest corner of crypto.

Meme coins have exploded in the crypto market, attracting investors with promises of fast profits and viral hype. These digital tokens, often inspired by internet memes and pop culture, like Dogecoin, Pepe, Dogwifhat and most recently Trump coin, do not usually offer clear utility. Instead, their value mostly depends on social media buzz, influencer endorsements, and community enthusiasm. In 2025, meme coins remain a controversial yet dominant trend in crypto trading.

Viral but vulnerable: the rise of meme coins

Meme coins are typically created for humour, social engagement, or to ride viral internet trends, rather than to solve real-world problems. Despite this, they enjoy wide popularity and massive online appeal. Many investors are drawn to meme coins because of the potential for quick, large returns.

For example, Trump-themed meme coins saw explosive growth in early 2024, with MAGA meme coin (TRUMP) briefly surpassing a $500 million market cap, despite offering no real utility and being driven largely by political hype and social media buzz.

Analysis reports indicate that in 2024, between 40,000 and 50,000 new meme tokens were launched daily, with numbers soaring to 100,000 during viral surges. Solana tops the list of blockchains for meme coin activity, generating 17,000 to 20,000 new tokens each day.

Chainplay’s ‘State of Memecoin 2024’ report found that over half (55.24%) of the meme coins analysed were classified as ‘malicious’.

The risks of rug pulls and scams in meme coin projects

Beneath the humour and viral appeal, meme coins often hide serious structural risks. Many are launched by developers with little to no accountability, and most operate with centralised liquidity pools controlled by a small number of wallets. The setup allows creators or early holders to pull liquidity or dump large token amounts without warning, leading to devastating price crashes—commonly referred to as ‘rug pulls.’
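Why a single large sale can crash the price is easiest to see in a minimal sketch. It assumes a constant-product automated market maker (token reserve × quote reserve = k, the design popularised by Uniswap v2) and ignores trading fees; the pool sizes and sale amount are hypothetical, real meme coin pools may use other designs, and the liquidity-withdrawal variant of a rug pull is not modelled.

```python
# Minimal sketch: price impact of a large holder dumping tokens into a liquidity pool.
# Assumptions: a constant-product pool (token_reserve * quote_reserve = k), no trading
# fees, and hypothetical reserve sizes. Real pools may differ in design and fees.


def spot_price(token_reserve: float, quote_reserve: float) -> float:
    """Price of one token in the quote asset (e.g. a USD stablecoin) implied by the reserves."""
    return quote_reserve / token_reserve


def sell_into_pool(token_reserve: float, quote_reserve: float, tokens_in: float):
    """Sell tokens_in into the pool, keeping the product of the reserves constant.

    Returns (quote_out, new_token_reserve, new_quote_reserve).
    """
    k = token_reserve * quote_reserve
    new_token_reserve = token_reserve + tokens_in
    new_quote_reserve = k / new_token_reserve
    quote_out = quote_reserve - new_quote_reserve
    return quote_out, new_token_reserve, new_quote_reserve


# Hypothetical pool: 1,000,000 tokens against 100,000 units of a USD stablecoin.
tokens, usd = 1_000_000.0, 100_000.0
price_before = spot_price(tokens, usd)

# A hypothetical insider wallet sells 1,000,000 tokens in a single transaction.
usd_out, tokens, usd = sell_into_pool(tokens, usd, 1_000_000.0)
price_after = spot_price(tokens, usd)

print(f"insider receives {usd_out:,.0f} USD")
print(f"price falls from {price_before:.4f} to {price_after:.4f} ({1 - price_after / price_before:.0%} drop)")
```

In this hypothetical pool, selling as many tokens as the pool holds halves the stablecoin reserve and cuts the spot price from 0.1 to 0.025, a 75% drop, while the seller walks away with half of the pool's stablecoins.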

On-chain data regularly reveals that a handful of wallets control the vast majority of supply in newly launched meme tokens, making market manipulation easy and trust almost impossible. These coins are rarely audited, lack transparency, and often have no clear roadmap or long-term utility, which leaves retail investors highly exposed.
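The concentration that on-chain data reveals can be expressed as the share of total supply held by the largest wallets. A minimal sketch with hypothetical balances; in practice, balances would come from a block explorer or node, and contract addresses such as liquidity pools or burn addresses would need separate treatment.

```python
# Minimal sketch: share of total supply held by the N largest wallets.
# Balances are hypothetical; real figures would come from on-chain data, and
# contract addresses (liquidity pools, burn addresses) would need separate treatment.


def top_holder_share(balances: dict[str, float], top_n: int = 10) -> float:
    """Fraction of total supply held by the top_n wallets (0.0 for an empty ledger)."""
    total = sum(balances.values())
    if total == 0:
        return 0.0
    largest = sorted(balances.values(), reverse=True)[:top_n]
    return sum(largest) / total


# Hypothetical token: 5 insider wallets with 180,000 tokens each
# and 1,000 retail wallets with 100 tokens each (1,000,000 tokens in total).
balances = {f"insider_{i}": 180_000.0 for i in range(5)}
balances.update({f"retail_{i}": 100.0 for i in range(1_000)})

print(f"top 5 wallets hold {top_holder_share(balances, top_n=5):.0%} of supply")
```

Here five wallets hold 90% of supply while a thousand retail holders share the remaining 10%, so a sale by any one insider can move the price far more than all retail activity combined.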

The combination of hype-driven demand and opaque tokenomics makes meme coins a fertile ground for fraud and manipulation, further eroding public confidence in the broader crypto ecosystem.

Gambling disguised as investing: The adrenaline rush of meme coins

Meme coins tap into a mindset that resembles gambling far more than traditional investing. The entire culture around them thrives on adrenaline-fuelled speculation, where every price spike feels like hitting a jackpot and every drop triggers a high-stakes rollercoaster of emotions. In this so-called ‘degen’ culture, traders chase quick wins fuelled by FOMO, hype, and the explosive reach of social media.

The thrill-seeking mentality turns meme coin trading into a game of chance. Investors often make impulsive decisions based on hype rather than fundamentals, hoping to catch a sudden pump before the inevitable crash.

It is all about momentum. The volatile swings create an addictive cycle: the excitement of rapid gains pulls traders back in, despite the constant risk of losing everything.

While early insiders and large holders strategically time their moves to cash out big, most retail investors face losses, much like gamblers betting in a casino. The meme coin market, therefore, functions less like a stable investment arena and more like a high-risk gambling environment where luck and timing often outweigh knowledge and strategy.

Is profit from meme coins possible? Yes, but…

While some investors have made substantial profits from meme coins, success requires expert knowledge, thorough research, and good timing. Analysing tokenomics, community growth, and on-chain data is essential before investing. However entertaining they may be, meme coins are a risky gamble. Luck remains a key factor, so meme coins should never be considered safe or long-term investments.

Meme coins vs Bitcoin: A tale of two mindsets

Many people assume that all cryptocurrencies share the same mindset, but the truth is quite different. Interestingly, cryptocurrencies like Bitcoin and meme coins are based on contrasting philosophies and psychological drivers.

Bitcoin embodies a philosophy of trust through transparency, decentralisation, and long-term resilience. It appeals to those seeking stability, security, and a store of value rooted in technology and community consensus—a digital gold that invites patience and conviction. In essence, Bitcoin calls for building and holding with reason and foresight.

Meme coins, on the other hand, thrive on the psychology of instant gratification, social identity, and collective enthusiasm. They tap into our desire for excitement, quick wins, and belonging to a viral movement. Their value is less about utility and more about shared emotion: the hope, the hype, and the adrenaline rush of catching the next big wave. Meme coins beckon with the thrill of the moment, the gamble, and the social spectacle. This makes them a reflection of the speculative and impulsive side of human nature, where the line between investing and gambling blurs.

Understanding these psychological underpinnings helps explain why the two coexist in the crypto world, yet appeal to vastly different types of investors and mindsets.

How meme coins affect the reputation of the entire crypto market

The rise and fall of meme coins do not just impact individual traders—they also cast a long shadow over the credibility of the entire crypto industry.

High-profile scams, rug pulls, and pump-and-dump schemes associated with meme tokens erode public confidence and validate sceptics’ concerns. Many retail traders enter the meme coin space with high hopes and are quickly disillusioned by manipulation and sudden losses.

This leads to a sense of betrayal, triggering risk aversion and a generalised mistrust toward all crypto assets, even those with strong fundamentals like Bitcoin or Ethereum. Such disillusionment does not stay contained. It spills over into mainstream sentiment, deterring new investors and slowing institutional adoption.

As more people associate crypto with gambling and scams rather than innovation and decentralisation, the market’s growth potential suffers. In this way, meme coins—though intended as jokes—could have serious consequences for the future of blockchain credibility.

Trading thrills or ticking time bomb?

Meme coins may offer flashes of fortune, but their deeper role in the crypto ecosystem raises a provocative question: are they reshaping finance or just distorting it? In a market where jokes move millions and speculation overrides substance, the real gamble may not just be financial—it could be philosophical.

Are we embracing innovation, or playing a dangerous game with digital dice? In the end, meme coins are not just a bet on price—they are a reflection of what kind of future we want to build in crypto. Is it sustainable value, or just viral chaos? The roulette wheel is still spinning.

Would you like to learn more about AI, tech and digital diplomacy? If so, ask our Diplo chatbot!

Cognitive offloading and the future of the mind in the AI age

The rise of AI is transforming work and education, but it also raises questions about its impact on critical thinking and cognitive independence.

AI reshapes work and learning

The rapid advancement of AI is bringing to light a range of emerging phenomena within contemporary human societies.

The integration of AI-driven tools into a broad spectrum of professional tasks has proven beneficial in many respects, particularly in terms of alleviating the cognitive and physical burdens traditionally placed on human labour.

By automating routine processes and enhancing decision-making capabilities, AI has the potential to significantly improve efficiency and productivity across various sectors.

In response to these accelerating technological changes, a growing number of nations are prioritising the integration of AI technologies into their education systems to ensure students are prepared for future societal and workforce transformations.

China advances AI education for youth

China has released two landmark policy documents aimed at integrating AI education systematically into the national curriculum for primary and secondary schools.

The initiative not only reflects the country’s long-term strategic vision for educational transformation but also seeks to position China at the forefront of global AI literacy and talent development.

The two guidelines, formally titled the Guidelines for AI General Education in Primary and Secondary Schools and the Guidelines for the Use of Generative AI in Primary and Secondary Schools, represent a scientific and systemic approach to cultivating AI competencies among school-aged children.

Their release marks a milestone in the development of a tiered, progressive AI education system, with carefully delineated age-appropriate objectives and ethical safeguards for both students and educators.

The USA expands AI learning in schools

In April, the US government outlined a structured national policy to integrate AI literacy into every stage of the education system.

By creating a dedicated federal task force, the administration intends to coordinate efforts across departments to promote early and equitable access to AI education.

Instead of isolating AI instruction within specialised fields, the initiative seeks to embed AI concepts across all learning pathways—from primary education to lifelong learning.

The plan includes the creation of a nationwide AI challenge to inspire innovation among students and educators, showcasing how AI can address real-world problems.

The policy also prioritises training teachers to understand and use AI tools, so that they do not rely solely on traditional teaching methods. It supports professional development so educators can incorporate AI into their lessons and reduce administrative burdens.

The strategy encourages public-private partnerships, using industry expertise and existing federal resources to make AI teaching materials widely accessible.

European Commission supports safe AI use

As AI becomes more common in classrooms around the globe, educators must understand not only how to use it effectively but also how to apply it ethically.

To help schools avoid introducing AI tools without guidance or reflection, the European Commission has provided ethical guidelines to help teachers use AI and data responsibly in education.

Published in 2022 and developed with input from educators and AI experts, the EU guidelines are intended primarily for primary and secondary teachers who have little or no prior experience with AI.

Instead of focusing on technical complexity, the guidelines aim to raise awareness about how AI can support teaching and learning, highlight the risks involved, and promote ethical decision-making.

The guidelines explain how AI can be used in schools, encourage safe and informed use by both teachers and students, and help educators consider the ethical foundations of any digital tools they adopt.

To discourage reliance on unexamined technology, the guidelines support thoughtful implementation by offering practical questions and advice for adapting AI to various educational goals.

AI tools may undermine human thinking

However, technological augmentation is not without drawbacks. Concerns have been raised regarding the potential for job displacement, increased dependency on digital systems, and the gradual erosion of certain human skills.

As such, while AI offers promising opportunities for enhancing the modern workplace, it simultaneously introduces complex challenges that must be critically examined and responsibly addressed.

One significant challenge that must be addressed in the context of increasing reliance on AI is the phenomenon known as cognitive offloading. But what exactly does this term entail?

What happens when we offload thinking?

Cognitive offloading refers to the practice of using physical actions or external tools to modify the information-processing demands of a task, with the aim of reducing the cognitive load on an individual.

In essence, it involves transferring certain mental functions—such as memory, calculation, or decision-making—to outside resources like digital devices, written notes, or structured frameworks.

While this strategy can enhance efficiency and performance, it also raises concerns about long-term cognitive development, dependency on technological aids, and the potential degradation of innate mental capacities.

How AI may be weakening critical thinking

A study by Dr Michael Gerlich, Head of the Centre for Strategic Corporate Foresight and Sustainability at SBS Swiss Business School, published in the journal Societies, raises serious concerns about the cognitive consequences of relying on AI in various aspects of life.

The study suggests that frequent use of AI tools may be weakening individuals’ capacity for critical thinking, a skill considered fundamental to independent reasoning, problem-solving, and informed decision-making.

More specifically, Dr Gerlich adopted a mixed-methods approach, combining quantitative survey data from 666 participants with qualitative interviews involving 50 individuals.

Participants were drawn from diverse age groups and educational backgrounds and were assessed on their frequency of AI tool use, their tendency to offload cognitive tasks, and their critical thinking performance.

The study employed both self-reported and performance-based measures of critical thinking, alongside statistical analyses and machine learning models, such as random forest regression, to identify key factors influencing cognitive performance.

Younger users, who rely more on AI, think less critically

The findings revealed a strong negative correlation between frequent AI use and critical thinking abilities. Individuals who reported heavy reliance on AI tools—whether for quick answers, summarised explanations, or algorithmic recommendations—scored lower on assessments of critical thinking.

The effect was particularly pronounced among younger users aged 17 to 25, who reported the highest levels of cognitive offloading and showed the weakest performance in critical thinking tasks.

In contrast, older participants (aged 46 and above) demonstrated stronger critical thinking skills and were less inclined to delegate mental effort to AI.

Higher education strengthens critical thinking

The data also indicated that educational attainment served as a protective factor: those with higher education levels consistently exhibited more robust critical thinking abilities, regardless of their AI usage levels.

These findings suggest that formal education may equip individuals with better tools for critically engaging with digital information rather than uncritically accepting AI-generated responses.

Although the study does not establish direct causation, the strength of the correlations and the consistency between the quantitative and qualitative data suggest that AI use may indeed be contributing to a gradual decline in cognitive independence.

However, in his study, Gerlich also notes the possibility of reverse causality—individuals with weaker critical thinking skills may be more inclined to rely on AI tools in the first place.

Offloading also reduces information retention

Other studies show that while cognitive offloading can enhance immediate task performance, it often comes at the cost of reduced long-term memory retention.

The trade-off has been most prominently illustrated in experimental tasks such as the Pattern Copy Task, where participants tasked with reproducing a pattern typically choose to repeatedly refer to the original rather than commit it to memory.

Even when such behaviours introduce additional time or effort (e.g., physically moving between stations), the majority of participants opt to offload, suggesting a strong preference for minimising cognitive strain.

These findings underscore the human tendency to prioritise efficiency over internalisation, especially under conditions of high cognitive demand.

The tendency to offload raises crucial questions about the cognitive and educational consequences of extended reliance on external aids. On the one hand, offloading can free up mental resources, allowing individuals to focus on higher-order problem-solving or multitasking.

On the other hand, it may foster a kind of cognitive dependency, weakening internal memory traces and diminishing opportunities for deep engagement with information.

From some theoretical perspectives, such as the extended mind hypothesis, cognitive offloading is not a failure of memory or attention but a reconfiguration of cognitive architecture, a process that may be adaptive rather than detrimental.

However, this perspective remains controversial, especially in light of findings that frequent offloading can impair retention and transfer of learning, and, as Gerlich's study argues, critical thinking.

If students, for example, continually rely on digital devices to recall facts or solve problems, they may fail to develop the robust mental models necessary for flexible reasoning and conceptual understanding.

The mind may extend beyond the brain

This tension has also sparked debate among cognitive scientists and philosophers, particularly in light of the extended mind hypothesis.

Contrary to the traditional view that cognition is confined to the brain, the extended mind theory argues that cognitive processes often rely on, and are distributed across, tools, environments, and social structures.

As digital technologies become increasingly embedded in daily life, this hypothesis raises profound questions about human identity, cognition, and agency.

At the core of the extended mind thesis lies a deceptively simple question: Where does the mind stop, and the rest of the world begin?

Drawing an analogy to prosthetics, external objects that functionally become part of the body, philosophers Andy Clark and David Chalmers, who introduced the extended mind thesis in 1998, argue that cognitive tools such as notebooks, smartphones, and sketchpads can become integrated components of our mental system.

These tools do not merely support cognition; they constitute it when used in a seamless, functionally integrated manner. This conceptual shift has redefined thinking not as a brain-bound process but as a dynamic interaction between mind, body, and world.

Balancing AI and human intelligence

In conclusion, cognitive offloading represents a powerful mechanism of modern cognition, one that allows individuals to adapt to complex environments by distributing mental load.

However, its long-term effects on memory, learning, and problem-solving remain a subject of active investigation. Rather than treating offloading as inherently beneficial or harmful, future research and practice should seek to balance its use, leveraging its strengths while mitigating its costs.