Limits of Rule-Based AI: Learning from the legacy of Douglas Lenat

Douglas Lenat and his team worked for nearly four decades on a project called Cyc, which aims to teach computers to think more like humans. Cyc is a digital knowledge base containing over 25 million rules. Lenat acknowledged the rise of machine learning but believed his approach offered a more logical and nuanced understanding of the world. He envisioned a future hybrid AI system combining the strengths of both Cyc and large language models. Lenat also argued that AI can enhance but not replace human intelligence, and that it cannot become conscious.

On 31 August 2023, Douglas Lenat died. For 40 years, he was a prominent promoter of a rule-based approach to AI. Over the course of the Cyc project, he collected 25 million rules to teach computers to think more like humans. He hypothesised that this massive collection of rules would give computers an understanding of the world similar to the one humans acquire through experience. When he started in 1984, he estimated that he would need 100 person-years to collect all the rules. It took 2,000 person-years, yet the system could not mimic human intelligence.

Douglas Lenat in front of the Cyc graph

However, ChatGPT and other large language models (LLMs) have started to mimic human intelligence by generating plausible-sounding text similar to what humans would write. Rather than relying on hand-written rules, LLMs identify patterns in vast amounts of data. They search for probable answers, not the logically indisputable answers Lenat hoped for.

Disambiguation was one of the main problems for the Cyc project and for rule-based AI overall. Humans can interpret the different meanings of a word depending on the context, while machines struggle to do so. It was next to impossible for Lenat to write enough rules to anticipate every context in which language would be used and interpreted.
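To make the disambiguation problem concrete, here is a minimal sketch of rule-based word-sense selection. The rules, cue words, and function name are hypothetical and invented for illustration; they are not taken from Cyc, which used a far richer logical representation.

```python
# Hypothetical hand-written rules for one ambiguous word. Each rule maps
# a set of context cue words to a sense. None of this comes from Cyc.
SENSE_RULES = {
    "bank": [
        ({"money", "loan", "deposit", "account"}, "financial institution"),
        ({"river", "water", "fishing", "shore"}, "edge of a river"),
    ],
}


def disambiguate(word, sentence):
    """Return the sense whose cue words appear in the sentence, else None."""
    tokens = {t.strip(".,!?").lower() for t in sentence.split()}
    for cues, sense in SENSE_RULES.get(word, []):
        if cues & tokens:  # at least one cue word is present
            return sense
    return None  # no rule anticipated this context


print(disambiguate("bank", "She opened an account at the bank."))
print(disambiguate("bank", "We sat on the bank of the river."))
print(disambiguate("bank", "The pilot had to bank sharply to the left."))
```

The first two sentences match a rule, but the third falls through because nobody wrote a rule for the aviation sense. Each new context needs another rule, which is why the rule count keeps growing.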

Although his experiment and rule-based model did not succeed, there are a few lessons from Lenat’s work:

- Trustworthy AI requires explainability, which, in his view, could be achieved by tracing AI answers logically back to basic rules of human behavior. Explainability remains the main problem with LLMs.

- Reinforcement learning is critical for AI; his Cyc system did something similar by revising answers, adjusting rules, and indicating pros and cons.

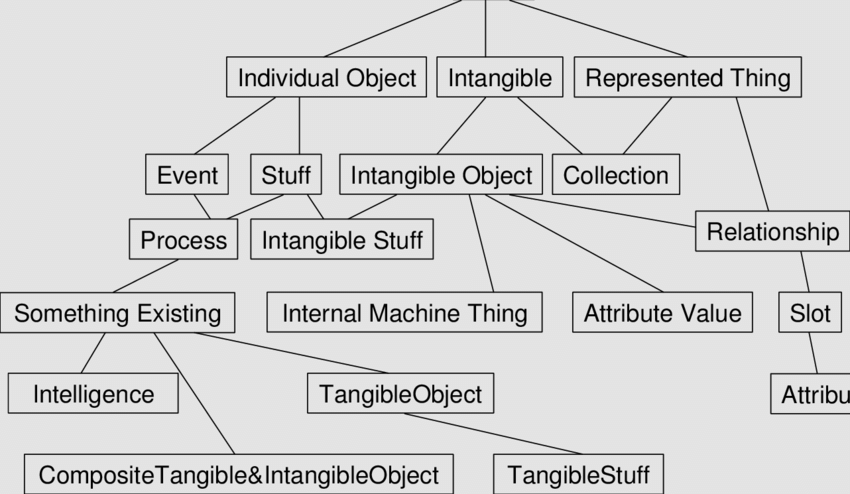

- Cyc’s logical mappings from the 1990s are similar to knowledge graphs in modern AI.

- AI can only enhance, not replace, human intelligence. Moreover, he argued, AI cannot become conscious.
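The explainability lesson in the list above can be illustrated with a small sketch: a rule engine that records which rule produced each conclusion, so that any answer can be traced back to the facts it started from. The facts, rule names, and functions are hypothetical and only show the general idea of forward chaining with a derivation trace; this is not Cyc's actual inference engine.

```python
# Hypothetical facts and rules for illustration. Not taken from Cyc.
RULES = [
    # (rule name, premises, conclusion)
    ("R1", {"is_a(Tweety, bird)"}, "has(Tweety, wings)"),
    ("R2", {"has(Tweety, wings)", "not_injured(Tweety)"}, "can(Tweety, fly)"),
]


def forward_chain(facts, rules):
    """Apply rules until no new fact appears, recording each derivation."""
    known = set(facts)
    derivation = {}  # derived fact -> (rule name, premises used)
    changed = True
    while changed:
        changed = False
        for name, premises, conclusion in rules:
            if premises <= known and conclusion not in known:
                known.add(conclusion)
                derivation[conclusion] = (name, sorted(premises))
                changed = True
    return known, derivation


def explain(fact, derivation, depth=0):
    """Return indented lines tracing a fact back to the given facts."""
    indent = "  " * depth
    if fact not in derivation:
        return [f"{indent}{fact} (given)"]
    name, premises = derivation[fact]
    lines = [f"{indent}{fact} (by {name})"]
    for premise in premises:
        lines.extend(explain(premise, derivation, depth + 1))
    return lines


known, derivation = forward_chain(
    {"is_a(Tweety, bird)", "not_injured(Tweety)"}, RULES
)
print("\n".join(explain("can(Tweety, fly)", derivation)))
```

Every conclusion here comes with a chain of named rules that a person can inspect. An LLM's output has no comparable trace, which is the contrast the explainability point draws.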

Lenat argued that his rule-based model is an example of a ‘left-brain’ approach aiming for logical clarity and consistency, while LLMs are more on the ‘right-brain’ side, favouring what is plausible and probable. LLMs are more ‘emotional’ than rational in that they ‘hallucinate’ and are not always correct.

Does AI need to mimic humans by balancing left-brain (rational) and right-brain (emotional) cognition? It remains to be seen whether AI will be developed through a hybrid approach that combines rule-based systems and LLMs.

Read more in The Economist.