Creators of AI issue new warning, urging that AI risks be made a global priority

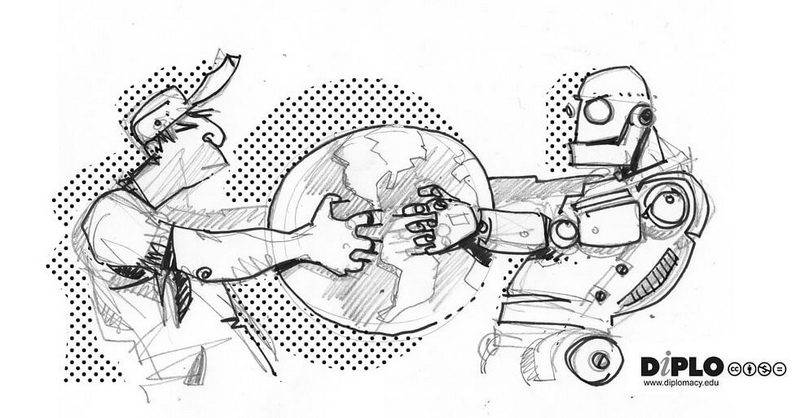

Prominent AI developers issue a stark warning about AI risks, stressing the need for global cooperation and regulation.

Prominent figures in AI development, including leaders at OpenAI and DeepMind, have signed a one-sentence open letter that underscores the risks posed by AI and calls for them to be addressed globally. The statement reads: "Mitigating the risk of extinction from AI should be a global priority alongside other societal-scale risks such as pandemics and nuclear war."

Organised by the nonprofit Center for AI Safety, the open letter has been signed by a wide range of people, including CEOs, executives, engineers, academics, and scientists from both tech and non-tech backgrounds.

Critics argue that such warnings from AI developers feed the hype around their products' capabilities and divert attention from the immediate need for regulation. Supporters counter that addressing potential AI risks, including catastrophic ones, is essential to prevent harm before it occurs.