Dear readers,

A new biometric-cryptocurrency project has diverted everyone’s attention from AI developments to iris patterns and privacy issues. Meanwhile, on the competition front, no fewer than four new cases against Big Tech emerged, two of which are outlined below.

Let’s get started.

Stephanie and the Digital Watch team

// HIGHLIGHT //

There’s a new device in town, and it’s coming for your iris

Nowadays, people are happily sharing biometric data through their trendy smartwatches. The allure of profiting from cryptocurrency is as tantalising as Bitcoin’s early days. And Sam Altman has garnered a considerable following of techno-enthusiasts since the launch of ChatGPT.

So the timing couldn’t be better for Sam Altman to relaunch his Worldcoin project, a cryptocurrency-cum-identity network that functions by verifying that someone is both a human being and a unique person. The verification is carried out by a custom-built spherical device called an Orb. (Read about Worldcoin’s history.)

Are you unique? The uniqueness requirement is why verification is based on an iris scan: since the structure of our irises is both individually identifiable and more or less stable over time, iris biometrics are considered highly accurate and reliable.

Privacy safeguards: Worldcoin also provides some privacy features. Iris scans are processed locally on each Orb, and turned into a set of numbers. The original scan is then deleted (unless the user prefers to have it stored on Worldcoin’s servers ‘to reduce the number of times you may need to go back to an Orb’).
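To make the idea of a uniqueness check on ‘a set of numbers’ more concrete, here is a minimal sketch in Python. It is purely illustrative and is not Worldcoin’s actual pipeline: the bit-list representation, the `UniquenessRegistry` class, the `hamming_fraction` helper, and the 0.25 threshold are all hypothetical assumptions. It only shows how a registry could reject a second enrolment by comparing numeric codes, without ever holding the original image.

```python
from typing import List

# Hypothetical representation: a fixed-length list of 0/1 values
# derived from a scan. The raw image is never passed to this code.
IrisCode = List[int]


def hamming_fraction(a: IrisCode, b: IrisCode) -> float:
    """Return the share of positions at which two codes differ."""
    if not a or len(a) != len(b):
        raise ValueError("codes must be non-empty and of equal length")
    return sum(x != y for x, y in zip(a, b)) / len(a)


class UniquenessRegistry:
    """Stores numeric codes only and rejects near-duplicates."""

    def __init__(self, threshold: float = 0.25) -> None:
        # Assumed cut-off: codes differing in fewer than 25% of
        # positions are treated as coming from the same person.
        self.threshold = threshold
        self._codes: List[IrisCode] = []

    def enroll(self, code: IrisCode) -> bool:
        """Return True if the code is new, False if it looks like a repeat."""
        for known in self._codes:
            if hamming_fraction(code, known) < self.threshold:
                return False
        self._codes.append(list(code))
        return True


registry = UniquenessRegistry()
first = [0, 1, 0, 1, 1, 0, 0, 1]
rescan = [0, 1, 0, 1, 1, 0, 0, 0]       # same person, one noisy bit
stranger = [1, 0, 1, 0, 0, 1, 1, 0]     # differs in every position

first_ok = registry.enroll(first)
rescan_ok = registry.enroll(rescan)
stranger_ok = registry.enroll(stranger)
```

A distance threshold is used rather than an exact match because two scans of the same eye are unlikely to produce identical numbers. Where that threshold sits is a design choice, trading false rejections against missed duplicates.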

Regulators stepping in: Despite the safeguards, European regulators have been quick to react. France’s privacy watchdog said it had reservations about the legality of the biometric data collection, and how it’s being stored. The UK said it was reviewing the project.

Germany is way ahead: Its data protection regulator in Bavaria – the lead EU authority investigating Worldcoin due to the company’s German subsidiary in the region – has been investigating the project’s biometric data processing since November last year.

Why the iris scanning project is more than a headache

As the investigations unfold, there are several challenges that are raising alarm bells about the iris project.

1. The massive database. Regardless of all the noble purposes (mostly) behind the Worldcoin project, the fact is that a massive biometric database is being built. And we all know the risks that come with that – from breaches to data misuse.

2. The Orb operators. Let’s say your iris pattern is deleted immediately. There are still plenty of risks associated with how that data is collected. The company emphasises that the Orb operators – the people entrusted with the shiny spheres – are independent contractors, adding that ‘we have no control over and disclaim all liability for what they say or how they conduct themselves’.

3. The money pitch. Worldcoin is providing people with an incentive to have their irises scanned: the prospect of making money. ‘Eligible verified users’, that is, anyone who’s had their iris scanned, ‘can claim one free WLD token per week with no maximum.’ On the one hand, a company is finally paying users for their data, but on the other hand, that data is sensitive biometric information. Are users on an equal footing with the company in this exchange? Should the sale of sensitive biometric information be permitted? It’s a transaction that warrants closer scrutiny.

Beyond the boundaries of what is acceptable or prohibited, projects that involve large-scale collection of biometric data are undoubtedly contributing to society’s changing attitudes towards privacy. It’s probably time to reassess the essence of what users are actually trading, and more than that, whether users have the power to defend their rights and position in this negotiation.

// AI //

Industry leaders partner to establish forum for responsible development of frontier AI

Four companies developing AI – Anthropic, Google, Microsoft, and OpenAI – have launched a new industry body to focus on the safe and responsible development of frontier AI models, that is, models that exceed the capabilities of what’s currently available.

The Frontier Model Forum will focus on identifying best practices for safety standards, advancing AI safety research by coordinating efforts on areas like adversarial robustness and interpretability, and facilitating secure information sharing between companies and governments on AI safety and risks.

Why is this relevant? Looking beyond the AI models we see today, over 350 AI experts recently warned that future AI could pose a risk of human extinction, placing it alongside other global perils such as pandemics and nuclear warfare. The signatories included the leaders of the very AI companies driving the Frontier Model Forum forward.

// CONTENT POLICY //

Biden administration challenges social media censorship order

The Biden administration has criticised a recent court order restricting government officials’ communications with social media companies as overly broad. Appealing the court order, the government said the order hampers its ability to fight misinformation, and must be lifted.

How it started. In May 2022, the attorneys general of Missouri and Louisiana sued the government for demanding that social media platforms remove content that the government deemed misinformation. On 4 July 2023, the Louisiana court ordered government agencies to refrain from communicating with social media companies for the purpose of moderating content. In other words, the court said the government was only allowed to contact social media companies on content related to national security threats, criminal activity, and cyberattacks.

The government’s counter-argument. The government argues that it’s one thing to try to persuade platforms, and quite another to coerce them: ‘The district court’s ruling ignored that fundamental distinction… [it] equated the government’s legitimate efforts to identify truthful information with illicit efforts to “silenc[e] the voice of opposition”… and… to coerce.’

Why is this case relevant? First, the order drives a wedge between the US government and social media companies by setting a precedent for how the two can interact. Second, it affects the way misinformation is tackled by undermining the credibility of public authorities as trustworthy providers of information. Third, the idea that social media giants such as Facebook and Twitter can be easily coerced into compliance is not exactly the image we all have of them…

Case numbers: District Court, W.D. Louisiana, 3:22-cv-01213; Court of Appeals, 5th Circuit, 23-30445

Breton tells NGOs: Shutdowns only in far-reaching situations; courts will have final say

You could say that Internal Market Commissioner Thierry Breton rocked the boat a little when he recently suggested on France Info that online platforms could be shut down if they don’t remove illegal content immediately, especially when riots and violent protests are involved. Over 60 civil rights NGOs immediately asked him to clarify that the Digital Services Act (DSA) would not be used as a censorship tool.

Breton has now clarified his comment: The possibility of a temporary suspension is a last resort if a platform fails to take necessary and effective actions in far-reaching situations, such as systemic failure to terminate infringements linked to calls for violence or manslaughter. In any case, the courts will have the final say.

Why is this relevant? The exchange between the European Commission and the NGOs served to clarify what type of last-resort measures against infringement can be ordered by authorities. The DSA’s obligations for very large online platforms and search engines come into effect on 25 August.

// ANTITRUST //

EU confirms antitrust investigation against Microsoft for bundling Teams with Office

It didn’t take long for the European Commission to confirm our hunch from last week. Just days after Alfaview’s anti-competition complaint against Microsoft, the commission launched formal proceedings against Microsoft for bundling the communication software Teams with its Office 365.

A long time coming. At the height of the COVID-19 pandemic in 2020, Zoom soared to success while Teams emerged as a formidable competitor. It was during this time that Microsoft decided to bundle Teams with Office. The move faced backlash from Slack, a rival company (that was subsequently acquired by Salesforce in 2021), which complained to the commission that Microsoft’s bundling constituted an abuse of its dominant position.

Why is this case relevant? This is the European Commission’s first investigation against Microsoft since the Internet Explorer bundling case concluded in 2009 (Microsoft was fined a few years later for breaching its commitments). The case also highlights the limited effectiveness of antitrust laws and enforcement in deterring dominant companies. Even if Microsoft were to lose the case, Teams would remain firmly established as one of the leading meeting software apps, so any finding of anti-competitive behaviour would do little to displace it.

Case number: AT.40721

French competition authority to investigate Apple’s app tracking policy

The French competition authority has launched an investigation into Apple’s practices for allegedly abusing its dominant market position. Advertisers have complained that while Apple imposes its App Tracking Transparency (ATT) policy upon them, it exempts itself from the same regulations, resulting in self-preferential treatment.

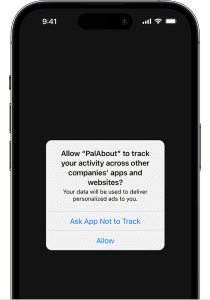

The issues with Apple’s tracking policy. Apple’s ATT policy, first announced in 2020, triggers a privacy pop-up to iPhone and iPad users during the installation of third-party apps attempting to track them. That’s very much welcomed by privacy advocates. However, app developers say that this policy does not extend to Apple’s own apps, creating hesitation among users to allow third-party tracking, leading them to favour Apple’s apps. This also means that Apple has access to more complex device and advertising data than third-party developers, allowing it to more accurately target its ads to users in ways that third-party developers cannot.

Apple says its apps do not track users via third-party apps, and hence, do not require the ATT prompt. But competition authorities aren’t so sure anymore that this isn’t an abusive self-preferencing practice.

Why is this case relevant? First, this case has been gaining momentum since 2020. At that time, advertising associations approached the French Competition Authority with a complaint against the ATT policy and a request for interim measures against Apple. A year later, the authority concluded that there was nothing wrong with giving users additional ways to decide whether they wished to be tracked. At that time, it also had no proof that Apple was subjecting third-party app developers to stricter measures than those it imposed on itself for comparable purposes. This is precisely what the French authority will now be looking at. Second, multiple jurisdictions are looking into the same issue, including the UK, Italy, Germany, and California.

Was this newsletter forwarded to you, and you’d like to see more?

Since it’s a relatively quiet month, we’re looking ahead at the next 4-5 weeks:

10–13 August: DEF CON 31 in Las Vegas will feature, alongside its workshops, training, and contests, the White House-backed red-teaming of OpenAI’s models.

21 August–1 September: The Ad Hoc Committee on Cybercrime meets in New York for its 6th session.

25 August: Very large online platforms and search engines must start abiding by the DSA’s obligations as of this date.
