Dear readers,

It’s more AI governance this week: The US White House is inching towards AI regulation, marking a significant shift from the laissez-faire approach of previous years. At the UN, the Secretary-General is also shaking (some) things up.

Elsewhere, cybercrime is rearing its ugly head, bolstered by generative AI. Antitrust regulators gear up for new battles while letting go of others. And in case you haven’t heard, Twitter’s iconic blue bird logo is no more.

Let’s get started.

Stephanie and the Digital Watch team

// HIGHLIGHT //

Voluntary measures a precursor to White House regulations on AI

There’s more than meets the eye in last week’s announcement that seven leading AI companies in the USA – Amazon, Anthropic, Google, Inflection, Meta, Microsoft, and OpenAI – agreed to implement voluntary safeguards. The announcement made it crystal clear that executive action on AI is imminent, indicating a shift to a higher gear towards AI regulation within the White House.

AI laws on the horizon

In comments after his meeting with AI companies, President Joe Biden spoke of plans for new rules: ‘In the weeks ahead, I’m going to continue to take executive action to help America lead the way toward responsible innovation.’ The White House also confirmed that it ‘is currently developing an executive order and bipartisan legislation to position America as a leader in responsible innovation’. In addition, the voluntary commitments state that they ‘are intended to remain in effect until regulations covering similar issues are officially enacted’.

In June, officials revealed they were already laying the groundwork for several policy actions, including executive orders, set to be unveiled this summer. Their work involved creating a comprehensive inventory of government regulations applicable to AI, and identifying areas where new regulations are needed to fill the gaps.

The extent of the White House’s shift in focus will be revealed when the executive order(s) are announced. One possibility is that they will focus on the same safety, security, and trust aspects that the voluntary safeguards address, mandating new rules to fill in the gaps. Another possibility, though less likely, is for the executive action to focus on tackling China’s growth in the AI race.

The voluntary measures

While the voluntary commitments address some of the main risks, they mostly encompass practices that companies are either already implementing or have announced, making them less impressive. In a way, the commitments appear reminiscent of the AI Pact announced by European Commissioner Thierry Breton as a preparatory step for the EU’s AI Act – a way for companies to get ready for impending regulations. In addition, these commitments apply primarily to generative models that surpass the current industry frontier in terms of power and scope.

The safeguards revolve around three crucial principles that should underpin the future of AI: Safety, security, and trust.

1. Safety: Companies have pledged to conduct security testing of their AI systems before release, employing internal and external experts to mitigate risks related to biosecurity, cybersecurity, and societal impacts. The White House previously endorsed a red-teaming event at DEFCON 31 (taking place in August), aimed at identifying vulnerabilities in popular generative AI tools through the collaboration of experts, researchers, and students.

2. Security: Companies have committed to investing in cybersecurity and insider threat safeguards, ensuring proprietary model weights (numeric parameters that machine learning models learn from data during training to make accurate predictions) are released only under intended circumstances and after assessing security risks. They have also agreed to facilitate third-party discovery and reporting of AI system vulnerabilities to support prompt action on any post-release challenges.

3. Trust: Companies have committed to developing technical mechanisms, such as watermarking, to indicate AI-generated content, promoting creativity while reducing fraud and deception. OpenAI is already exploring watermarking. Companies have also pledged to publicly disclose AI system capabilities, limitations, and appropriate/inappropriate use. They will also address security and societal risks, including fairness, bias, and privacy – again, a practice some companies already implement.

The industry’s response

Companies have welcomed the White House’s lead in bringing them together to agree on voluntary commitments (emphasis on voluntary). While they have advocated for future AI regulation in their testimonies and public remarks, the industry generally leans towards self-regulation as the preferred approach.

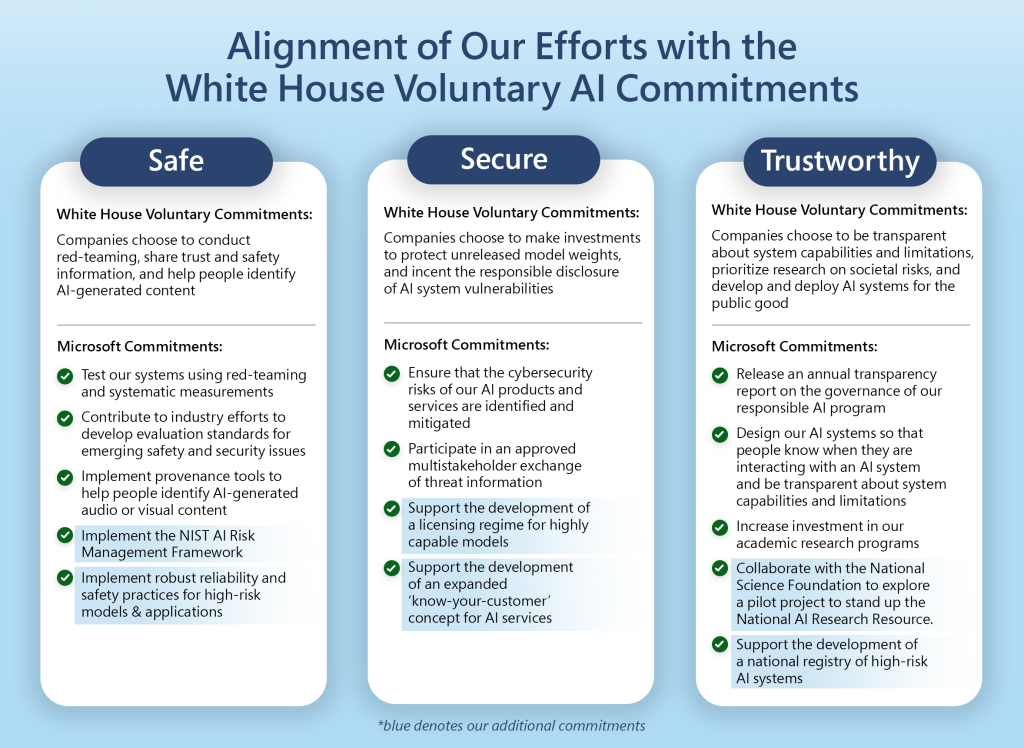

For instance, Meta’s Nick Clegg said the company was pleased to make these voluntary commitments alongside others in the sector, which ‘create a model for other governments to follow’. (We’re unsure what he meant, given that other countries have already introduced new laws or draft rules on AI.) Microsoft’s Brad Smith went a step further, noting that the company is not only already implementing the commitments, but is going beyond them (see infographic).

Minimal impact on the international front

The White House said that its current work seeks to support and complement ongoing initiatives, including Japan’s leadership of the G7 Hiroshima Process, the UK’s leadership in hosting a Summit on AI Safety, India’s leadership in the Global Partnership on AI, and ongoing talks at the UN (no mention of the Council of Europe negotiations on AI though).

In practice, we all know how intergovernmental processes operate, along with the pace at which things generally unfold. So no immediate changes are expected.

Plus, the USA may well contemplate the regulation of AI companies within its own borders, but opening the doors to international regulation of its domestic enterprises is an entirely separate issue.

// AI //

UN Security Council holds first-ever AI debate; Secretary-General announces initiatives

The UN Security Council held its first-ever debate on AI (18 July), delving into the technology’s opportunities and risks for global peace and security. A few experts were also invited to participate in the debate chaired by Britain’s Foreign Secretary James Cleverly. (Read an AI-generated summary of country positions, prepared by DiploGPT.)

In his briefing to the 15-member council, UN Secretary-General Antonio Guterres promoted a risk-based approach to regulating AI, and backed calls for a new UN entity on AI, akin to models such as the International Atomic Energy Agency, the International Civil Aviation Organization, and the Intergovernmental Panel on Climate Change.

Why is it relevant? In addition to the debate, Guterres announced that a high-level advisory group will begin exploring AI governance options by late 2023. He also said that his latest policy brief (published 21 July) recommends that countries develop national AI strategies and establish global rules for military AI applications, and urges them to ban, by 2026, lethal autonomous weapons systems (LAWS) that function without human control. Given that a global agreement on AI principles is already a big challenge in itself, agreement on a ban on LAWS (negotiations have been ongoing within the dedicated Group of Governmental Experts since 2016) is an even greater challenge.

Cybercriminals using generative AI for phishing and producing child sexual abuse content

Canada’s leading cybersecurity official, Sami Khoury, warned that cybercriminals are now using AI to develop harmful software, write convincing phishing emails, and spread false information online.

In separate news, the Internet Watch Foundation (IWF) reported that it has looked into 29 reported cases of URLs potentially housing AI-generated child sexual abuse imagery, and confirmed that 7 of those URLs did indeed contain such content. During their analysis, IWF experts also discovered an online manual that teaches offenders how to refine prompts and train AI systems to produce increasingly realistic outcomes.

Why is it relevant? Reports from law enforcement and cybersecurity authorities (such as Europol) have previously warned about the potential risks of generative AI. Real-world instances of suspected AI-generated undesirable content are now being documented, marking a transition from perceiving it as a possible threat to acknowledging it as a current risk.

// ANTITRUST //

FTC suspends competition case in Microsoft’s Activision takeover

The US Federal Trade Commission (FTC) has suspended its competition case against Microsoft’s takeover of Activision Blizzard, which was scheduled for a hearing in an administrative court in early August.

Since then, Microsoft and Activision Blizzard have agreed to extend the deadline for closing the acquisition deal by 3 months to 18 October.

Why is it relevant? This indicates that the deal is close to being approved everywhere, especially since Microsoft and Sony have also reached agreements ensuring the availability of the Call of Duty franchise on PlayStation – commitments that are easing the concerns of regulators who initially opposed the deal.

Microsoft faces EU antitrust complaint over bundling Teams with Office

Microsoft is facing a new EU antitrust complaint lodged by German company alfaview, centering on Microsoft’s practice of bundling its video app, Teams, with its Office product suite. Alfaview says the bundling gives Teams an unfair competitive advantage, putting rivals at a disadvantage.

The European Commission has confirmed receipt of the antitrust complaint, which alfaview was first to announce. The Commission, which has been investigating Microsoft informally, is said to be preparing to launch a formal investigation since the company’s remedies so far were deemed insufficient.

Why is it relevant? This is not the first complaint against Microsoft’s Teams-Office bundling: Salesforce lodged a similar complaint in 2020. The Commission does not take anti-competitive practices lightly, so we can expect it to come out against Microsoft’s practices in full force.

// DSA //

TikTok: Almost, but not quite

TikTok, a social media platform owned by a Chinese company, appears to be making progress towards complying with the EU’s Digital Services Act. It willingly subjected itself to a stress test, indicating its commitment to meeting the necessary requirements.

After a debrief with TikTok CEO Shou Zi Chew, European Commissioner for the Internal Market Thierry Breton tweeted that the meeting was constructive, and that it was now time for the company ‘to accelerate to be fully compliant’.

Why is it relevant? TikTok is trying very hard to convince European policymakers of its commitment to protecting people’s data and to implementing other safety measures. Countries have been viewing the company as a security concern, prompting the company to double and triple its efforts at proving its trustworthiness. Compliance with the EU’s Digital Services Act (DSA) could help restore the company’s standing in Europe.

// CYBERSECURITY //

Chinese hackers targeted US high-ranking diplomats

The US ambassador to China, Terry Branstad, was hacked by a Chinese government-linked spying operation in 2019, according to a report by the Wall Street Journal. The operation targeted Branstad’s private email account and was part of a broader effort by Chinese hackers to target US officials and their families.

Daniel Kritenbrink, the assistant secretary of state for East Asia, was among those targeted in the cyber-espionage attack. These two diplomats are considered to be the highest-ranking State Department officials affected by the alleged spying campaign.

The Chinese government has denied any involvement in the hacking.

Why is it relevant? The news of the breach comes amid ongoing tensions between the USA and China; the fact that the diplomats’ email accounts had been monitored for months could further strain relations between the two countries. It also highlights the ongoing issue of state-sponsored cyber espionage.

Was this newsletter forwarded to you, and you’d like to see more?

22–28 July: The 117th meeting of the Internet Engineering Task Force (IETF) continues this week in San Francisco and online.

24–28 July: The Open-Ended Working Group (OEWG) is holding its 5th substantive session this week in New York. Bookmark our observatory for updates.
