Dear readers,

Once again we’re starting with an AI-related highlight: There’s been progress in the AI Act’s legislative journey, and the EU could well see the proposed rules come into effect very soon. In other news, ChatGPT is now being monitored in Latin America, while TikTok got kicked off Austrian government phones.

Let’s get started.

Stephanie and the Digital Watch team

// HIGHLIGHT //

Europe’s AI Act moves ahead: Why, how, and what’s next

The EU’s proposed AI Act moved ahead last week after a key vote at the committee level. In practice, this means that the proposed law could very well be passed by the end of this year. Let’s break all of this down and see what this means for companies and consumers.

Who voted. Last week, members of the European Parliament in two committees – the Internal Market Committee and the Civil Liberties Committee – voted on hundreds of amendments made to the European Commission’s original draft rules.

The AI Act proposes a sliding scale of rules based on risk. Practices with an unacceptable level of risk will be prohibited; those considered high-risk will carry a strict set of obligations; less risky ones will have more relaxed rules, and so on.

Why the vote is relevant. First, lawmakers wanted to ensure that general-purpose AI – like ChatGPT – is captured by the new draft rules. Second, in the grand scheme of things, when one of the principal EU entities agrees on a text, that marks a significant milestone in the EU’s multi-stage legislative process.

What Parliament’s version of the AI Act says. There are many new amendments, so we’ve rounded up the most important:

- Tough rules for ChatGPT-like tools. Parliament’s amendments regulate ChatGPT and similar tools. The proposed rules place generative AI systems under a new category called Foundation Models (the models that underpin generative AI tools). Providers would have to abide by obligations similar to those for high-risk systems. This means applying safety checks, data governance measures, and risk mitigations before introducing models into the market. The proposed rules would also oblige them to consider foreseeable risks to health, safety, and democracy.

- Copyright tackled, too (sort of). Providers would also need to be transparent: They would need to inform people that content was machine-generated and provide a summary of (any) copyrighted materials used to train their AIs. It’s then up to the rights holders to sue for copyright infringement if they so decide.

- New prohibited practices introduced. Parliament wants to see intrusive and discriminatory uses of AI systems banned. These would include real-time remote biometric identification systems in public spaces, the use of emotion recognition systems by law enforcement and employers, and the indiscriminate scraping of biometric data from social media or CCTV footage for creating databases (this reminds us of Clearview AI’s practices – coincidentally, Austria’s Data Protection Authority ruled against the company last week).

- Consumers may complain. The proposal boosts people’s right to file complaints about AI systems and receive explanations of decisions based on high-risk AI systems that impact their rights.

- Research activities excluded. AI components provided under open-source licences are also excluded. So if a company says it is experimenting with a system, it might be able to avoid the rules. But if it then implements that system? The rules would apply.

Compare and contrast with China’s plans. Interestingly, the draft rules on generative AI, which China published last month, contain similar provisions on transparency, accountability, data protection, and risk management. The Chinese version does, however, go much further on copyright (tools can’t be trained on data that infringes intellectual property rights) and the accuracy of information (whatever is generated by AI needs to be true and accurate) – two major concerns for governments around the world.

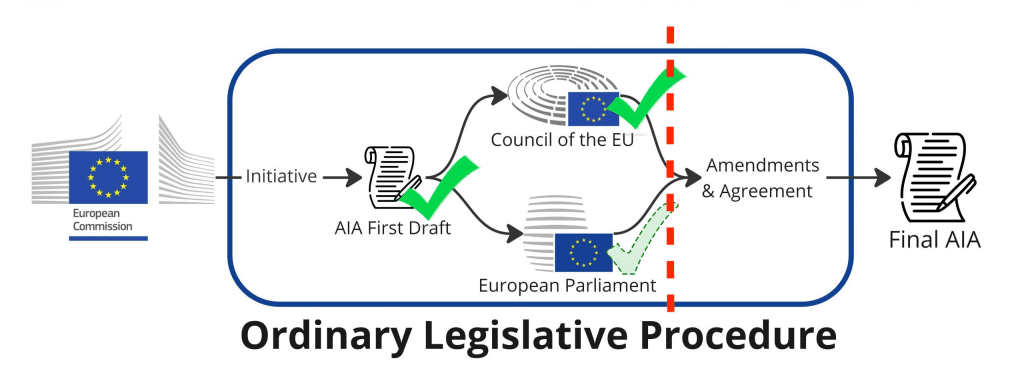

What to expect in the next stage. Once the discussions between the EU Council and European Parliament (on the Commission’s proposal) start – the so-called trilogues – there’s a risk that the rules could get watered down. It’s not necessarily a matter of diluting the stringent rules for providers – six months on from the introduction of ChatGPT, there’s a pretty clear understanding of what these tools can, cannot, and shouldn’t be allowed to do.

Rather, it’s more a matter of governments wanting to ensure their own freedom to use AI tools in ways they deem essential for people’s safety (including some practices that Parliament wants banned) and to address national security concerns when needed.

As for timelines, there’s pressure from all sides to see this through by the end of the year. Providers want legal certainty, users want protection, and the Spanish Presidency (which takes the helm of the EU Council from July to December this year) will want to be remembered for seeing the law through.

// AI //

Data protection authorities in Latin America monitoring ChatGPT

Latin American data protection watchdogs forming part of the Ibero-American Data Protection Network (RIPD) are monitoring OpenAI’s ChatGPT for potential privacy breaches. The network, comprising 16 authorities from 12 countries, is also coordinating action around children’s access to the AI tool and other risks, such as misinformation.

Why is it relevant? ChatGPT has become a global concern, far beyond the investigative action we’d expect from the usual regulatory hotspots (USA, Europe, China).

// COMPETITION //

European Commission approves Microsoft’s acquisition of Activision

Microsoft’s acquisition of Activision, the creator of the widely popular Call of Duty video game franchise, has received the European Commission’s seal of approval. The approval is conditional on Microsoft adhering fully to its commitments.

Since the EU’s antitrust regulators believe that Microsoft could harm competition if it made Activision’s games exclusive to its own cloud game streaming service, the company will now have to give consumers a licence to stream anywhere they like.

Why is it relevant? This contrasts with last month’s decision by the UK’s Competition and Markets Authority (CMA) to block the acquisition over concerns that the merger would negatively affect the cloud gaming industry. The CMA’s decision will be confirmed or rejected on appeal. In the USA, the Federal Trade Commission’s case is scheduled for a hearing on 2 August.

// TIKTOK //

Austria blocks TikTok from government phones

The Austrian government has joined other countries in banning Chinese-owned TikTok from federal government officials’ work phones.

The announcement was made by Austria’s Federal Chancellor, together with his vice-chancellor (and minister of culture and arts), and the ministers for finance and home affairs. Citing the ban by the European Commission in February, Austria is concerned with three issues:

- Foreign authorities (read: China) potentially having technical access to official devices through the app’s functions and exploiting any vulnerabilities to access sensitive information.

- The potential for data protection and security breaches through the collection of a large amount of personal information and sensor data.

- The risk of influencing the opinion-forming process of public officials, such as through the manipulation of search results.

Why is it relevant? This adds momentum to the ongoing anti-TikTok wave in Europe and the USA and increases pressure on the company to prove its trustworthiness and security measures to avoid being blocked.

// ANTITRUST //

Italy investigating Apple for alleged abuse of dominant position in app market

The Italian competition authority (the Autorità Garante della Concorrenza e del Mercato – AGCM) has launched an investigation into Apple’s uneven application of its own app tracking policies, which the agency says is a potential abuse of the company’s dominant position in the online app market.

What’s the issue about? If you’re an iPhone or iPad user, you might have noticed a privacy pop-up (such as the one below) when installing third-party apps that try to track you. That’s a feature of Apple’s App Tracking Transparency (ATT) policy introduced two years ago. The problem is that the same interruption doesn’t apply to Apple itself when its own apps try to track you, so users are more likely to think twice before allowing a third-party app to track their activity. In addition, the advertising data passed on to third-party developers is inferior to the data that Apple possesses, putting third-party developers at a disadvantage.

Why is it relevant? Not only are Apple’s App Store practices being probed by the EU’s competition authority in at least three separate cases (there’s a fourth concerning mobile app payments, which continued last week), but the ATT policy itself is being investigated elsewhere, including the UK, Germany, and California.

// DATA PROTECTION //

GSMA gets GDPR fine for use of facial recognition during annual event

The Spanish data protection authority has confirmed that GSMA, the organiser of the annual Mobile World Congress (MWC), violated the GDPR. The fine of EUR 200,000 (USD 218,000) was also confirmed on appeal.

The authority found that GSMA failed to conduct the necessary impact assessments before deciding to collect biometric data on almost 20,000 participants for the MWC’s 2021 edition. Worse, providing sensitive biometric information was a mandatory step of the registration procedure, with no possibility to opt out.

Why is it relevant? If you’re an event organiser and you’re thinking of using facial recognition to automate participants’ entry to your event, think again. Despite our tendency to focus mainly on Big Tech’s use of our data, the GDPR covers more than that. Every person or organisation handling personal data, including sensitive details like biometrics, falls under the regulation.

// SHUTDOWNS //

Internet shutdowns amid unrest

Internet access was cut off in several regions of Pakistan last week, while access to Twitter, Facebook, and YouTube was entirely restricted in the wake of the arrest of Pakistan’s former Prime Minister Imran Khan.

In Sudan, ongoing conflict has led to energy shortages, which, in turn, led to prolonged internet outages.

Both shutdowns were confirmed by NetBlocks, a global internet monitoring service.

Trudeau slams Meta. Canada’s Prime Minister Justin Trudeau rebuked Meta for refusing to compensate publishers for news articles that appear on its platform, calling it ‘deeply irresponsible’. The day before, Google and Meta testified at the Senate’s Standing Committee on Transport and Communications hearing, urging revisions to the proposed online news bill (C-18) to avoid their departure from Canada.

16 May: OpenAI CEO Sam Altman and IBM Chief Privacy and Trust Officer Christina Montgomery appear at a hearing of the US Senate Judiciary Subcommittee on Privacy, Technology and the Law to discuss AI governance and oversight of rules. Watch live at 10:00 EDT (14:00 UTC).

16 May: The first ministerial meeting of the new EU-India Trade and Technology Council (TTC), launched in February, takes place in Brussels today.

16–17 May: The agenda of heads of government attending the 4th Council of Europe Summit in Reykjavik, Iceland, includes AI governance.

17 May: Happy World Telecommunication and Information Society Day! The celebration marks the signing of the first International Telegraph Convention in 1865 and the creation of the Geneva-based International Telecommunication Union (ITU).

19–21 May: The G7 Summit takes place in Hiroshima, Japan, this week. As Japan’s Prime Minister announced recently, AI will also be on the agenda. (As a refresher, read our coverage in Weekly #108: ChatGPT to debut on multilateral agenda at G7 Summit).

For more events, bookmark the observatory’s calendar of global policy events.

Ransomware attacks: A sobering reality check

The latest edition of Sophos’ annual report, State of Ransomware 2023, confirms that ransomware remains a major threat, especially for target-rich, resource-poor organisations. Although ransomware attacks haven’t increased compared to the previous year, a higher number of attacks now involve encrypting data (and then stealing it). Read the report.
