30 January – 6 February 2026

HIGHLIGHT OF THE WEEK

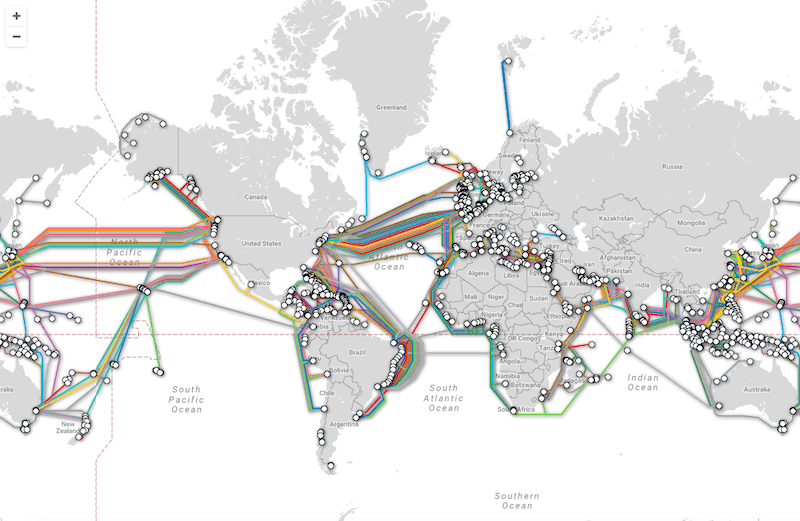

The Porto roadmap for more resilient global submarine cables

In early February 2026, Porto, Portugal, hosted the Second International Submarine Cable Resilience Summit, building on last year’s Abuja Summit. Under the high patronage of the President of Portugal and organised by ANACOM in partnership with ITU and the International Cable Protection Committee (ICPC), the event brought together representatives from over 70 countries, including governments, industry leaders, regulators, investors, and technical experts.

The summit concluded with the Porto Declaration on Submarine Cable Resilience, reaffirming the vital role of submarine cables in economic development, social inclusion, and digital transformation. The non-binding guidance calls for closer international cooperation to make submarine cables more resilient by simplifying deployment and repair rules, removing legal and regulatory barriers, and improving coordination among authorities. It emphasises investing in diverse and redundant cable routes—especially for small islands, landlocked countries, and underserved regions—while promoting industry best practices for risk management and protection. The recommendations also stress the development of skills and the use of new technologies to improve monitoring, design, and climate resilience.

Among the key outcomes of the summit were three sets of recommendations from the working groups of the International Advisory Body for Submarine Cable Resilience (IAB).

The recommendations on fostering connectivity and geographic diversity focus on expanding submarine cable connectivity to Small Island Developing States (SIDS), Landlocked Developing Countries (LLDCs), and underserved regions. Key measures include promoting blended-finance and public-private partnerships, de-risking investments through insurance and anchor-tenancy models, and encouraging early engagement among governments, operators, and financiers. Governments are urged to create clear regulatory frameworks, enable non-discriminatory access to landing stations, and incentivise shared infrastructure. Technical measures emphasise integrating branching units, ensuring route diversity and resiliency, conducting hazard assessments, and adopting protocols for seamless failover to backup systems. Capacity-building and the adoption of best practices aim to accelerate deployment while reducing costs and risks.

The recommendations on timely deployment and repair encourage governments to streamline permitting and approval processes through clear, transparent, and predictable frameworks, to reduce barriers such as customs and cabotage restrictions, especially for emergency repairs, and to designate a Single Point of Contact to coordinate across agencies. A voluntary global directory of these contact points would help industry navigate national requirements, while greater use of regional and intergovernmental forums is encouraged to promote regulatory alignment and cooperation, drawing on existing industry associations and best practices.

The recommendations also aim to strengthen the global repair ecosystem and public–private cooperation. They call for expanding and diversifying repair assets, including vessels and spare parts, particularly in high-risk or underserved regions; developing rapid-response capabilities for shallow-water incidents; and promoting shared maintenance models, joint vessel funding, and public–private partnership hubs. Mapping global repair gaps, encouraging long-term maintenance agreements, sharing best practices and data, investing in training and knowledge platforms, and establishing national public–private coordination mechanisms with 24/7 contacts, joint exercises, and practical operational tools are all seen as essential to improving resilience and speeding up repair responses.

The recommendations on risk identification, monitoring and mitigation encourage governments to develop evidence-based national strategies, in collaboration with cable owners, to improve visibility over cable faults and vulnerabilities while addressing data security and sharing. Knowledge exchange, coordinated through bodies such as the ICPC and regional cable protection committees, is seen as essential, alongside voluntary, standardised mechanisms for sharing anonymised information on cable delays, faults, and outages.

The recommendations also stress the importance of robust legal frameworks, enforcement, and maritime coordination. States are urged to clarify jurisdiction, implement relevant UNCLOS and IHO obligations, and involve law enforcement in investigations, supported by real-time data sharing and clearer liability standards. Greater integration of cable protection into maritime training, vessel inspection, and nautical charting is encouraged. Finally, resilience should be reinforced through regular stress tests and audits, stronger physical and digital security, better planning for decommissioning and redundancy (particularly for SIDS), and higher upfront investment to reduce long-term outage risks.

Why does it matter? With more than 99% of international data traffic carried by submarine cables and over 200 faults reported annually, the summit underscored the shared responsibility of governments and industry to safeguard this critical infrastructure. The outcomes of Porto are expected to guide policy, operational practice, and investment decisions globally, reinforcing a resilient, open, and reliable foundation for the digital economy.

IN OTHER NEWS LAST WEEK

This week in AI governance

The UN. The UN Secretary-General has submitted to the General Assembly a list of 40 distinguished individuals for consideration to serve on the Independent International Scientific Panel on Artificial Intelligence. The Panel’s main task will be ‘issuing evidence-based scientific assessments synthesising and analysing existing research related to the opportunities, risks and impacts of AI’, in the form of one annual ‘policy-relevant but non-prescriptive summary report’ to be presented to the Global Dialogue on AI Governance. The Panel will also ‘provide updates on its work up to twice a year to hear views through an interactive dialogue of the plenary of the General Assembly with the Co-Chairs of the Panel’.

The UN Children’s Fund (UNICEF) has called on governments to criminalise the creation, possession and distribution of AI-generated child sexual abuse content, warning of a sharp rise in sexually explicit deepfakes involving children and urging stronger safety-by-design practices and robust content moderation. A study cited by the agency found that at least 1.2 million children in 11 countries reported that their images had been manipulated into explicit AI deepfakes, with ‘nudification’ tools that strip or alter clothing posing heightened risks. UNICEF stressed that sexualised deepfakes of minors should be treated as child sexual abuse material under the law and urged digital platforms to prevent such content from circulating in the first place rather than merely removing it after the fact.

China. A court in eastern China has set an early legal precedent by limiting developer liability for AI hallucinations, ruling that developers are not automatically responsible for erroneous outputs. Judges treated AI developers as service providers, meaning that claimants must show both provider fault and actual injury caused by the erroneous output, a framework intended to balance innovation incentives with user protection.

International experts. The International AI Safety Report 2026, the second edition of the report, has been published. It synthesises evidence on AI capabilities (such as improved reasoning and task performance) alongside emerging risks such as deepfakes, cyber misuse and emotional reliance on AI companions, while noting that reliability remains uneven and that managing risks remains challenging. It aims to equip policymakers with a science-based foundation for regulatory and governance decisions without prescribing specific policies.

The UK. Britain is partnering with Microsoft, academics, and tech experts to develop a deepfake detection system to combat harmful AI-generated content. The government’s framework will standardise how detection tools are evaluated against real-world threats such as impersonation and sexual exploitation, building on recent legislation criminalising the creation of non-consensual intimate synthetic imagery. Officials cited a dramatic increase in deepfakes shared online in recent years as motivation for the initiative.

Grok. The cybercrime unit of the Paris prosecutor’s office has raided the French office of X as part of its investigation into the platform, which has been expanded to cover Grok. Elon Musk and former X CEO Linda Yaccarino have been summoned for voluntary interviews. X denied any wrongdoing and called the raid an ‘abusive act of law enforcement theatre’, while Musk described it as a ‘political attack’.

The UK Information Commissioner’s Office (ICO) has opened a formal investigation into X and xAI to examine whether Grok’s processing of personal data complies with UK data protection law, in particular the core principles of lawfulness, fairness and transparency, and whether its design and deployment included sufficient built-in protections to prevent personal data from being misused to create harmful or manipulated images.

Meanwhile, Indonesia has restored access to Grok after banning it in January, having received guarantees from X that stronger safeguards will be introduced to prevent further misuse of the AI tool.

Moltbook: Is the AI singularity here?

The rapid rise of Moltbook, a novel social platform designed specifically for AI agents to interact with one another, has ignited both excitement and scepticism.

Unlike traditional social media, where humans generate most content, Moltbook restricts posting and engagement to autonomous AI agents — human users can observe the activity but generally cannot post or comment themselves.

The platform quickly attracted attention due to its scale and rapid growth. Thousands of AI agents reportedly joined within days of its launch, creating a dynamic environment in which automated systems appeared to converse, debate, and even develop distinct communication patterns. The network relies on autonomous scheduling mechanisms that enable agents to post and interact without continuous human prompting.

The big question. Is Moltbook the AI singularity in action? According to recent research by the cloud security firm Wiz, the network is mostly humans running fleets of bots: about 17,000 people control 1.5 million registered agents, and the platform has no way to verify whether an account is genuinely an AI agent or a human running a script. So, Moltbook is a sandbox for AI agent interaction, not a step toward the singularity.

Child safety online: The bans club grows

The momentum on banning children from accessing social media continues, as Austria, Greece, Poland, Slovenia and Spain weigh legislative moves and enforcement tools.

In Spain, Prime Minister Pedro Sánchez’s government has proposed legislation that would ban social media access for users under 16, framing the measure as a necessary child-protection tool against addiction, exploitation, and harmful content. Under the draft plan, platforms would have to deploy mandatory age-verification systems designed as enforceable barriers rather than symbolic safeguards, signalling a shift from voluntary compliance by tech companies toward stronger regulatory enforcement. The proposals also include legal accountability for technology executives over unlawful or hateful material that remains online.

Poland’s ruling coalition is currently drafting a law that would ban social media use for children under 15. Lawmakers aim to finalise the draft by late February 2026 and potentially implement it by Christmas 2027. The government also plans to update its digital ID app, mObywatel, so that users can verify their age.

Slovenia is preparing draft legislation to ban minors under 15 from accessing social media, a move initiated by the Education Ministry.

In Austria, the government is actively debating a prohibition on social media use for children under 14. State Secretary for Digital Affairs Alexander Pröll confirmed the policy is under discussion with the aim of bringing it into force by the start of the school year in September 2026.

Greece is reportedly close to announcing a ban on social media use for children under 15. The Ministry of Digital Governance intends to rely on the Kids Wallet application, introduced last year, as a mechanism for enforcing the measure instead of developing a new control framework.

These individual national efforts are unfolding against a backdrop of increasing international regulatory coordination. On 3 February 2026, the European Commission met with Australia’s eSafety Commissioner and the UK’s Ofcom to share insights on age assurance measures, the technical and policy approaches used to verify users’ ages and enforce age-appropriate restrictions online. The meeting followed a joint communication signed at the end of 2025, in which the three regulators pledged ongoing collaboration to strengthen online safety for children, including exploring effective age-assurance technologies, enforcement strategies, and the role of data and independent research in regulatory action.

Zooming out. These initiatives across multiple nations confirm that Australia’s social media ban was not an isolated policy experiment, but rather the beginning of a global bandwagon effect. This momentum is particularly striking given that Australia’s own ban is not yet widely deemed a success—its effectiveness and broader impacts are still being studied and debated.

The developments come as a report by Australia’s eSafety Commissioner finds that tech giants, including Apple, Google, Meta, Microsoft, Discord, Snap, Skype and WhatsApp, have made only limited progress in combating online child sexual exploitation and abuse (CSEA), despite being legally required to report on their measures under Australia’s Online Safety Act.

TikTok’s addictive design violates DSA, preliminary investigation finds

The European Commission has preliminarily concluded that TikTok’s design violates the bloc’s Digital Services Act (DSA) due to features that the Commission considers addictive, such as infinite scroll, autoplay, push notifications, and its highly personalised recommender system.

According to the Commission, existing safeguards on TikTok—such as screen-time management and parental control tools—do not appear sufficient to mitigate the risks associated with these design choices.

At this stage, the Commission indicates that TikTok would need to modify the core design of its service. Possible measures include phasing out or limiting infinite scroll, introducing more effective screen-time breaks, including at night, and adjusting its recommender system to reduce addictive effects.

What’s next? TikTok can now review the Commission’s case file and respond to the preliminary findings while the European Board for Digital Services is consulted. If the Commission’s findings are confirmed, it may issue a non-compliance decision that could result in fines of up to 6% of the company’s global annual turnover.

Governments continue the push for digital sovereignty

Last week brought further developments pointing to digital sovereignty as the prevailing trend, one that has carried over from December 2025 into January and February 2026.

In Brussels, the European Commission has begun testing the open-source Matrix protocol as a possible alternative to proprietary messaging platforms for internal communication. Matrix’s federated architecture allows communications to be hosted on European infrastructure and governed under EU rules, aligning with broader efforts to build sovereign digital public services and reduce reliance on external platforms.

In France, the government has taken a hard line on control of satellite infrastructure, another cornerstone of digital sovereignty. Paris blocked the sale of ground-station assets owned by Eutelsat to an external investor, arguing that such infrastructure underpins both civilian and military space communications and must remain under domestic authority. French officials described these facilities as critical to strategic autonomy, in part because Eutelsat represents one of Europe’s few genuine competitors to US-led satellite constellations such as Starlink.

The big picture. As governments recalibrate their digital architectures, the balance between interoperability, security, and sovereign control will remain one of the defining tensions of 21st-century technology policy.

France declares a ‘year of resistance’ against Shein and other ultra-cheap online platforms

France is stepping up its pushback against ultra-low-cost online retailers, with Minister for Small and Medium Enterprises, Trade, Crafts, Tourism, and Purchasing Power Serge Papin declaring 2026 a ‘year of resistance’ to platforms such as Shein. The government argues that physical French shops face strict rules and liability, while global online marketplaces operate under looser standards, creating unfair competition.

Paris is now challenging a December court ruling that refused to suspend Shein’s French operations after inappropriate products were found on its marketplace.

At the same time, the government is preparing legislation that would give authorities the power to suspend online platforms without first seeking judicial approval, a significant expansion of executive oversight in the digital economy.

Fiscal measures are also being brought into play. From 1 March 2026, France plans to impose a €2 tax on small parcels to target the flood of low-value direct-to-consumer imports. This will be followed by a broader EU-level levy of €3 per parcel in the summer, aimed at narrowing the price advantage enjoyed by overseas platforms.

Why does it matter? Taken together, these steps point to a shift from targeting individual companies to tightening the rules for digital marketplaces as a whole, with potential implications beyond France.

China proposes exit bans for cybercriminals and expansion of enforcement powers

The Chinese Ministry of Public Security has drafted a new law that would allow authorities to impose exit bans of up to three years on convicted cybercriminals, as well as on individuals and entities that facilitate, support, or abet such activities.

The proposal would also allow authorities to bar entry to anyone convicted of cybercrime, prosecute Chinese nationals for offences committed abroad, and pursue foreign entities whose actions are seen as harming China’s national interests.

The draft also seeks to curb the spread of fake news and content that disrupts public order or violates social norms, reflecting a broader push to regulate online information.

Why does it matter? By imposing exit bans and targeting anyone connected to cybercrime—including service providers or foreign entities—the law could affect global businesses, cross-border collaborations, and the movement of tech professionals.

LAST WEEK IN GENEVA

11th Geneva Engage Awards

Diplo and the Geneva Internet Platform (GIP) organised the 11th edition of the Geneva Engage Awards, recognising the efforts of International Geneva actors in digital outreach and online engagement.

The awards honoured organisations in three main categories: international organisations, NGOs, and permanent representations. They assessed efforts in social media engagement, web accessibility, and AI leadership, reinforcing Geneva’s role as a trusted source of information amid rapid technological change.

In the International Organisations category, the United Nations Conference on Trade and Development (UNCTAD) won first place. Among non-governmental organisations, the International AIDS Society ranked first. In the Permanent Representations category, the Permanent Mission of the Republic of Indonesia to the United Nations Office and other international organisations in Geneva took first place.

The Web Accessibility Award went to the Permanent Mission of Canada, while the Geneva AI Leadership Award was presented to the International Telecommunication Union (ITU).

Honourable mentions were awarded to the World Economic Forum (WEF), the Permanent Delegation of the European Union to the United Nations Office in Geneva, the World Health Organization (WHO), and the United Nations High Commissioner for Refugees (UNHCR).

LOOKING AHEAD

On 10 February, Diplo, the Open Knowledge Foundation, and the Geneva Internet Platform will co-organise an online event, ‘Decoding the UN CSTD Working Group on Data Governance | Part 3’, which will review the progress and prospects of the UN Multi-Stakeholder Working Group on Data Governance. Discussions will cover the status of parallel working tracks, ongoing consultations for input, and expectations for the drafting of the group’s 2026 report.

The 2026 Munich Security Conference (MSC) will be held 13–15 February in Munich, Germany, bringing together officials, experts, and diplomats to discuss international security and foreign policy challenges, among them the security implications of technological advances. Ahead of the main event, the MSC Kick-off on 9 February in Berlin will introduce key topics and present the annual Munich Security Report.

The 39th African Union Summit will bring together Heads of State and Government of the African Union’s 55 member states in Addis Ababa to define continental priorities under Agenda 2063, Africa’s long-term development blueprint. While the official Summit theme for 2026 centres on water security and sustainable infrastructure, discussions are likely to feature digital transformation and AI.

READING CORNER

The borderless dream of cyberspace is over. AI has instead intensified the relevance of geography through three dimensions: geopolitics (control of infrastructure and data), geoeconomics (the market power of tech giants), and geoemotions (societal trust and sentiment toward technology). Tech companies now act as unprecedented geopolitical players, centralising power while states push for sovereignty and regulation. Success in the digital age will depend on mastering this new reality, in which technology actively redefines territory, power, and human emotion.

Do we really need frontier AI for everyday work? We’re bombarded with news about the latest frontier AI models and their ever-expanding capabilities. But the real question is whether these advances matter for most of us, most of the time.