17 – 24 October 2025

HIGHLIGHT OF THE WEEK

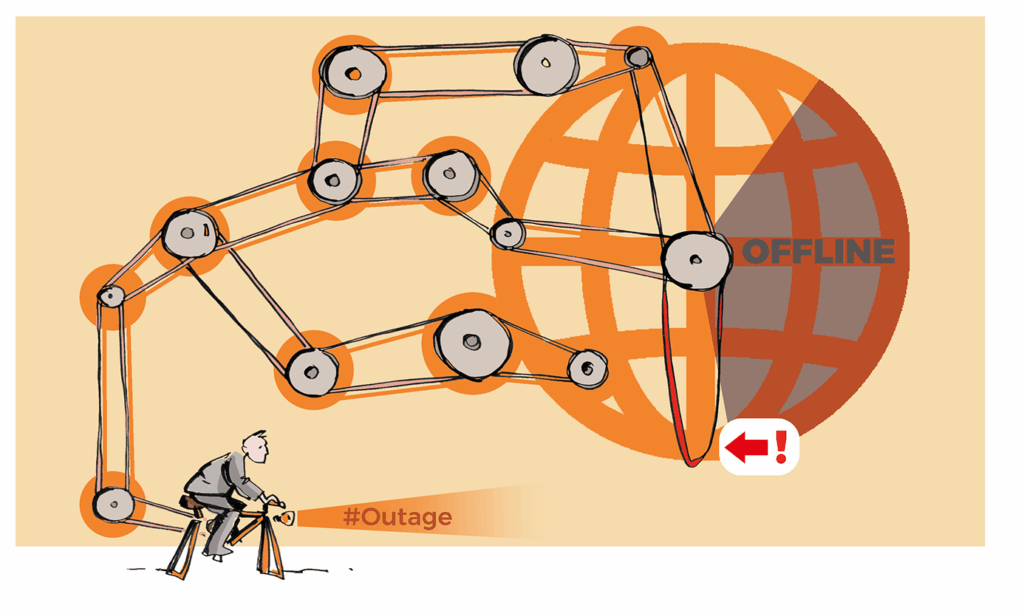

Beyond the blip: What the AWS outage reveals about cloud resilience

For a few hours on Monday, much of the internet flickered out of reach. What began as an issue in one Amazon Web Services (AWS) data centre — the US-East-1 region in Virginia — quickly cascaded into a global failure. A seemingly minor Domain Name System (DNS) configuration error triggered a chain reaction, knocking out the systems that handle traffic between servers.

The root cause was identified as a failure in the DNS resolution for internal endpoints, particularly affecting DynamoDB API endpoints. (Sidenote: DynamoDB is a cloud database that stores information for apps and websites, while API endpoints are the access points where these services read or write that data. During the AWS outage, these endpoints stopped working properly, preventing apps from retrieving or saving information.)

Because so many websites, apps and services — from social media platforms and games to banking and government portals — rely on AWS, the outage affected homes, offices, and governments worldwide.

AWS engineers rushed to isolate the fault, eventually restoring normal operations by late afternoon. But the damage had already been done. The outage exposed a fragile truth about today’s digital world: when one provider falters, much of the internet stumbles with it. An estimated one-third of the world’s online services run on AWS infrastructure.

The incident was more than a momentary blip; it highlighted several systemic risks in today’s digital infrastructure. First, the outage underscored the global dependence on a small number of hyperscale cloud providers. This dependence creates a single point of failure: a fault within one provider can disrupt thousands of services that rely on it. Second, the root cause, a DNS resolution error, showed how a single component in a complex system can trigger massive disruption. Third, although cloud providers offer multiple ‘availability zones’ for redundancy, the event demonstrated that a fault in one critical region can still have worldwide consequences.
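For readers who want to see what regional fallback might look like in practice, the following is a minimal sketch, not a description of how AWS or any affected service actually handles failover. It checks whether an endpoint's hostname can be resolved through DNS and, if not, moves on to the next region in a list. The list of regions, the function names, and the idea of falling back to eu-west-1 are assumptions made for illustration.

```python
import socket
from typing import Callable, Iterable, Optional

# Hypothetical endpoint list for illustration. The hostnames follow AWS's
# public regional naming pattern; the choice of fallback region is an assumption.
ENDPOINTS = [
    "dynamodb.us-east-1.amazonaws.com",
    "dynamodb.eu-west-1.amazonaws.com",
]


def resolves(host: str) -> bool:
    """Return True if the system's DNS resolver can look up `host`."""
    try:
        socket.getaddrinfo(host, 443)
        return True
    except socket.gaierror:
        # Raised when name resolution fails, as in the failure mode described above.
        return False


def first_resolvable(
    hosts: Iterable[str],
    check: Callable[[str], bool] = resolves,
) -> Optional[str]:
    """Return the first host that passes `check`, or None if none do."""
    for host in hosts:
        if check(host):
            return host
    return None
```

A fallback like this only helps if the data is already replicated to the second region and the application can tolerate the switch, which is part of why diversification is harder than it sounds.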

The lesson here is clear: as cloud computing becomes ever more central to the digital economy, resilience and diversification cannot be afterthoughts. Yet few true alternatives exist at AWS’s scale beyond Microsoft Azure and Google Cloud, with smaller rivals from IBM to Alibaba, and fledgling European players, far behind.

IN OTHER NEWS THIS WEEK

Child safety online: The global reckoning continues

The global reckoning over kids’ online safety continues. The last few weeks have shown that governments are moving beyond guiding tech companies and are considering direct regulation. The latest example comes from New Zealand, where a bill will be introduced in parliament to restrict social-media access for children under 16, requiring platforms to conduct age verification before allowing use.

A landmark trial on child safety online has just been given a start date. In January 2026, the Los Angeles County Superior Court will hear a consolidated case bringing together hundreds of claims from parents and school districts against Meta, Snap, TikTok, and YouTube. Plaintiffs allege that these platforms are addictive and expose young people to mental health risks, while providing ineffective parental controls and weak safety features. Meta founder Mark Zuckerberg, Instagram head Adam Mosseri, and Snap CEO Evan Spiegel have been ordered to testify in person, despite the companies’ arguments that it would be burdensome. The judge emphasised that direct testimony from CEOs is crucial to assess whether the companies were negligent in failing to take steps to mitigate harm.

Online gaming platforms, especially those popular with children, are also being re-examined. The platform Roblox (which allows user-generated worlds and chats) has come under fire for failing to protect its youngest users. In the USA, for example, the Florida Attorney General has issued criminal subpoenas, accusing Roblox of being ‘a breeding ground for predators’. In the Netherlands, the platform is facing an investigation by child-welfare authorities into how it safeguards young users. In Iraq, the government has banned Roblox nationwide, citing ‘sexual content, blasphemy and risks of online blackmail against minors.’

Social media and gaming aren’t the only fronts. AI chatbots are now under scrutiny for how they interact with minors. In Australia, the eSafety Commissioner has sent notices to four AI chatbot companies asking them to detail their child-protection steps — specifically, how they guard against exposure to sexual or self-harm material.

Meanwhile, Meta has announced new parental-control measures: parents will be able to disable their teens’ private chats with AI characters, block specific bots, and see which topics their teens are discussing, though not full transcripts. The controls are expected to roll out early next year in the USA, UK, Canada and Australia. They follow criticism that Meta’s AI-character chatbots enabled flirtatious or age-inappropriate conversations with minors.

For youth, the digital world is a primary space for social, emotional, and cognitive development, shaped by the content and interactions they find there. This new reality requires parents to look beyond simple screen time to understand the nature of their children’s online experiences. It also sends a clear message to tech companies about accountability and challenges regulators to create rules that are both enforceable and adaptable to rapid innovation.

AI, content, and the future of search

As AI continues to reshape how people access and interact with information, governments and tech companies are grappling with the opportunities—and dangers—of AI-driven content and search tools.

In Europe, the Dutch electoral watchdog recently warned voters against relying on AI chatbots for election information, highlighting concerns that such systems can inadvertently mislead citizens with inaccurate or biased content. Similarly, India proposed strict new IT rules requiring deepfakes and AI-generated content to be clearly labelled, aiming to curb misuse and increase transparency in the digital ecosystem.

At the same time, search engines, the traditional gateways to online knowledge, are experiencing a wave of innovation. OpenAI has unveiled its new browser, ChatGPT Atlas, which reimagines the way users interact with the web. Atlas introduces an ‘agent mode,’ a premium feature that can access a user’s laptop, click through websites, and explore the internet on their behalf, leveraging browsing history and user queries to provide guided, explainable results. OpenAI CEO Sam Altman described it simply: ‘It’s using the internet for you.’ Two days later, Microsoft unveiled an equivalent offering: Copilot Mode in its Edge browser. The design, functions and timing were notably similar, underscoring how the major platforms are rapidly converging on this next-generation AI browser paradigm.

Meanwhile, traditional browser landscapes are also shifting under geopolitical pressures. In Russia, Apple has been directed to preinstall a Russian search engine by default on devices, signalling the increasing role of national regulation in shaping how users access information.

These developments arrive against a backdrop of broader calls for global caution on AI. The Superintelligence Statement, endorsed by leading researchers and technologists, urges careful oversight and governance of advanced AI systems to prevent unintended harms—echoing the concerns of regulators and governments worldwide.

Malware, spyware, and tensions

Recent developments in cybersecurity have underscored the growing sophistication of digital threats and the geopolitical tensions they generate. F5 Networks recently suffered a major breach involving a stealthy backdoor called BRICKSTORM, linked by cybersecurity firm Resecurity to the China-based threat actor UNC5221. The malware, a self-contained executable, targets F5’s widely used BIG-IP systems, allowing attackers to maintain persistent access, exfiltrate data, and move laterally across networks. With over 250,000 BIG-IP systems exposed online, the potential impact of this exploit is significant.

In a separate escalation, China has accused the USA of cyberattacks against its National Time Service Center (NTSC), a critical infrastructure facility that maintains the country’s official time. According to China’s Ministry of State Security, the US National Security Agency allegedly exploited vulnerabilities in mobile messaging services between 2022 and 2024 to infiltrate NTSC systems, aiming to steal state secrets and map network infrastructure. China claims to have presented evidence of these actions, framing them as part of broader US cyber operations worldwide.

Meanwhile, a landmark US federal court ruling has permanently barred the Israeli spyware company NSO Group from targeting WhatsApp users. The case relates to the 2019 Pegasus spyware incident, which compromised over 1,400 devices without user interaction. The injunction prohibits NSO from accessing WhatsApp’s systems, effectively restricting the company’s operations on the platform. While NSO maintains that the ruling does not affect its clients, WhatsApp emphasised that it now prevents the firm from targeting its users.

LAST WEEK IN GENEVA

151st Assembly of the IPU

The 151st Assembly of the IPU took place in Geneva, Switzerland, on 19–23 October 2025. Under the theme ‘Upholding humanitarian norms and supporting humanitarian action in times of crisis,’ the Assembly held its general debate and reviewed progress on previous resolutions.

Discussions also addressed the protection of victims of illegal international adoption, considered amendments to the IPU Statutes and Rules, and set priorities for upcoming committee work. The meeting provided a platform for dialogue on humanitarian, democratic, and governance issues among national parliaments.

16th Session of the UN Conference on Trade and Development (UNCTAD16)

The 16th UN Conference on Trade and Development (UNCTAD16) took place on 20–23 October 2025 in Geneva, bringing together world leaders, ministers, and experts under the theme ‘Shaping the future: Driving economic transformation for equitable, inclusive and sustainable development.’

Over four days and 40 high-level sessions, delegates discussed trade, investment, technology, and sustainability, setting UNCTAD’s direction for the next four years.

The opening ceremony featured UNCTAD Secretary-General Rebeca Grynspan, Swiss Federal Councillor Guy Parmelin, and Tatiana Valovaya, along with messages from Prime Minister Mia Amor Mottley, President José Ramos-Horta, Prime Minister Phạm Minh Chính, and UNGA President Annalena Baerbock.

A highlight was the keynote address by UN Secretary-General António Guterres and the launch of the Sevilla Forum on Debt, a new platform for dialogue on debt sustainability and innovation.

LOOKING AHEAD

The UN Convention against Cybercrime will be signed in Hanoi, Viet Nam, on 25–26 October 2025. The two-day event will include a high-level opening and signing ceremony, co-chaired by Viet Nam’s Prime Minister and the UN Secretary-General, followed by plenary statements from member states and international organisations. A series of side events and roundtables will address topics such as AI-driven scams, online abuse, evidence collection in cryptocurrency investigations, and capacity-building for cybercrime prevention.

On 27 October, UNCTAD will host a webinar launching its Technical Note on Artificial Intelligence and Consumer Protection and a Checklist for consumer protection agencies deploying AI.

The dialogue will explore issues related to the interplay between AI, human agency, and human rights; ways to achieve safe, secure, and trustworthy AI; and the role of International Geneva in advancing the governance of such AI systems.

The Paris Peace Forum 2025 will take place on 29–30 October in Paris under the theme ‘New Coalitions for Peace, People, and the Planet.’ Five sessions will focus on digital and cyber issues, including cyber peace, digital media in conflict resolution, disinformation, multistakeholder leadership, and the launch of the Common Good Cyber Fund.

The AI for Good Impact Africa event will take place on 31 October in Johannesburg, coinciding with AI Expo Africa. Organised by ITU, it brings together innovators, policymakers, and stakeholders to showcase AI solutions for societal challenges, build local capacity, and promote international AI standards through keynotes, panels, pitching sessions, and hands-on workshops.

READING CORNER

AI is reshaping the justice system with unprecedented efficiency, but true progress depends on whether humanity is ready to balance innovation with responsibility and ethical judgement.