December 2025 and January 2026 in retrospect

This month’s newsletter looks back on December 2025 and January 2026 and explores the forces shaping the digital landscape in 2026:

WSIS+20 review: A close look at the outcome document and high-level review meeting, and what it means for global digital cooperation.

Child safety online: Momentum on bans continues, while landmark US trials examine platform addiction and responsibility.

Digital sovereignty: Governments are reassessing data, infrastructure, and technology policies to limit foreign exposure and build domestic capacity.

Grok Shock: Regulatory scrutiny hits Grok, X’s AI tool, after reports of non-consensual sexualised and deepfake content.

Geneva Engage Awards: Highlights from the 11th edition, recognising excellence in digital outreach and engagement in International Geneva.

Annual AI and digital forecast: We highlight the 10 trends and events we expect to shape the digital landscape in the year ahead.

Snapshot: The developments that made waves in December and January

Global digital governance

The USA has withdrawn from a wide range of international organisations, conventions and treaties it considers contrary to its interests, including dozens of UN bodies and non-UN entities. In the technology and digital governance space, it explicitly dropped two initiatives: the Freedom Online Coalition and the Global Forum on Cyber Expertise. The implications of withdrawing from UNCTAD and the UN Department of Economic and Social Affairs remain unclear, given their links to processes such as WSIS, follow-up to Agenda 2030, the Internet Governance Forum, and broader data-governance work.

Technologies

US President Trump signed a presidential proclamation imposing a 25% tariff on certain advanced computing and AI‑oriented chips, including high‑end products such as Nvidia’s H200 and AMD’s MI325X, under a national security review. Officials described the measure as a ‘phase one’ step aimed at strengthening domestic production and reducing dependence on foreign manufacturers, particularly those in Taiwan, while also capturing revenue from imports that do not contribute to US manufacturing capacity. The administration suggested that further actions could follow depending on how negotiations with trading partners and the industry evolve.

The USA and Taiwan announced a landmark semiconductor-focused trade agreement. Under the deal, tariffs on a broad range of Taiwanese exports will be reduced or eliminated, while Taiwanese semiconductor companies, including leading firms like TSMC, have committed to invest at least $250 billion in US chip manufacturing, AI, and energy projects, supported by an additional $250 billion in government-backed credit.

The protracted legal and political dispute over Nexperia, a Netherlands‑based semiconductor manufacturer owned by China’s Wingtech Technology, also continues. The dispute erupted in autumn 2025, when Dutch authorities briefly seized control of Nexperia, citing national security and concerns about potential technology transfers to China. Nexperia’s European management and Wingtech representatives are now squaring off in an Amsterdam court, which is deciding whether to launch a formal investigation into alleged mismanagement. The court is set to make a decision within four weeks.

Reports say Chinese scientists have built a prototype extreme ultraviolet (EUV) lithography machine, a technology long dominated by ASML. The Dutch firm is the sole supplier of EUV systems and a major chokepoint in advanced chipmaking. EUV tools print ultra-fine circuit patterns onto silicon wafers and are essential for producing the cutting-edge chips used in AI, high-performance computing and modern weapons. The prototype is reportedly already generating EUV light but has not yet produced working chips, and the effort is said to include former ASML engineers who reverse-engineered key components.

Canada has launched Phase 1 of the Canadian Quantum Champions Program, part of a $334.3 million Budget 2025 investment. Phase 1 provides up to $92 million in initial funding, with up to $23 million each for Anyon Systems, Nord Quantique, Photonic and Xanadu, to advance fault-tolerant quantum computers and keep key capabilities in Canada. Progress will be assessed through a new benchmarking platform led by the National Research Council.

The USA has reportedly paused implementation of its Tech Prosperity Deal with the UK, a pact agreed during President Trump’s September visit to London that aimed to deepen cooperation on frontier technologies such as AI and quantum and included planned investment commitments by major US tech firms. According to the Financial Times, the suspension reflects broader US frustration with UK positions on wider trade matters, with Washington seeking UK concessions on non-tariff barriers, especially regulatory standards for food and industrial goods, before moving the technology agreement forward.

At the 16th EU–India Summit in New Delhi, the EU and India moved into a new phase of cooperation by concluding a landmark Free Trade Agreement and launching a Security and Defence Partnership, signalling closer alignment amid global economic and geopolitical pressures. The trade deal aims to cut tariff and non-tariff barriers and strengthen supply chains, while the security track expands cooperation on areas such as maritime security, cyber and hybrid threats, counterterrorism, space and defence industrial collaboration.

South Korea and Italy have agreed to deepen their strategic partnership by expanding cooperation in high-technology fields, especially AI, semiconductors and space. Officials framed the effort as a way to boost long-term competitiveness through closer research collaboration, talent exchanges and joint development initiatives, although specific programmes have not yet been detailed publicly.

Infrastructure

The European Commission has adopted its proposal for a Digital Networks Act, which aims to reduce fragmentation through limited spectrum harmonisation and an EU-wide numbering scheme for cross-border business services, while stopping short of a truly unified telecoms market. The main obstacle remains resistance from member states that want to retain control over spectrum management, especially for 4G, 5G and Wi-Fi. As a result, the package is an incremental step rather than a structural overhaul, despite long-running calls for deeper integration.

The Second International Submarine Cable Resilience Summit concluded with the Porto Declaration on Submarine Cable Resilience, which reaffirms the critical role of submarine telecommunications cables for global connectivity, economic development and digital inclusion. Building on the 2025 Abuja Declaration with further practical guidance, the declaration sets out non-binding recommendations to strengthen international cooperation and resilience: streamlining permitting and repair, improving legal and regulatory frameworks, promoting geographic diversity and redundancy, adopting best practices for risk mitigation, enhancing cable protection planning, and boosting capacity-building and innovation. Together, these measures aim to support more reliable and inclusive global digital infrastructure.

Cybersecurity

The Netherlands Authority for Consumers and Markets (Autoriteit Consument & Markt, ACM) has opened a formal investigation into whether Roblox is taking sufficient measures to protect children and teenagers who use the service. The probe, which could take up to a year, will examine Roblox’s compliance with the European Union’s Digital Services Act (DSA), which obliges online services to implement appropriate and proportionate measures to ensure safety, privacy and security for underage users.

Meta, which has come under intense scrutiny from regulators and civil society over chatbots that previously permitted provocative or exploitative conversations with minors, is pausing teenagers’ access to its AI characters globally while it redesigns the experience with enhanced safety features and parental controls. The company said teens will be blocked from interacting with certain AI personas until a revised platform is ready, guided by principles akin to a PG-13 rating system to limit exposure to inappropriate content.

ETSI has issued a new standard, EN 304 223, setting cybersecurity requirements for AI systems across their full lifecycle, addressing AI-specific threats like data poisoning and prompt injection, with additional guidance for generative-AI risks expected in a companion report.

The EU has proposed a new cybersecurity package to tighten supply-chain security, expand and speed up certification, streamline NIS2 compliance and reporting, and give ENISA stronger operational powers such as threat alerts, vulnerability management and ransomware support.

A group of international cybersecurity agencies has released new technical guidance addressing the security of operational technology (OT) used in industrial and critical infrastructure environments. The guidance, led by the UK’s National Cyber Security Centre (NCSC), provides recommendations for securely connecting industrial control systems, sensors, and other operational equipment that support essential services. According to the co-authoring agencies, industrial environments are being targeted by a range of actors, including cybercriminal groups and state-linked actors.

The UK has launched a Software Security Ambassadors Scheme led by the Department for Science, Innovation and Technology and the National Cyber Security Centre, asking participating organisations to promote a new Software Security Code of Practice across their sectors and improve secure development and procurement to strengthen supply-chain resilience.

British and Chinese security officials have agreed to establish a new cyber dialogue forum to discuss cyberattacks and manage digital threats, aiming to create clearer communication channels, reduce the risk of miscalculation in cyberspace, and promote responsible state behaviour in digital security.

Economic

EU ministers have urged faster progress toward the bloc’s 2030 digital targets, calling for stronger digital skills, wider tech adoption and simpler rules for SMEs and start-ups while keeping data protection and fundamental rights intact, alongside tougher, more consistent enforcement on online safety, illegal content, consumer protection and cyber resilience.

South Korea has approved legal changes to recognise tokenised securities and set rules for issuing and trading them within the regulated capital-market system, with implementation planned for January 2027 after a preparation period. The framework allows eligible issuers to create blockchain-based debt and equity products, while trading would run through licensed intermediaries under existing investor-protection rules.

Russia is keeping the ruble as the only legal payment method and continues to reject cryptocurrencies as money, but lawmakers are moving toward broader legal recognition of crypto as an asset, including a proposal to treat it as marital property in divorce cases, alongside limited, regulated use of crypto in foreign trade.

The UK plans to bring cryptoassets fully within its financial regulatory perimeter. From 2027, crypto firms will be regulated by the Financial Conduct Authority under rules similar to those for traditional financial products, with the aim of boosting consumer protection, transparency and market confidence while supporting innovation and cracking down on illicit activity. The UK is also seeking to shape international standards through cooperation such as a UK–US taskforce.

Hong Kong’s proposed expansion of crypto licensing is drawing industry concern that stricter thresholds could force more firms into full licensing, raise compliance costs and lack a clear transition period, potentially disrupting businesses while applications are processed.

Poland’s effort to introduce a comprehensive crypto law has reached an impasse after the Sejm failed to overturn President Karol Nawrocki’s veto of a bill meant to align national rules with the EU’s MiCA framework. The government argued the reform was essential for consumer protection and national security, but the president rejected it as overly burdensome and a threat to economic freedom. In the aftermath, Prime Minister Donald Tusk has pledged to renew efforts to pass crypto legislation.

In Norway, Norges Bank has concluded that current conditions do not justify launching a central bank digital currency, arguing that Norway’s payment system remains secure, efficient and well-tailored to users. The bank maintains that the Norwegian krone continues to function reliably, supported by strong contingency arrangements and stable operational performance. Governor Ida Wolden Bache said the assessment reflects timing rather than a rejection of CBDCs, noting the bank could introduce one if conditions change or if new risks emerge in the domestic payments landscape.

EU member states have agreed to introduce a new customs duty on low-value e-commerce imports, starting 1 July 2026. Under the agreement, a customs duty of €3 per item will be applied to parcels valued at less than €150 imported directly into the EU from third countries. The temporary duty is intended to bridge the gap until the EU Customs Data Hub, a broader customs reform initiative designed to provide comprehensive import data and enhance enforcement capacity, becomes fully operational in 2028.

Development

UNESCO expressed growing concern over the expanding use of internet shutdowns by governments seeking to manage political crises, protests, and electoral periods. Recent data indicate that more than 300 shutdowns have occurred across 54 countries over the past two years, with 2024 the most severe year since 2016. According to UNESCO, restricting online access undermines the universal right to freedom of expression and weakens citizens’ ability to participate in social, cultural, and political life. Access to information remains essential not only for democratic engagement but also for rights linked to education, assembly, and association, particularly during moments of instability. Internet disruptions also place significant strain on journalists, media organisations, and public information systems that distribute verified news.

The OECD says generative AI is spreading quickly in schools, but results are mixed: general-purpose chatbots can improve the polish of students’ work without boosting exam performance, and may weaken deep learning when they replace ‘productive struggle.’ It argues that education-specific AI tools designed around learning science, used as tutors or collaborative assistants, are more likely to improve outcomes and should be prioritised and rigorously evaluated.

The UK will trial AI tutoring tools in secondary schools, aiming for nationwide availability by the end of 2027. Teachers will be involved in co-designing and testing the tools, and safety, reliability and alignment with the National Curriculum are treated as core requirements. The initiative is intended to provide personalised support and help narrow attainment gaps, with up to 450,000 disadvantaged pupils in years 9–11 potentially benefiting each year, and it positions the tools as a supplement to, not a replacement for, classroom teaching.

Sociocultural

The EU has designated WhatsApp a Very Large Online Platform under the Digital Services Act (DSA) after it reported more than 51 million monthly users in the bloc, triggering tougher obligations to assess and mitigate systemic risks such as disinformation and to strengthen protections for minors and vulnerable users. The European Commission will directly supervise compliance, with potential fines of up to 6% of global annual turnover, and WhatsApp has until mid-May to align its policies and risk assessments with the DSA requirements.

The EU has issued its first DSA non-compliance decision against X, fining the platform €120 million for misleading paid ‘blue check’ verification, weak ad transparency due to an incomplete advertising repository, and barriers that restrict researchers’ access to public data. X must propose fixes for the checkmark system within 60 working days and submit a broader plan on data access and advertising transparency within 90 days, or face further enforcement.

The EU has accepted binding commitments from TikTok under the DSA to make ads more transparent, including showing ads exactly as users see them, adding targeting and demographic details, updating its ad repository within 24 hours, and expanding tools and access for researchers and the public, with implementation deadlines ranging from two to twelve months.

WhatsApp is facing intensifying pressure from Russian authorities, who argue the service does not comply with national rules on data storage and cooperation with law enforcement, while Meta has no legal presence in Russia and rejects requests for user information. Officials are promoting state-backed alternatives, such as the national messaging app Max, and critics warn that targeting WhatsApp would curb private communications rather than address genuine security threats.

AI governance in December and January

National AI regulation

Vietnam. Vietnam’s National Assembly has passed the country’s first comprehensive AI law, establishing a risk management regime, sandbox testing, a National AI Development Fund and startup voucher schemes to balance strict safeguards with innovation incentives. The 35‑article legislation — largely inspired by EU and other models — centralises AI oversight under the government and will take effect in March 2026.

The UK. More than 100 UK parliamentarians from across parties are pushing the government to adopt binding rules on advanced AI systems, saying current frameworks lag behind rapid technological progress and pose risks to national and global security. The cross‑party campaign, backed by former ministers and figures from the tech community, seeks mandatory testing standards, independent oversight and stronger international cooperation — challenging the government’s preference for existing, largely voluntary regulation.

The USA. US President Donald Trump has signed an executive order targeting what the administration views as the most onerous and excessive state-level AI laws. The White House argues that a growing patchwork of state rules threatens to stymie innovation, burden developers, and weaken US competitiveness.

To address this, the order creates an AI Litigation Task Force to challenge state laws deemed to obstruct the order’s stated policy: sustaining and enhancing US global AI dominance through a minimally burdensome national policy framework for AI. The Commerce Department is directed to review all state AI regulations within 90 days to identify those that impose undue burdens. The order also uses federal funding as leverage, allowing certain grants to be conditioned on states aligning with national AI policy.

National plans and investments

Russia. Russia is advancing a nationwide plan to expand the use of generative AI across public administration and key sectors, with a proposed central headquarters to coordinate ministries and agencies. Officials see increased deployment of domestic generative systems as a way to strengthen sovereignty, boost efficiency and drive regional economic development, prioritising locally developed AI over foreign platforms.

Qatar. Qatar has launched Qai, a new national AI company designed to accelerate the country’s digital transformation and global AI footprint. Qai will provide high‑performance computing and scalable AI infrastructure, working with research institutions, policymakers and partners worldwide to promote the adoption of advanced technologies that support sustainable development and economic diversification.

The EU. The EU has advanced an ambitious gigafactory programme to strengthen AI leadership by scaling up infrastructure and computational capacity across member states. This involves expanding a network of AI ‘factories’ and antennas that provide high‑performance computing and technical expertise to startups, SMEs and researchers, integrating innovation support alongside regulatory frameworks like the AI Act.

Australia. Australia has sealed a US$4.6 billion deal for a new AI hub in western Sydney, partnering with private sector actors to build an AI campus with extensive GPU-based infrastructure capable of supporting advanced workloads. The investment forms part of broader national efforts to build domestic AI innovation and computational capacity.

Morocco. Morocco is preparing to unveil ‘Maroc IA 2030’, a national AI roadmap designed to structure the country’s AI ecosystem and strengthen digital transformation. The plan aims to add an estimated $10 billion to GDP by 2030, create tens of thousands of AI-related jobs, and integrate AI across industry and government, including modernising public services and strengthening technological autonomy. Central to the strategy is the launch of the JAZARI ROOT Institute, the core hub of a planned network of AI centres of excellence that will bridge research, regional innovation, and practical deployment; additional initiatives include sovereign data infrastructure and partnerships with global AI firms. Authorities also emphasise building national skills and trust in AI, with governance structures and legislative proposals expected to accompany implementation.

Capacity building initiatives

The USA. The Trump administration has unveiled the US Tech Force, an initiative aimed at rebuilding the US government’s technical capacity after deep workforce reductions, with a particular focus on AI and digital transformation.

According to the official TechForce.gov website, participants will work on high-impact federal missions, addressing large-scale civic and national challenges. The programme positions itself as a bridge between Silicon Valley and Washington, encouraging experienced technologists to bring industry practices into government environments. The programme reflects growing concern within the administration that federal agencies lack the in-house expertise needed to deploy and oversee advanced technologies, especially as AI becomes central to public administration, defence, and service delivery.

Taiwan. Taiwan’s government has set an ambitious goal to train 500,000 AI professionals by 2040 as part of its long-term AI development strategy, backed by a NT$100 billion (approximately US$3.2 billion) venture fund and a national computing centre initiative. President Lai Ching-te announced the target at a 2026 AI Talent Forum in Taipei, highlighting the need for broad AI literacy across disciplines to sustain national competitiveness, support innovation ecosystems, and accelerate digital transformation in small and medium-sized enterprises. The government is introducing training programmes for students and public servants and emphasising cooperation between industry, academia, and government to develop a versatile AI talent pipeline.

El Salvador. El Salvador has partnered with xAI to launch what the partners describe as the world’s first nationwide AI-powered education programme, deploying the Grok model across more than 5,000 public schools to deliver personalised, curriculum-aligned tutoring to over one million students over the next two years. The initiative will support teachers with adaptive AI tools while co-developing methodologies, datasets and governance frameworks for responsible AI use in classrooms, aiming to close learning gaps and modernise the education system. President Nayib Bukele described the move as a leap forward in national digital transformation.

UN AI Resource Hub. The UN AI Resource Hub has gone live as a centralised platform aggregating AI activities and expertise across the UN system. Presented by the UN Inter-Agency Working Group on AI, the platform was developed jointly by UNDP, UNESCO and ITU. It enables stakeholders to explore initiatives by agency, country and Sustainable Development Goal (SDG). The hub supports inter-agency collaboration, capacity building for UN member states, and greater coherence in AI governance and terminology.

Partnerships

Canada‑EU. Canada and the EU have expanded their digital partnership on AI and security, committing to deepen cooperation on trusted AI systems, data governance and shared digital infrastructure. This includes memoranda aimed at advancing interoperability, harmonising standards and fostering joint work on trustworthy digital services.

The International Network for Advanced AI Measurement, Evaluation and Science. The global network has strengthened cooperation on benchmarking AI governance progress, focusing on metrics that help compare national policies, identify gaps and support evidence‑based decision‑making in AI regulation internationally. This network includes Australia, Canada, the EU, France, Japan, Kenya, the Republic of Korea, Singapore, the UK and the USA. The UK has assumed the role of Network Coordinator.

BRICS. Talks on AI governance within the BRICS bloc have deepened as member states seek to harmonise national approaches and agree on shared principles for ethical, inclusive and cooperative AI deployment. However, according to Russia’s BRICS sherpa, Deputy Foreign Minister Sergey Ryabkov, it is still premature to talk about the creation of an AI-BRICS.

ASEAN-Japan. Japan and the Association of Southeast Asian Nations (ASEAN) have agreed to deepen cooperation on AI, formalised in a joint statement at a digital ministers’ meeting in Hanoi. The partnership focuses on joint development of AI models, aligning related legislation, and strengthening research ties to enhance regional technological capabilities and competitiveness amid global competition from the United States and China.

Pax Silica. A diverse group of nations has announced Pax Silica, a new partnership aimed at building secure, resilient, and innovation-driven supply chains for the technologies that underpin the AI era, covering critical minerals and energy inputs, advanced manufacturing, semiconductors, AI infrastructure and logistics. Analysts warn that views may diverge if Washington pushes for tougher measures targeting China, potentially increasing political and economic pressure on participating nations. However, the USA, which leads the initiative, has clarified that it will focus on strengthening supply chains among members rather than penalising non-members such as China.

Content governance

Italy. Italy’s antitrust authority has formally closed its investigation into the Chinese AI developer DeepSeek after the company agreed to binding commitments to make risks from AI hallucinations — false or misleading outputs — clearer and more accessible to users. Regulators stated that DeepSeek will enhance transparency, providing clearer warnings and disclosures tailored to Italian users, thereby aligning its chatbot deployment with local regulatory requirements. If these conditions aren’t met, enforcement action under Italian law could follow.

Spain. Spain’s cabinet has approved draft legislation aimed at curbing AI-generated deepfakes and tightening consent rules on the use of images and voices. The bill sets 16 as the minimum age for consenting to image use and prohibits the reuse of online images or AI-generated likenesses without explicit permission — including for commercial purposes — while allowing clear, labelled satire or creative works involving public figures. The reform reinforces child protection measures and mirrors broader EU plans to criminalise non-consensual sexual deepfakes by 2027. Prosecutors are also examining whether certain AI-generated content could qualify as child pornography under Spanish law.

Malta. The Maltese government is preparing tougher legal measures to tackle abuses of deepfake technology. Current legislation is under review with proposals to introduce penalties for the misuse of AI in harassment, blackmail, and bullying cases, building on existing cyberbullying and cyberstalking laws by extending similar protections to harms stemming from AI-generated content. Officials emphasise that while AI adoption is a national priority, robust safeguards against abusive use are essential to protect individuals and digital rights.

China. China’s cyberspace regulator has proposed new limits on AI ‘boyfriend’ and ‘girlfriend’ chatbots. The draft rules require platforms to intervene when users of emotionally interactive AI services express suicidal or self-harm tendencies, while strengthening protections for minors and restricting harmful content. The regulator defines these services as AI systems that simulate human personality traits and emotional interaction.

Note to readers: We’ve reported separately on the January 2026 backlash against Grok, following claims it was used to generate non-consensual sexualised and deepfake images.

Security

The UN. The UN has raised the alarm about AI-driven threats to child safety, highlighting how AI systems can accelerate the creation, distribution, and impact of harmful content, including sexual exploitation, abuse, and manipulation of children online. As smart toys, chatbots, and recommendation engines increasingly shape youth digital experiences, the absence of adequate safeguards risks exposing a generation to novel forms of exploitation and harm.

International experts. The second International AI Safety Report finds that AI capabilities continue to advance rapidly, with leading systems outperforming human experts in areas such as mathematics, science and some autonomous software tasks, although performance remains uneven across tasks. Adoption is swift but varies widely around the world. Rising harms include deepfakes, misuse in fraud and non‑consensual content, and systemic impacts on autonomy and trust. Technical safeguards and voluntary safety frameworks have improved but remain incomplete, and effective multi‑layered risk management is still lacking.

The EU and the USA. The European Medicines Agency (EMA) and the US Food and Drug Administration (FDA) have released ten principles for good AI practice in the medicines lifecycle. The guidelines provide broad direction for AI use in research, clinical trials, manufacturing, and safety monitoring. The principles are relevant to pharmaceutical developers and to marketing authorisation applicants and holders, and are intended to form the basis for future AI guidance in different jurisdictions.

From review to recalibration: What the WSIS+20 outcome means for global digital governance

The WSIS+20 review, conducted 20 years after the World Summit on the Information Society, concluded in December 2025 in New York with the adoption of a high-level outcome document by the UN General Assembly. The review assesses progress toward building a people-centred, inclusive, and development-oriented information society, highlights areas needing further effort, and outlines measures to strengthen international cooperation.

A major institutional decision was to make the Internet Governance Forum (IGF) a permanent UN body. The outcome also includes steps to strengthen its functioning: broadening participation—especially from developing countries and underrepresented communities—enhancing intersessional work, supporting national and regional initiatives, and adopting innovative and transparent collaboration methods. The IGF Secretariat is to be strengthened, sustainable funding ensured, and annual reporting on progress provided to UN bodies, including the Commission on Science and Technology for Development (CSTD).

Negotiations addressed the creation of a governmental segment at the IGF. While some member states supported this as a way to foster more dialogue among governments, others were concerned it could compromise the IGF’s multistakeholder nature. The final compromise encourages dialogue among governments with the participation of all stakeholders.

Beyond the IGF, the outcome confirms the continuation of the annual WSIS Forum and calls on the United Nations Group on the Information Society (UNGIS) to become more efficient and agile and to broaden its membership.

WSIS action line facilitators are tasked with creating targeted implementation roadmaps linking WSIS action lines to SDGs and Global Digital Compact (GDC) commitments.

UNGIS is requested to prepare a joint implementation roadmap to strengthen coherence between WSIS and the Global Digital Compact, to be presented to CSTD in 2026. The Secretary-General will submit biennial reports on WSIS implementation, and the next high-level review is scheduled for 2035.

The document places closing digital divides at the core of the WSIS+20 agenda. It addresses multiple aspects of digital exclusion, including accessibility, affordability, quality of connectivity, inclusion of vulnerable groups, multilingualism, cultural diversity, and connecting all schools to the internet. It stresses that connectivity alone is insufficient, highlighting the importance of skills development, enabling policy environments, and human rights protection.

The outcome also emphasises open, fair, and non-discriminatory digital development, including predictable and transparent policies, legal frameworks, and technology transfer to developing countries. Environmental sustainability is highlighted, with commitments to leverage digital technologies while addressing energy use, e-waste, critical minerals, and international standards for sustainable digital products.

Human rights and ethical considerations are reaffirmed as fundamental. The document stresses that rights online mirror those offline, calls for safeguards against adverse impacts of digital technologies, and urges the private sector to respect human rights throughout the technology lifecycle. It addresses online harms such as violence, hate speech, misinformation, cyberbullying, and child sexual exploitation, while promoting media freedom, privacy, and freedom of expression.

Capacity development and financing are recognised as essential. The document highlights the need to strengthen digital skills, technical expertise, and institutional capacities, including in AI. It invites the International Telecommunication Union to establish an internal task force to assess gaps and challenges in financial mechanisms for digital development and to report recommendations to CSTD by 2027. It also calls on the UN Inter-Agency Working Group on AI to map existing capacity-building initiatives, identify gaps, and develop programmes such as an AI capacity-building fellowship for government officials, as well as research programmes.

Finally, the outcome underscores the importance of monitoring and measurement, requesting a systematic review of existing ICT indicators and methodologies by the Partnership on Measuring ICT for Development, in cooperation with action line facilitators and the UN Statistical Commission. The Partnership is tasked with reporting to CSTD in 2027. Overall, the CSTD, ECOSOC, and the General Assembly maintain a central role in WSIS follow-up and review.

The final text reflects a broad compromise and was adopted without a vote, though some member states and groups raised concerns about certain provisions.

Child safety online: Bans and trials

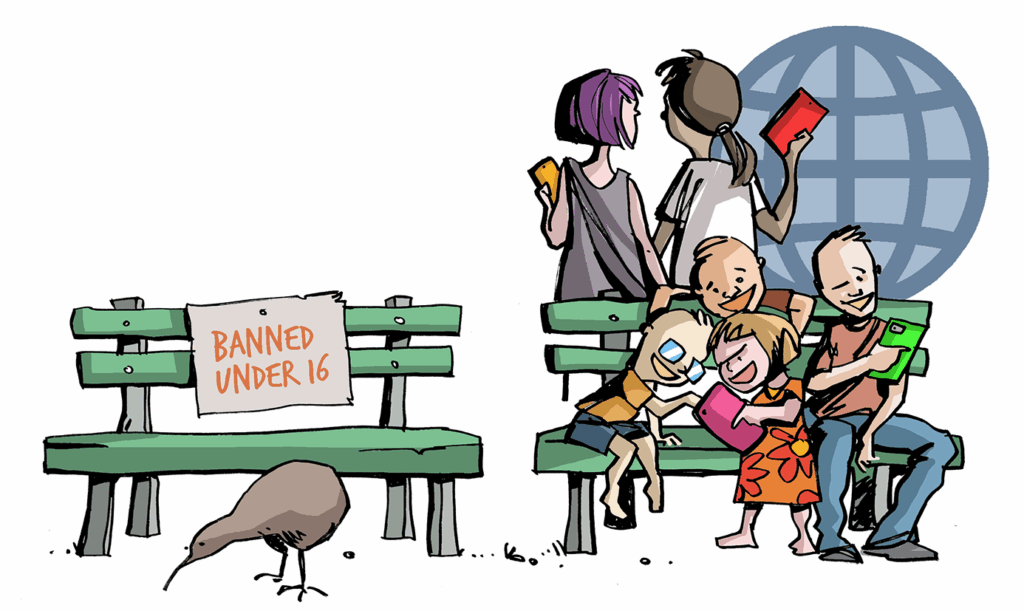

The momentum of social media bans for children

Australia made history in December as it began enforcing its landmark under-16 social media restrictions — the first nationwide rules of their kind anywhere in the world.

The measure, a new Social Media Minimum Age (SMMA) requirement under the Online Safety Act, obliges major platforms to take ‘reasonable steps’ to delete underage accounts and block new sign-ups, backed by fines of up to AUD 49.5 million and monthly compliance reporting.

As enforcement began, eSafety Commissioner Julie Inman Grant urged families — particularly those in regional and rural Australia — to consult the newly published guidance, which explains how the age limit works, why it has been raised from 13 to 16, and how to support young people during the transition.

The new framework should be viewed not as a ban but as a delay, Grant emphasised, raising the minimum account age from 13 to 16 to create ‘a reprieve from the powerful and persuasive design features built to keep them hooked and often enabling harmful content and conduct.’

It has been almost two months since the ban—we continue to use the word ‘ban’ in the text, as it has already become part of the vernacular—took effect. Here’s what has happened in the meantime.

Teen reactions. The shift was abrupt for young Australians. Teenagers posted farewell messages on the eve of the deadline, grieving the loss of communities, creative spaces, and peer networks that had anchored their daily lives. Youth advocates noted that those who rely on platforms for education, support networks, LGBTQ+ community spaces, or creative expression would be disproportionately affected.

Workarounds and their limits. Predictably, workarounds emerged immediately. Some teens tried, sometimes successfully, to fool facial-age estimation tools by distorting their expressions; others turned to VPNs to mask their locations. However, experts note that free VPNs frequently monetise user data or contain spyware, raising new risks. And the effort may be in vain: platforms retain an extensive set of signals they can use to infer a user’s true location and age, including IP addresses, GPS data, device identifiers, time-zone settings, mobile numbers, app-store information, and behavioural patterns. Age-related markers, such as linguistic analysis, school-hour activity patterns, face or voice age estimation, youth-focused interactions, and the age of an account, give companies additional tools to identify underage users.

Privacy and effectiveness concerns. Critics argue that the policy raises serious privacy concerns, since age-verification systems, whether based on government ID uploads, biometrics, or AI-based assessments, force people to hand over sensitive data that could be misused, breached, or normalised as part of everyday surveillance. Others point out that facial-age technology is least reliable for teenagers — the very group it is now supposed to regulate. Some question whether the fines are even meaningful, given that Meta earns roughly AUD 50 million in under two hours.

The limited scope of the rules has drawn further scrutiny. Dating sites, gaming platforms, and AI chatbots remain outside the ban, even though some chatbots have been linked to harmful interactions with minors. Educators and child-rights advocates argue that digital literacy and resilience would better safeguard young people than removing access outright. Many teens say they will create fake profiles or share joint accounts with parents, raising doubts about long-term effectiveness.

Industry pushback. Most major platforms have publicly criticised the law’s development and substance. They maintain that the law will be extremely difficult to enforce, even as they prepare to comply to avoid fines. Industry group NetChoice has described the measure as ‘blanket censorship,’ while Meta and Snap argue that real enforcement power lies with Apple and Google through app-store age controls rather than at the platform level.

Reddit has filed a High Court challenge to the ban, naming the Commonwealth of Australia and Communications Minister Anika Wells as defendants and arguing that the law has been wrongly applied to Reddit. The company maintains that it is a platform for adults and lacks the traditional social media features that the government has taken issue with.

Government position. The government, expecting a turbulent rollout, frames the measure as consistent with other age-based restrictions (such as no drinking alcohol under 18) and a response to sustained public concern about online harms. Officials argue that Australia is playing a pioneering role in youth online safety — a stance drawing significant international attention.

International interest. Beyond Australia, a growing club of countries is seeking to ban minors from major platforms.

- The European Parliament has proposed a minimum social media age of 16, allowing parental consent for users aged 13–15, and is exploring limits on addictive features such as autoplay and infinite scrolling.

- France’s National Assembly has advanced legislation to ban children under 15 from accessing social media, voting substantially in favour of a bill that would require platforms to block under‑15s and enforce age‑verification measures. The bill now goes to the Senate for approval, with implementation targeted before the next school year. The country’s health watchdog has highlighted research pointing to a range of documented negative effects of social media use on adolescent mental health, noting that online platforms amplify harmful pressures, cyberbullying and unrealistic beauty standards.

- Britain’s House of Lords has voted in favour of an amendment to the Children’s Wellbeing and Schools Bill that would ban children under 16 from accessing social media.

- Austria is considering banning children under 14 from using social media.

- Denmark and Norway are considering raising the minimum social media age to 15, with Denmark potentially banning under-15s outright and Norway proposing stricter age verification and data protections.

- Poland’s ruling coalition is currently drafting a law that would ban social media use for children under 15.

- Spain is considering prohibiting social media for under-16s. According to Spain’s Prime Minister, the government will also draft legislation to hold social media executives personally responsible for hate speech on their platforms.

- Malaysia plans to introduce a ban on social media accounts for people under 16 starting in 2026.

- India’s Madras High Court urged the country’s federal government to consider an Australia-style ban. The state governments of Goa and Andhra Pradesh are exploring similar restrictions, considering proposals to bar social media use for children under 16 amid rising concern about online safety and youth well‑being.

- In New Zealand, political debate has considered restrictions for minors, but no formal policy has been enacted.

- According to Australia’s Communications Minister, Anika Wells, officials from the EU, Fiji, Greece, and Malta have approached Australia for guidance, viewing the SMMA rollout as a potential model. Recent reporting suggests that Greece plans to prohibit social media use for children under 15.

All of these jurisdictions are now looking closely at Australia, watching for proof of concept — or failure.

The early results are in. On the enforcement metric — platform compliance and account takedowns — the law is functioning, with social media companies deactivating or restricting roughly 4.7 million accounts understood to belong to Australian users under 16 within the first month of enforcement.

However, on the behavioural outcome metric — whether under-16s are actually offline, safer, or replacing harmful patterns with healthier ones — the evidence remains inconclusive and evolving. The Australian government has also said it’s too early to declare the ban an unequivocal success.

The unresolved question. Young people retain access to group messaging tools, gaming services and video conferencing apps while they await eligibility for full social media accounts. But the question lingers: if access to large parts of the digital ecosystem remains open, what is the practical value of fencing off only one segment of the internet?

Platforms on trial(s)

In January 2026, a landmark trial opened in Los Angeles involving K.G.M., a 19-year-old plaintiff, and major social media companies. The case, first filed in July 2023, accuses platforms including Meta (Instagram and Facebook), YouTube (Google/Alphabet), Snapchat, and TikTok of intentionally designing their apps to be addictive, with serious consequences for young users’ mental health.

According to the complaint, features such as infinite scroll, algorithmic recommendations, and constant notifications contributed to compulsive use, exposure to harmful content, depression, anxiety, and even suicidal thoughts. The lawsuit also alleges that the platforms made it difficult for K.G.M. to avoid contact with strangers and predatory adults, despite parental restrictions. K.G.M.’s legal team argues that the companies knowingly optimised their platforms to maximise engagement at the expense of user well-being.

As the trial began, Snap Inc. and TikTok had already reached confidential settlements, leaving Meta and YouTube as the remaining defendants. Meta and YouTube deny intentionally causing harm, highlighting existing safety features, parental controls, and content filters.

Separately in federal court, Meta, Snap, YouTube and TikTok asked a judge to dismiss school districts’ lawsuits that seek damages for costs tied to student mental health challenges.

In both cases, the companies are arguing that Section 230 of the US Communications Decency Act shields them from liability, while the plaintiffs counter that their claims focus on allegedly addictive design features rather than user-generated content.

Legal experts and advocates are watching closely, noting that the outcomes could set a precedent for thousands of related lawsuits and ultimately influence corporate design practices.

From bytes to borders: How nations are coding their digital sovereignty

Governments have long debated controlling data, infrastructure, and technology within their borders. But there is a renewed sense of urgency, as geopolitical tensions are driving a stronger push to identify dependencies, build domestic capacity, and limit exposure to foreign technologies.

At the European level, France is pushing to make digital sovereignty measurable and actionable. Paris has proposed the creation of an EU Digital Sovereignty Observatory to map member states’ reliance on non-European technologies, from cloud services and AI systems to cybersecurity tools. Paired with a digital resilience index, the initiative aims to give policymakers a clearer picture of strategic dependencies and a stronger basis for coordinated action on procurement, investment, and industrial policy.

The bloc has, however, already started working on digital sovereignty. In January, the European Parliament adopted a resolution on European technological sovereignty and digital infrastructure. In the text, the Parliament calls for the development of a robust European digital public infrastructure (DPI) base layer grounded in open standards, interoperability, privacy- and security-by-design, and competition-friendly governance. Priority areas include semiconductors and AI chips, high-performance and quantum computing, cloud and edge infrastructure, AI gigafactories, data centres, digital identity and payments systems, and public-interest data platforms.

Also newly adopted is the Digital Networks Act, which frames sovereignty as the EU’s capacity to control, secure, and scale its critical connectivity infrastructure rather than as isolation from global markets. High-quality, secure digital networks are presented as a foundational enabler of Europe’s digital transformation, competitiveness, and security, with fragmentation of national markets seen as undermining the Union’s ability to act collectively and reduce dependencies. Satellite connectivity is explicitly identified as a core pillar of EU strategic autonomy, essential for broadband access in remote areas and for security, crisis management, defence, and other critical applications, prompting a shift toward harmonised, EU-level authorisation to strengthen resilience and avoid reliance on foreign providers.

The DNA complements the EU’s support for IRIS2, a planned multi-orbit constellation of 290 satellites designed to provide encrypted communications for citizens, governments and public agencies. In mid-January, the EU Commissioner for Defence and Space, Andrius Kubilius, said the timeline had been advanced: IRIS2 now aims to begin initial government communication services by 2029, a year earlier than originally planned. The network also aims to reduce reliance on external providers, as Europe is ‘quite dependent on American services,’ per Kubilius.

The Commission is also putting money behind these goals: it announced €307.3 million in funding to boost capabilities in AI, robotics, photonics, and other emerging technologies. A significant portion of this investment is tied to initiatives such as the Open Internet Stack, which seek to deepen European digital autonomy. The funding, open to businesses, academia, and public bodies, reflects a broader push to translate policy ambitions into concrete technological capacity.

There’s more in the pipeline. The Cloud and AI Development Act, a revision of the Chips Act and the Quantum Act, all due in 2026, will also bolster EU digital sovereignty, enhancing strategic autonomy across the digital stack.

Furthermore, the European Commission is preparing a strategy to commercialise European open-source software, alongside the Cloud and AI Development Act, to strengthen developer communities, support adoption across various sectors, and ensure market competitiveness. By providing stable support and fostering collaboration between government and industry, the strategy seeks to create an economically sustainable open-source ecosystem.

In Burkina Faso, the focus is on reducing reliance on external providers while consolidating national authority over core digital systems. The government has launched a Digital Infrastructure Supervision Centre to centralise oversight of national networks and strengthen cybersecurity monitoring. New mini data centres for public administration are being rolled out to ensure that sensitive state data is stored and managed domestically.

Sovereignty debates are also translating into decisions to limit, replace, or restructure the use of digital services provided by foreign entities. France has announced plans to phase out US-based collaboration platforms such as Microsoft Teams, Zoom, Google Meet, and Webex from public administration, replacing them with a domestically developed alternative, ‘Visio’.

The Dutch data protection authority has urged the government to act swiftly to protect the country’s digital sovereignty, after DigiD, the national digital identity system, appeared set for acquisition by a US company. The watchdog argued that the Netherlands relies heavily on a small group of non-European cloud and IT providers, and stressed that public bodies lack clear exit strategies if foreign ownership suddenly shifts.

In the USA, the TikTok controversy can also be seen through a sovereignty lens: rather than banning TikTok, authorities have pushed the platform to restructure its operations for the US market. A new entity will manage TikTok’s US operations, with user data and algorithms handled inside the US. The recommendation algorithm is meant to be trained only on US user data to meet American regulatory requirements.

In more security-driven contexts, the concept is sharper still. As Europe remains heavily dependent on both Chinese telecom vendors and US cloud and satellite providers, the European Commission proposed binding cybersecurity rules targeting critical ICT supply chains.

Russia’s Security Council has recently labelled services such as Starlink and Gmail as national security threats, describing them as tools for ‘destructive information and technical influence.’ These assessments are expected to feed into Russia’s information security doctrine, reinforcing the treatment of digital services provided by foreign companies not as neutral infrastructure but as potential vectors of geopolitical risk.

The big picture. The common thread is clear: Digital sovereignty is now a key consideration for governments worldwide. The approaches may differ, but the goal remains largely the same – to ensure that a nation’s digital future is shaped by its own priorities and rules. But true independence is hampered by deeply embedded global supply chains, prohibitive costs of building parallel systems, and the risk of stifling innovation through isolation. While the strategic push for sovereignty is clear, untangling from interdependent tech ecosystems will require years of investment, migration, and adaptation. The current initiatives mark the beginning of a protracted and challenging transition.

The Grok shock: How AI deepfakes triggered reactions worldwide

In January 2026, a regulatory firestorm engulfed Grok, the AI tool built into Elon Musk’s X platform, as reports surfaced that Grok was being used to produce non-consensual sexualised and deepfake images, including depictions of individuals undressed or in compromising scenarios without their consent.

Musk has suggested that users who enter such prompts should be held liable, a move criticised as shifting responsibility away from the platform.

The backlash was swift and severe. The UK’s Ofcom launched an investigation under the Online Safety Act to determine whether X has complied with its duties to protect people in the UK from content that is illegal in the country. UK Prime Minister Keir Starmer condemned the ‘disgusting’ outputs. The EU declared that the content, especially content involving children, had ‘no place in Europe.’ Southeast Asia acted decisively: Malaysia and Indonesia blocked Grok entirely, citing obscene image generation, and the Philippines swiftly followed suit on child-protection grounds.

Under pressure, X announced tightened controls on Grok’s image-editing capabilities. The platform said it had introduced technological safeguards to block the generation and editing of sexualised images of real people in jurisdictions where such content is illegal.

However, regulatory authorities signalled that this step, while positive, would not halt oversight.

In the UK, Ofcom stated that its formal investigation into X’s handling of Grok and the emergence of deepfake imagery will continue, even as it welcomed the platform’s policy changes. The regulator emphasised its commitment to understanding how the platform facilitated the proliferation of such content and to ensuring that corrective measures are implemented.

The UK Information Commissioner’s Office (ICO) opened a formal investigation into X and xAI over whether Grok’s processing of personal data complies with UK data protection law, in particular the core principles of lawfulness, fairness, and transparency, and whether its design and deployment included sufficient built-in protections to prevent the misuse of personal data to create harmful or manipulated images.

Canada’s Privacy Commissioner widened an existing investigation into X Corp. and opened a parallel probe into xAI to assess whether the companies obtained valid consent for the collection, use, and disclosure of personal information to create AI-generated deepfakes, including sexually explicit content.

In France, the Paris prosecutor’s office confirmed that it will widen an ongoing criminal investigation into X to include complicity in spreading pornographic images of minors, sexually explicit deepfakes, denial of crimes against humanity and manipulation of an automated data processing system. The cybercrime unit of the Paris prosecutor has raided the French office of X as part of this expanded investigation. Musk and former CEO Linda Yaccarino have been summoned for voluntary interviews. X denied any wrongdoing and called the raid an ‘abusive act of law enforcement theatre,’ while Musk described it as a ‘political attack.’

The European Commission has opened a formal investigation into X under the bloc’s Digital Services Act (DSA). The probe focuses on whether the company met its legal obligations to mitigate risks from AI-generated sexualised deepfakes and other harmful imagery produced by Grok — especially those that may involve minors or non-consensual content.

Brazil’s Federal Public Prosecutor’s Office, National Data Protection Authority and National Consumer Secretariat issued coordinated recommendations to X to stop Grok from producing and disseminating sexualised deepfakes, warning that Brazil’s civil liability rules could apply if harmful outputs continued and that the platform should be disabled until safeguards were in place.

In India, the Ministry of Electronics and Information Technology (MeitY) demanded the removal of obscene and unlawful content generated by the AI tool and required a report on corrective actions within 72 hours. The ministry also ordered the company to review Grok’s technical and governance framework. The deadline has since passed, and neither the ministry nor Grok has made any updates public.

Regulatory authorities in South Korea are examining whether Grok has violated personal data protection and safety standards by enabling the production of explicit deepfakes, and whether the matter falls within their legal remit.

Indonesia, Malaysia and the Philippines, however, have restored access after the platform introduced additional safety controls aimed at curbing the generation and editing of problematic content.

The red lines. The reaction was so immediate and widespread precisely because it struck two near-universal nerves: the profound violation of privacy through non-consensual sexual imagery, a moral line nearly everyone agrees cannot be crossed, combined with the unique perils of AI, a trigger for acute governmental sensitivity.

The big picture. Grok’s ongoing scrutiny shows that not all regulators are satisfied with the safeguards implemented so far, highlighting that remedies may need to be tailored to different jurisdictions.

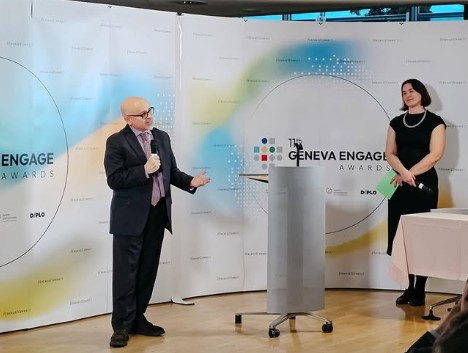

11th Geneva Engage Awards

Diplo and the Geneva Internet Platform (GIP) organised the 11th edition of the Geneva Engage Awards, recognising the efforts of International Geneva actors in digital outreach and online engagement.

This year’s theme, ‘Back to Basics: The Future of Websites in the AI Era,’ highlighted new practices in which users increasingly rely on AI assistants and AI-generated summaries that may not cite primary or the most relevant sources.

The opening segment of the event set the context for a shifting digital environment, exploring the transition from a search-based web to an answer-driven web and its implications for public engagement. It also offered a brief, transparent look at the logic behind this year’s award rankings, unpacking the metrics and mathematical models used to assess digital presence and accessibility. This led to the awards presentation, which recognised Geneva-based actors for their online engagement and influence.

The awards honoured organisations across three main categories: international organisations, NGOs, and permanent representations. The awards assessed efforts in social media engagement, web accessibility, and AI leadership, reinforcing Geneva’s role as a trusted source of reliable information as technology changes rapidly.

In the International Organisations category, the United Nations Conference on Trade and Development (UNCTAD) won first place. The United Nations Office at Geneva (UNOG) and the United Nations Office for the Coordination of Humanitarian Affairs (UNOCHA) were named runners-up for their strong digital presence and outreach.

Among non-governmental organisations, the International AIDS Society ranked first. It was followed by the Aga Khan Development Network (AKDN) and the International Union for Conservation of Nature (IUCN), both recognised as runners-up for their effective digital engagement.

In the Permanent Representations category, the Permanent Mission of the Republic of Indonesia to the United Nations Office and other international organisations in Geneva took first place. The Permanent Mission of the Republic of Rwanda and the Permanent Mission of France were named runners-up.

The Web Accessibility Award went to the Permanent Mission of Canada, while the Geneva AI Leadership Award was presented to the International Telecommunication Union (ITU).

Honourable mentions were awarded to the World Economic Forum (WEF), the Permanent Delegation of the European Union to the United Nations Office in Geneva, the World Health Organization (WHO), and the United Nations High Commissioner for Refugees (UNHCR).

After the ceremony, the focus shifted from recognition to exchange at a networking cocktail and a ‘knowledge bazaar.’ Participants circulated through interactive stations that translated abstract digital and AI concepts into tangible experiences. These included a guided walkthrough of what happens technically when a question is posed to an AI system; an exploration of the data and network analysis underpinning the Geneva Engage Awards, including a large-scale mapping of interconnections between Geneva-related websites; and discussions on the role of curated, human-enriched knowledge in feeding AI systems, with practical insights into how organisations can preserve and scale institutional expertise.

Other stations highlighted hands-on approaches to AI capacity-building through apprenticeships that emphasise learning by building AI agents, as well as the use of AI for post-event reporting. Together, these sessions showed how AI can transform fleeting discussions into structured, multilingual, and lasting knowledge.

Looking ahead: Our annual AI and digital forecast

As we enter the new year, we bring you our annual outlook on AI and digital developments, featuring insights from our Executive Director. Drawing on our coverage of digital policy over the past year on the Digital Watch Observatory, as well as our professional experience and expertise, we highlight the 10 trends and events we expect to shape the digital landscape in the year ahead.

Technologies. AI is becoming a commodity, affecting everyone—from countries competing for AI sovereignty to individual citizens. Equally important is the rise of bottom-up AI: in 2026, small to large language models will be able to run on corporate or institutional servers. Open-source development, a major milestone in 2025, is expected to become a central focus of future geostrategic competition.

Geostrategy. The good news is that, despite all geopolitical pressure, we still have an integrated global internet. However, digital fragmentation is accelerating, with the filtering of social media and other services, among other developments, increasingly clustering around three major hubs: the United States, China, and potentially the EU. Geoeconomics is becoming a critical dimension of this shift, particularly given the global footprint of major technology companies, and any fragmentation, including in trade and taxation, will inevitably affect them. Equally important is the role of ‘geo-emotions’: the growing disconnect between public sentiment and industry enthusiasm. While companies remain largely optimistic about AI, public scepticism is increasing, and this divergence may carry significant political implications.

Governance. The core governance dilemma remains whether national representatives—parliamentarians domestically and diplomats internationally—are truly able to protect citizens’ digital interests related to data, knowledge, and cybersecurity. While there are moments of productive discussion and well-run events, substantive progress remains limited. One positive note is that inclusive governance, at least in principle, continues through multistakeholder participation, though it raises its own unresolved questions.

Security. The adoption of the Hanoi Cybercrime Convention at the end of the year is a positive development, and substantive discussions at the UN continue despite ongoing criticism of the institution. While it remains unclear whether these processes are making us more secure, they are expanding the governance toolbox. At the same time, attention should extend beyond traditional concerns, such as cyberwarfare, terrorism, and crime, to emerging risks arising from the interconnection of AI systems through APIs. These points of integration create new interdependencies and potential backdoors for cyberattacks.

Human rights. Human rights are increasingly under strain, with recent policy shifts by technology companies and growing transatlantic tensions between the EU and the United States highlighting a changing landscape. While debates continue to focus heavily on bias and ethics, deeper human rights concerns—such as the rights to knowledge, education, dignity, meaningful work, and the freedom to remain human rather than optimised—receive far less attention. As AI reshapes society, the human rights community must urgently revisit its priorities, grounding them in the protection of life, dignity, and human potential.

Economy. The traditional three-pillar framework comprising security, development, and human rights is shifting toward economic and security concerns, with human rights being increasingly sidelined. Technological and economic issues, from access to rare earths to AI models, are now treated as strategic security matters. This trend is expected to accelerate in 2026, making the digital economy a central component of national security. Greater attention should be paid to taxation, the stability of the global trade system, and how potential fragmentation or disruption of global trade could impact the tech sector.

Standards. The lesson from social media is clear: without interoperable standards, users get locked into single platforms. The same risk exists for AI, so developing interoperable AI standards is critical to avoid repeating these mistakes. Ideally, individuals and companies should build their own AI; where that isn’t feasible, platforms should at a minimum be interoperable, allowing users to move seamlessly across providers such as OpenAI, Claude, or DeepSeek. This approach can foster innovation, competition, and user choice in the emerging AI-dominated ecosystem.

Content. The key issue for content in 2026 is the tension between governments and US tech companies, particularly regarding compliance with EU laws. At its core, countries have the right to set rules for content within their territories, reflecting their interests, and citizens expect their governments to enforce them. While media debates often focus on misuse or censorship, the fundamental question remains: can a country regulate content on its own soil? The answer is yes, and adapting to these rules will be a major source of tension going forward.

Development. Countries that are currently behind in AI aren’t necessarily losing. Success in AI is less about owning large models or investing heavily in hardware, and more about preserving and cultivating local knowledge. Small countries should invest in education, skills, and open-source platforms to retain and grow knowledge locally. Paradoxically, a slower entry into AI could be an advantage, allowing countries to focus on what truly matters: people, skills, and effective governance.

Environment. Concerns about AI’s impact on the environment and water resources persist. It is worth asking whether massive AI farms are truly necessary. Small AI systems could serve as extensions of existing processes or support training and education, reducing the need for energy- and water-intensive platforms. At a minimum, AI development should prioritise sustainability and efficiency, mitigating the risk of large-scale digital waste while still delivering practical benefits.