5-12 December 2025

HIGHLIGHT OF THE WEEK

Under-16 social media use in Australia: A delay or a ban?

Australia made history on Wednesday as it began enforcing its landmark under-16 social media restrictions — the first nationwide rules of their kind anywhere in the world.

The measure, a new Social Media Minimum Age (SMMA) requirement under the Online Safety Act, obliges major platforms to take ‘reasonable steps’ to delete underage accounts and block new sign-ups, backed by fines of up to AUD 49.5 million and monthly compliance reporting.

As enforcement began, eSafety Commissioner Julie Inman Grant urged families — particularly those in regional and rural Australia — to consult the newly published guidance, which explains how the age limit works, why it has been raised from 13 to 16, and how to support young people during the transition.

The new framework should be viewed not as a ban but as a delay, Inman Grant emphasised: raising the minimum account age from 13 to 16 creates ‘a reprieve from the powerful and persuasive design features built to keep them hooked and often enabling harmful content and conduct.’

It has been a few days since the ban took effect (we continue to use the word ‘ban’ in the text, as it has already become part of the vernacular). Here’s what has happened since.

Teen reactions. The shift was abrupt for young Australians. Teenagers posted farewell messages on the eve of the deadline, grieving the loss of communities, creative spaces, and peer networks that had anchored their daily lives. Youth advocates noted that those who rely on platforms for education, support networks, LGBTQ+ community spaces, or creative expression would be disproportionately affected.

Workarounds and their limits. Predictably, workarounds emerged immediately. Some teens managed to fool facial-age estimation tools by distorting their expressions; others turned to VPNs to mask their locations. However, experts note that free VPNs frequently monetise user data or contain spyware, raising new risks. And the effort might be in vain: platforms retain an extensive set of signals they can use to infer a user’s true location and age, including IP addresses, GPS data, device identifiers, time-zone settings, mobile numbers, app-store information, and behavioural patterns. Age-related markers, such as linguistic analysis, school-hour activity patterns, face or voice age estimation, youth-focused interactions, and the age of an account, give companies additional tools to identify underage users.

Privacy and effectiveness concerns. Critics argue that the policy raises serious privacy concerns, since age-verification systems, whether based on government ID uploads, biometrics, or AI-based assessments, force people to hand over sensitive data that could be misused, breached, or normalised as part of everyday surveillance. Others point out that facial-age technology is least reliable for teenagers — the very group it is now supposed to regulate. Some question whether the fines are even meaningful, given that Meta earns roughly AUD 50 million in under two hours.

The limited scope of the rules has drawn further scrutiny. Dating sites, gaming platforms, and AI chatbots remain outside the ban, even though some chatbots have been linked to harmful interactions with minors. Educators and child-rights advocates argue that digital literacy and resilience would better safeguard young people than removing access outright. Many teens say they will create fake profiles or share joint accounts with parents, raising doubts about long-term effectiveness.

Industry pushback. Most major platforms have publicly criticised the law’s development and substance. They maintain that the law will be extremely difficult to enforce, even as they prepare to comply to avoid fines. Industry group NetChoice has described the measure as ‘blanket censorship,’ while Meta and Snap argue that real enforcement power lies with Apple and Google through app-store age controls rather than at the platform level.

Reddit has filed a High Court challenge to the ban, naming the Commonwealth of Australia and Communications Minister Anika Wells as defendants and claiming that the law is being wrongly applied to Reddit. The company holds that Reddit is a platform for adults and lacks the traditional social media features that the government has taken issue with.

Government position. The government, expecting a turbulent rollout, frames the measure as consistent with other age-based restrictions (such as the ban on drinking alcohol under 18) and as a response to sustained public concern about online harms. Officials argue that Australia is playing a pioneering role in youth online safety, a stance drawing significant international attention.

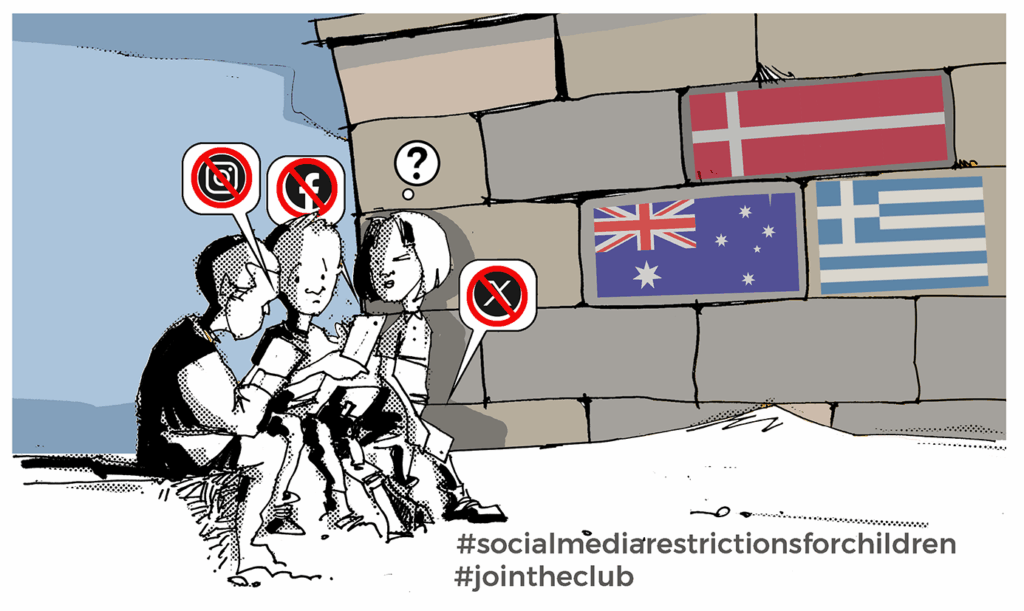

International interest. This development has garnered considerable international attention. As we previously reported, there is a small but growing club of countries seeking to ban minors from major platforms.

- The European Parliament has proposed a minimum social media age of 16, allowing parental consent for users aged 13–15, and is exploring limits on addictive features such as autoplay and infinite scrolling.

- In France, lawmakers have suggested banning under-15s from social media and introducing a ‘curfew’ for older teens.

- Spain is considering parental authorisation for under-16s.

- Malaysia plans to introduce a ban on social media accounts for people under 16 starting in 2026.

- Denmark and Norway are considering raising the minimum social media age to 15, with Denmark potentially banning under-15s outright and Norway proposing stricter age verification and data protections.

- In New Zealand, political debate has considered restrictions for minors, but no formal policy has been enacted.

- According to Australia’s Communications Minister, Anika Wells, officials from the EU, Fiji, Greece, and Malta have approached Australia for guidance, viewing the SMMA rollout as a potential model.

All of these jurisdictions are now looking closely at Australia, watching for proof of concept — or failure.

The unresolved question. Young people are reminded that they retain access to group messaging tools, gaming services and video conferencing apps while they await eligibility for full social media accounts. But the question lingers: if access to large parts of the digital ecosystem remains open, what is the practical value of fencing off only one segment of the internet?

IN OTHER NEWS LAST WEEK

This week in AI governance

National regulations

Vietnam. Vietnam’s National Assembly has passed the country’s first comprehensive AI law, establishing a risk management regime, sandbox testing, a National AI Development Fund and startup voucher schemes to balance strict safeguards with innovation incentives. The 35‑article legislation — largely inspired by EU and other models — centralises AI oversight under the government and will take effect in March 2026.

The USA. US President Donald Trump has signed an executive order targeting what the administration views as the most onerous and excessive state-level AI laws. The White House argues that a growing patchwork of state rules threatens to stymie innovation, burden developers, and weaken US competitiveness.

To address this, the order creates an AI Litigation Task Force to challenge state laws deemed obstructive to the policy set out in the executive order: to sustain and enhance US global AI dominance through a minimally burdensome national policy framework for AI. The Commerce Department is directed to review all state AI regulations within 90 days to identify those that impose undue burdens. The order also uses federal funding as leverage, allowing certain grants to be conditioned on states aligning with national AI policy.

The UK. More than 100 UK parliamentarians from across parties are pushing the government to adopt binding rules on advanced AI systems, saying current frameworks lag behind rapid technological progress and pose risks to national and global security. The cross‑party campaign, backed by former ministers and figures from the tech community, seeks mandatory testing standards, independent oversight and stronger international cooperation — challenging the government’s preference for existing, largely voluntary regulation.

National plans and investments

Russia. Russia is advancing a nationwide plan to expand the use of generative AI across public administration and key sectors, with a proposed central headquarters to coordinate ministries and agencies. Officials see increased deployment of domestic generative systems as a way to strengthen sovereignty, boost efficiency and drive regional economic development, prioritising locally developed AI over foreign platforms.

Qatar. Qatar has launched Qai, a new national AI company designed to accelerate the country’s digital transformation and global AI footprint. Qai will provide high‑performance computing and scalable AI infrastructure, working with research institutions, policymakers and partners worldwide to promote the adoption of advanced technologies that support sustainable development and economic diversification.

The EU. The EU has advanced an ambitious gigafactory programme to strengthen AI leadership by scaling up infrastructure and computational capacity across member states. This involves expanding a network of AI ‘factories’ and antennas that provide high‑performance computing and technical expertise to startups, SMEs and researchers, integrating innovation support alongside regulatory frameworks like the AI Act.

Australia. Australia has sealed a USD 4.6 billion deal for a new AI hub in western Sydney, partnering with private sector actors to build an AI campus with extensive GPU-based infrastructure capable of supporting advanced workloads. The investment forms part of broader national efforts to establish domestic AI innovation and computational capacity.

Partnerships

Canada‑EU. Canada and the EU have expanded their digital partnership on AI and security, committing to deepen cooperation on trusted AI systems, data governance and shared digital infrastructure. This includes memoranda aimed at advancing interoperability, harmonising standards and fostering joint work on trustworthy digital services.

The International Network for Advanced AI Measurement, Evaluation and Science. The global network has strengthened cooperation on benchmarking AI governance progress, focusing on metrics that help compare national policies, identify gaps and support evidence‑based decision‑making in AI regulation internationally. This network includes Australia, Canada, the EU, France, Japan, Kenya, the Republic of Korea, Singapore, the UK and the USA. The UK has assumed the role of Network Coordinator.

Trump allows Nvidia to sell chips to approved Chinese customers

The USA has decided to allow the sale of H200 chips to approved customers in China, a decision that marks a notable shift in export controls.

Under the new framework, sales of H200 chips will proceed, subject to conditions including licensing oversight by the US Department of Commerce and a revenue-sharing mechanism that directs 25% of the proceeds back to the US government.

The road ahead. The policy is drawing scrutiny from some US lawmakers and national security experts who caution that increased hardware access could strengthen China’s technological capabilities in sensitive domains.

Poland halts crypto reform as Norway pauses CBDC plans

Poland’s effort to introduce a comprehensive crypto law has reached an impasse after the Sejm failed to overturn President Karol Nawrocki’s veto of a bill meant to align national rules with the EU’s MiCA framework.

The government argued the reform was essential for consumer protection and national security, but the president rejected it as overly burdensome and a threat to economic freedom, citing expansive supervisory powers and website-blocking provisions. With the veto upheld, Poland remains without a clear domestic regulatory framework for digital assets. In the aftermath, Prime Minister Donald Tusk has pledged to renew efforts to pass crypto legislation.

In Norway, Norges Bank has concluded that current conditions do not justify launching a central bank digital currency, arguing that Norway’s payment system remains secure, efficient and well-tailored to users.

The bank maintains that the Norwegian krone continues to function reliably, supported by strong contingency arrangements and stable operational performance. Governor Ida Wolden Bache said the assessment reflects timing rather than a rejection of CBDCs, noting the bank could introduce one if conditions change or if new risks emerge in the domestic payments landscape.

Zooming out. Both cases highlight a cautious approach to digital finance in Europe: while Poland grapples with how much oversight is too much, Norway is weighing whether innovation should wait until the timing is right.

LAST WEEK IN GENEVA

On Wednesday (3 December), Diplo, UNEP, and Giga co-organised an event at the Giga Connectivity Centre in Geneva, titled ‘Digital inclusion by design: Leveraging existing infrastructure to leave no one behind’. The event looked at realities on the ground when it comes to connectivity and digital inclusion, and at concrete examples of how community anchor institutions such as post offices, schools, and libraries can contribute significantly to advancing meaningful inclusion. There was also a call for policymakers at national and international levels to keep these community anchor institutions in mind when designing inclusion strategies or discussing frameworks, such as the GDC and WSIS+20.

Organisations and institutions are invited to submit event proposals for the second edition of Geneva Security Week. Submissions are open until 6 January 2026. Co-organised once again by the UN Institute for Disarmament Research (UNIDIR) and the Swiss Federal Department of Foreign Affairs (FDFA), Geneva Security Week 2026 will take place from 4 to 8 May 2026 under the theme ‘Advancing Global Cooperation in Cyberspace’.

LOOKING AHEAD

UN General Assembly High-level meeting on WSIS+20 review

Twenty years after the conclusion of the World Summit on the Information Society (WSIS), the WSIS+20 review process will take stock of progress in implementing WSIS outcomes, identify potential ICT gaps and areas for continued focus, and address challenges, including bridging the digital divide and harnessing ICTs for development.

The overall review will be concluded by a two-day high-level meeting of the UN General Assembly (UNGA), scheduled for 16–17 December 2025. The meeting will consist of plenary meetings, which will include statements in accordance with General Assembly resolution 79/277 and the adoption of the draft outcome document.

Diplo and the Geneva Internet Platform (GIP) will provide just-in-time reporting from the meeting. Bookmark our dedicated web page; more details will be available soon.

READING CORNER

Human rights are no longer abstract ideals but living principles shaping how AI, data, and digital governance influence everyday life, power structures, and the future of human dignity in an increasingly technological world.