28 November–5 December 2025

HIGHLIGHT OF THE WEEK

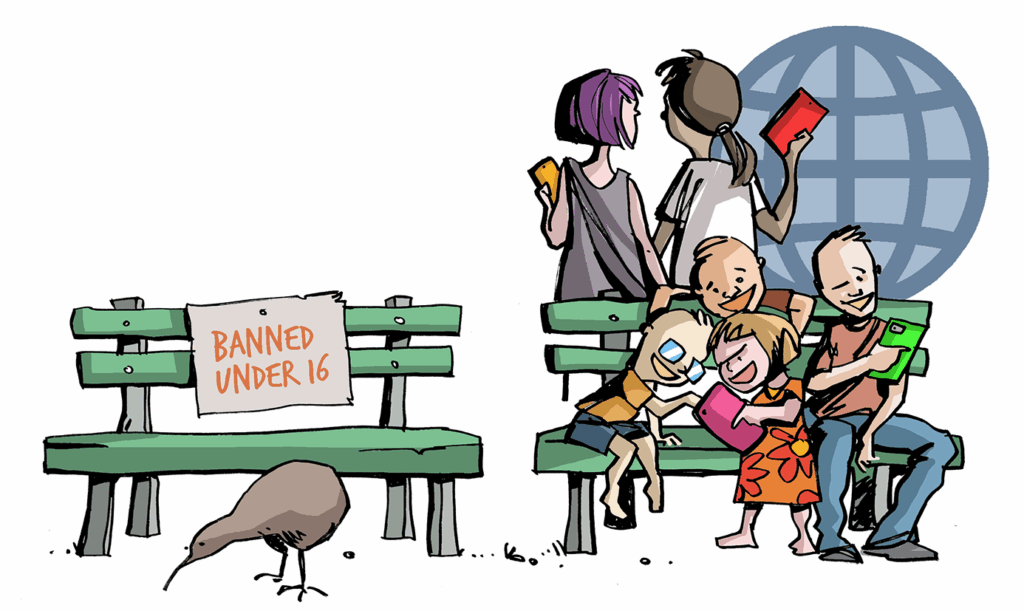

Australia’s social media ban: Making it work

Australia’s under-16 social-media ban is moving from legislation to enforcement, and the first signs of impact are already visible. Ahead of the 10 December deadline, Meta has begun blocking teen users, warning that accounts flagged as belonging to under-16s will be restricted or shut down. Users removed by mistake can appeal by submitting a government-issued ID or a video selfie age check, a process that is already prompting complaints about privacy and accuracy. YouTube, meanwhile, has criticised the framework as unrealistic and potentially harmful, arguing that overly rigid age controls could push young people toward far less safe online spaces. Nevertheless, the platform has said it will comply with the ban.

The Australian government remains confident the world will follow its lead, framing the ban as a model for global child-safety regulation. But with implementation underway, many are asking a basic question: how will the ban actually work in practice?

Australia’s Online Safety Amendment (Social Media Minimum Age) Act 2024 bans anyone under 16 from creating or maintaining accounts on major social-media platforms.

- Companies such as Meta must take ‘reasonable steps’ to verify users’ ages or face fines of up to AUD 50 million.

- Platforms can choose from various verification methods, including government-issued ID checks, third-party age-assurance tools, facial-analysis systems, or data-based age inference.

- If an account appears to belong to someone under 16, platforms must restrict it, request verification, or close it. Users who are wrongly flagged can appeal, although the process varies from platform to platform.

- The law applies broadly to apps with social-networking features, while some smaller platforms fall outside the scope.

Overall, enforcement relies heavily on industry compliance and emerging age-verification technologies.

Public and expert reactions reflect this tension between intention and reality.

Supporters argue the ban protects children from cyberbullying, harmful content, and addictive platform design, while setting a global precedent for stricter regulation of Big Tech. Some mental-health professionals cautiously welcome reduced exposure to high-risk environments.

Critics warn that the policy may isolate teenagers, limit self-expression, and disproportionately harm vulnerable groups who rely on online communities. Age-verification trials have revealed accuracy problems, with systems misclassifying both teens and adults, raising concerns about wrongful account closures. Privacy advocates object to the increased collection of sensitive data, including IDs and facial images. Human rights groups say the ban restricts young people’s freedoms and may create a false sense of security. Tech companies publicly question the ban’s feasibility, but many have signalled compliance to avoid heavy fines. Meanwhile, many Australian teens reportedly plan to migrate to less regulated apps, potentially exposing themselves to greater risks. Overall, the debate pits safety against autonomy, privacy, and effectiveness.

Yet signs of strain are already emerging. Teens are rapidly migrating to smaller or less regulated platforms, using VPNs, borrowing adults’ devices, or exploiting loopholes in verification systems. So what happens next? Will the government spend its time chasing teenagers across an ever-expanding maze of apps, mirrors, clones and VPNs? Because if the history of the internet teaches anything, it’s this: once something is banned, it rarely disappears; it simply moves, mutates, and comes back wearing a different username.

IN OTHER NEWS LAST WEEK

This week in AI governance

Australia. Australia has unveiled a new National AI Plan designed to harness AI for economic growth, social inclusion and public-sector efficiency, while emphasising safety, trust and fairness in adoption. The plan mobilises substantial investment: hundreds of millions of Australian dollars will be channelled into research, infrastructure, skills development and programmes to help small and medium enterprises adopt AI, and the government also plans to expand nationwide access to the technology.

Practical steps include establishing a national AI centre, supporting AI adoption among businesses and nonprofits, enhancing digital literacy through schools and community training, and integrating AI into public service delivery.

The planned steps also include establishing an AI Safety Institute (AISI), which we covered last week.

Uzbekistan. Uzbekistan has announced the launch of the ‘5 million AI leaders’ project to develop its domestic AI capabilities. As part of this plan, the government will integrate AI-focused curricula into schools, vocational training and universities; train 4.75 million students, 150,000 teachers and 100,000 public servants; and launch large-scale competitions for AI startups and talent.

The programme also includes building high-performance computing infrastructure (in partnership with a major tech company), establishing a national AI transfer office abroad, and creating state-of-the-art laboratories in educational institutions — all intended to accelerate adoption of AI across sectors.

The government frames this as central to modernising public administration and positioning Uzbekistan among the world’s top 50 AI-ready countries.

The country will also adopt the ‘Rules and principles of ethics in the development and use of artificial intelligence technologies’, a framework that will introduce unified standards for developers, implementers and users across the country. Developers must ensure algorithmic transparency, safeguard personal data, assess risks and avoid harmful use; users must comply with legislation, respect rights, and handle data responsibly. Any harm to human rights, national security or the environment will trigger legal liability.

Belgium. Belgium joins a growing number of countries and public-sector organisations that have restricted or blocked China’s DeepSeek over security concerns. All Belgian federal government officials must cease using DeepSeek, effective 1 December, and all instances of DeepSeek must be removed from official devices.

The move follows a warning from the Centre for Cybersecurity Belgium, which identified serious data-protection risks associated with the tool and flagged its use as problematic for handling sensitive government information.

Canada. Canada has formally adopted the world’s first national standard for accessible and equitable AI with the release of CAN-ASC-6.2 – Accessible and Equitable Artificial Intelligence Systems. The standard aims to ensure AI systems are designed to be accessible, inclusive and fair — in particular for people with disabilities — embedding accessibility and equity throughout the AI lifecycle. It provides guidance for organisations and developers on how to prevent exclusion, guarantee equitable benefits, and avoid discriminatory or exclusionary system designs. The standard was developed with input from a diverse committee, including persons with disabilities and members of equity-deserving groups. Its publication marks a major step toward ensuring that AI boosts social inclusion and digital accessibility, rather than reinforcing inequality.

The EU. The European Commission has launched a formal antitrust investigation into whether Meta’s new restrictions on AI providers’ access to WhatsApp violate EU competition rules.

Under a Meta policy introduced in October 2025, AI companies are barred from using the WhatsApp Business Solution if AI is their primary service, although AI used for ancillary purposes, such as automated customer support, remains allowed. The policy will take effect for existing AI providers on 15 January 2026 and has applied to new providers since 15 October 2025.

The Commission fears this could shut out third-party AI assistants from reaching users in the European Economic Area (EEA), while Meta’s own Meta AI would continue to operate on the platform.

The probe—covering the entire EEA except Italy, which has been conducting its own investigation since July—will examine whether Meta is abusing its dominant position in breach of Article 102 TFEU and Article 54 of the EEA Agreement.

European regulators say the outcome could guide future oversight as generative AI becomes woven into essential communications services. The case signals growing concern about the concentration of power in rapidly evolving AI ecosystems.

Google DeepMind CEO Demis Hassabis stated that ‘AGI, probably the most transformative moment in human history, is on the horizon’.

Revision 2 of the WSIS+20 outcome document released

A revised version of the WSIS+20 outcome document, Revision 2, was published on 3 December by the co-facilitators of the intergovernmental process.

Revision 2 introduces several noteworthy changes compared to Revision 1. In the introduction, new commitments include catalysing women’s economic agency and highlighting the importance of applying a human-centric approach throughout the lifecycle of digital technologies.

The section on digital divides is strengthened through a change in title from ‘bridging’ to ‘closing’ digital divides, a new recognition that such divides pose particular challenges for developing countries, an explicit call to integrate accessibility-by-design into digital development, and a clarification that the internet and digital services need to become both fully accessible and affordable.

In the digital economy section, previous language about governments’ concerns with safeguarding employment rights and welfare has been removed. The section on social and economic development now includes new language on the need for greater international cooperation to promote digital inclusion and digital literacy, including capacity building and financial mechanisms.

Environmental provisions are expanded: Revision 2 introduces new language emphasising responsible mining and processing practices for critical mineral resources (although it removes a reference to equitable access to such resources), and it brings back a paragraph on e-waste, restoring calls for improved data gathering, collaboration on safe and efficient waste management, and sharing of technology and best practices.

Several changes also appear in areas related to security, financing, and AI. The section on building confidence and security in the use of ICTs clarifies that such efforts must be consistent with international human rights law (not just human rights). It also restores language from the Zero Draft recognising the need to counter violence occurring through or amplified by technology, as well as hate speech, discrimination, misinformation, cyberbullying, and child sexual exploitation and abuse, together with commitments to establish robust risk-mitigation and redress measures.

The paragraph on future financial mechanisms for digital development is revised to clarify that a potential task force would examine such mechanisms, and that the Secretary-General would consider establishing it within existing mandates and resources and in coordination with WSIS Action Line facilitators and other relevant UN entities. It also notes that the task force would build on and complement ongoing financing initiatives and mechanisms involving all stakeholders.

In the AI section, requests to establish an AI research programme and an AI capacity-building fellowship are now directed to the UN Inter-Agency Working Group on AI, with the fellowship explicitly dedicated to increasing AI research expertise.

Several changes were made to the paragraphs on the Internet Governance Forum (IGF). Revision 2 adds language specifying that, in making the IGF a permanent UN forum, its secretariat would continue to be provided by UN DESA, and that the Forum should have a stable and sustainable basis with appropriate staffing and resources, in accordance with UN budgetary procedures. This is reflected in a strengthened request for the Secretary-General to submit a proposal to the General Assembly to ensure sustainable funding for the Forum through a mix of core UN funding and voluntary contributions (whereas previous language only asked for proposals on future funding).

In the Follow-up section, the Secretary-General’s report on WSIS follow-up, which also incorporates updates on implementation of the Global Digital Compact (GDC), is now requested on a biennial basis, with a clear request for both the CSTD and ECOSOC to consider it. This marks a notable shift in the follow-up and review framework.

UN launches Digital Cooperation Portal to accelerate GDC action

The UN has launched the Digital Cooperation Portal, a new platform designed to accelerate collective action on the Global Digital Compact (GDC). The portal maps digital initiatives, connects partners worldwide, and tracks progress on key priorities, including AI governance, digital public infrastructure, human rights online, and inclusive digital economies.

An integrated AI Toolbox allows users to explore AI applications that help them analyse, connect, and enhance their digital cooperation projects. By submitting initiatives, stakeholders can increase visibility, support global coordination, and join a growing network working toward an inclusive digital future.

The portal is open to all stakeholders and covers the period from the GDC’s adoption in September 2024 through its high-level review in 2027.

LAST WEEK IN GENEVA

On Wednesday (3 December), Diplo, UNEP, and Giga co-organised an event at the Giga Connectivity Centre in Geneva titled ‘Digital inclusion by design: Leveraging existing infrastructure to leave no one behind’. The event looked at realities on the ground regarding connectivity and digital inclusion, and at concrete examples of how community anchor institutions such as post offices, schools, and libraries can contribute significantly to meaningful inclusion. Participants also called on policymakers at national and international levels to keep these institutions in mind when designing inclusion strategies or discussing frameworks such as the GDC and WSIS+20.

Organisations and institutions are invited to submit event proposals for the second edition of Geneva Security Week. Submissions are open until 6 January 2026. Co-organised once again by the UN Institute for Disarmament Research (UNIDIR) and the Swiss Federal Department of Foreign Affairs (FDFA), Geneva Security Week 2026 will take place from 4 to 8 May 2026 under the theme ‘Advancing Global Cooperation in Cyberspace’.

LOOKING AHEAD

OHCHR will hold its consultation on the rights-compatible use of digital tools in stakeholder engagement on 8–9 December, focusing on where technology can support engagement processes and where human involvement remains essential. The outcomes will feed into OHCHR’s report to the Human Rights Council in 2026.

The Inter-Parliamentary Union is organising ‘Navigating health misinformation in the age of AI’, a webinar that brings together parliamentarians, experts, and civil society to explore how misinformation and AI intersect to shape access to essential health services, particularly for women, children and adolescents.

Registration is open for the WSIS+20 virtual consultation on Revision 2 of the draft outcome document, to be held on 8 December. The session, organised by the Informal Multistakeholder Sounding Board, will gather targeted, paragraph-based input from stakeholders and provide process updates ahead of the General Assembly’s high-level meeting. Informal negotiations on Revision 2 will continue on 9–11 December.

READING CORNER

Rapid advances in AI are reshaping global development, raising urgent questions about whether all countries are prepared to benefit, or risk falling further behind.

Gaming and professional esports are rapidly emerging as powerful tools of global diplomacy, revealing how digital competition and shared virtual worlds can connect cultures, influence international relations, and empower new generations to shape narratives that transcend traditional borders.

This month’s edition takes you from Washington to Geneva and from COP30 to the WSIS+20 negotiations, tracing the major developments that are reshaping AI policy, online safety, and the resilience of the digital infrastructure we rely on every day.