November 2025 in retrospect

This month’s edition takes you from Washington to Geneva, COP30 to the WSIS+20 negotiations — tracing the major developments that are reshaping AI policy, online safety, and the resilience of the digital infrastructure we rely on every day.

Here’s what we unpacked in this edition.

Is the AI bubble about to burst? — Is AI now ‘too big to fail’? Will the US government bail out AI giants, and what would that mean for the global economy?

The global struggle to govern AI — Governments are racing to define rules, from national AI strategies to emerging global frameworks. We outline the latest moves.

WSIS+20 Rev 1 highlights — A look inside the document now guiding negotiations among UN member states ahead of the high-level meeting of the General Assembly on 16–17 December 2025.

Code meets climate — What UN member states discussed on AI and digital technologies at COP30.

Child safety online — From Australia to the EU, governments are rolling out new safeguards to protect children from online harms. We examine their approaches.

Digital draught — The Cloudflare outage exposed fragile dependencies in the global internet. We unpack what caused it — and what the incident reveals about digital resilience.

Last month in Geneva — Catch up on the discussions, events, and takeaways shaping international digital governance.

Snapshot: The developments that made waves in November

GLOBAL GOVERNANCE

France and Germany hosted a Summit on European Digital Sovereignty in Berlin to accelerate Europe’s digital independence. They presented a roadmap with seven priorities: simplifying regulation (including delaying some AI Act rules), ensuring fair cloud and digital markets, strengthening data sovereignty, advancing digital commons, expanding open-source digital public infrastructure, creating a Digital Sovereignty Task Force, and boosting frontier AI innovation. Over €12 billion in private investment was pledged. The summit was also accompanied by the launch of the European Network for Technological Resilience and Sovereignty (ETRS), which aims to reduce Europe’s reliance on foreign technologies, currently above 80%, through expert collaboration, mapping of technology dependencies, and support for evidence-based policymaking.

TECHNOLOGIES

The Dutch government has suspended its takeover of Nexperia, a Netherlands-based chipmaker owned by China’s Wingtech, following constructive talks with Chinese authorities. China has also begun releasing stockpiled chips to ease the resulting chip shortage.

Baidu unveiled two in-house AI chips: the M100, for efficient inference on mixture-of-experts models (due in early 2026), and the M300, for training trillion-parameter multimodal models (due in 2027). It also outlined clustered architectures (Tianchi256 in H1 2026; Tianchi512 in H2 2026) to scale inference via large interconnects.

IBM unveiled two quantum chips: Nighthawk (120 qubits, 218 tunable couplers), enabling circuits about 30% more complex, and Loon, a fault-tolerance testbed with six-way connectivity and long-range couplers.

INFRASTRUCTURE

Six EU member states (Austria, France, Germany, Hungary, Italy and Slovenia) have jointly urged that the proposed Digital Networks Act (DNA) be reconsidered, arguing that core elements of the proposal, including harmonised telecom-style regulation, network-fee dispute mechanisms and broader merger rules, cover areas that should remain under national control.

CYBERSECURITY

Roblox will roll out mandatory facial age estimation (starting December in select countries, expanding globally in January) and segment users into strict age bands to block chats with adult strangers. Under-13s remain barred from private messages unless parents opt in.

Eurofiber confirmed a breach of its French ATE customer platform and ticketing system via third-party software, saying services stayed up and banking data was safe.

The FCC is set to vote on rescinding rules adopted in January under Section 105 of CALEA, which required major carriers to harden their networks against unauthorised access and interception. The rules were introduced after the Salt Typhoon cyber-espionage campaign exposed vulnerabilities in US telecom networks.

The UK plans a Cyber Security and Resilience Bill to harden critical national infrastructure and the wider digital economy against rising cyber threats. About 1,000 essential service providers (health, energy, IT) would face strengthened standards, with potential expansion to 200+ data centres.

ECONOMIC

The UAE completed its first government transaction using the Digital Dirham, a CBDC pilot under the Central Bank’s Financial Infrastructure Transformation programme. In addition, the UAE’s central bank approved Zand AED, the country’s first regulated, multi-chain AED-backed stablecoin, issued by licensed bank Zand.

The Czech National Bank created a $1 million digital-assets test portfolio, holding bitcoin, a USD stablecoin, and a tokenised deposit, to gain hands-on experience with operations, security, and AML, with no plan for active investing.

Romania completed its first real-money pilot of the EU Digital Identity Wallet (EUDIW), with Banca Transilvania and BPC enabling a cardholder to authenticate a purchase via the wallet instead of an SMS one-time password (OTP) or a card reader.

The European Commission has opened a DMA probe into whether Google Search unfairly demotes news publishers via its ‘site reputation abuse’ policy, which can lower rankings for outlets hosting partner content.

The European Commission’s 2030 Consumer Agenda outlines a plan to enhance consumer protection, trust, and competitiveness while simplifying regulations for businesses.

Turkmenistan passed its first comprehensive virtual assets law, effective 1 January 2026, legalising crypto mining and permitting exchanges under strict state registration.

HUMAN RIGHTS

The Council of the EU has adopted new measures to accelerate the handling of cross-border data protection complaints, with harmonised admissibility criteria and strengthened procedural rights for both citizens and companies. A simplified cooperation process for straightforward cases will also reduce administrative burdens and enable faster resolutions.

India has begun enforcing its Digital Personal Data Protection Act 2023 through newly approved rules that set up initial governance structures, including a Data Protection Board, while granting organisations extra time to meet full compliance obligations.

LEGAL

OpenAI is resisting a narrowed demand from The New York Times for 20 million ChatGPT conversations, made as part of the Times’ lawsuit over alleged misuse of its content. The company warns that sharing the data could expose sensitive information and set far-reaching precedents for how AI platforms handle user privacy, data retention, and legal accountability.

A US judge let the Authors Guild’s lawsuit against OpenAI proceed, rejecting dismissal and allowing claims that ChatGPT’s summaries unlawfully replicate authors’ tone, plot, and characters.

Ireland’s media regulator has opened its first DSA investigation into X, probing whether users have accessible appeals and clear outcomes when content-removal requests are refused.

In a setback for the FTC, a US judge ruled that Meta does not currently wield monopoly power in social networking, scuttling a bid that could have forced the divestiture of Instagram and WhatsApp.

SOCIOCULTURAL

The European Commission launched the Culture Compass for Europe, a framework to put culture at the core of EU policy, foster identity and diversity, and bolster creative sectors.

China’s cyberspace regulators launched a crackdown on AI deepfakes impersonating public figures in livestream shopping, ordering platform cleanups and marketer accountability.

DEVELOPMENT

West and Central African ministers adopted the Cotonou Declaration to accelerate digital transformation by 2030, targeting a Single African Digital Market, widespread broadband, interoperable digital infrastructure, and harmonised rules for cybersecurity, data governance, and AI. The initiative emphasises human capital and innovation, aiming to equip 20 million people with digital skills, create two million digital jobs, and boost African-led AI and regional digital infrastructure.

ITU’s Measuring digital development: Facts and Figures 2025 report finds that while global connectivity continues to expand, with nearly 6 billion people online in 2025, 2.2 billion people remain offline, predominantly in low- and middle-income countries. Major gaps persist in connection quality, data usage, affordability, and digital skills, leaving many unable to fully benefit from the digital world.

Switzerland has formally associated with Horizon Europe, Digital Europe, and Euratom R&T, giving Swiss researchers EU-equivalent status to lead projects and win funding across all pillars, with effect from 1 January 2025.

Uzbekistan now grants full legal validity to personal data on the my.gov.uz public services portal, equating it with paper documents (effective 1 November). Citizens can access, share, and manage records entirely online.

This month in AI and data governance

Australia. Australia has unveiled a new National AI Plan designed to harness AI for economic growth, social inclusion and public-sector efficiency — while emphasising safety, trust and fairness in adoption. The plan mobilises substantial investment: hundreds of millions of AUD are channelled into research, infrastructure, skills development and programmes to help small and medium enterprises adopt AI; the government also plans to expand nationwide access to the technology.

Practical steps include establishing a national AI centre, supporting AI adoption among businesses and nonprofits, enhancing digital literacy through schools and community training, and integrating AI into public service delivery.

To ensure responsible use, the government will establish the AI Safety Institute (AISI), a national centre tasked with consolidating AI safety research, coordinating standards development, and advising both government and industry on best practices. The Institute will assess the safety of advanced AI models, promote resilience against misuse or accidents, and serve as a hub for international cooperation on AI governance and research.

Bangladesh. A new report on the country’s AI readiness highlights its relative strengths: a growing e-government infrastructure and generally high public trust in digital services. However, it also candidly maps structural challenges: uneven connectivity and unreliable power supply beyond major urban areas, a persistent digital divide (especially gender and urban–rural), limited high-end computing capacity, and insufficient data protection, cybersecurity and AI-related skills in many parts of society.

As part of its roadmap, the country plans to prioritise governance frameworks, capacity building, and inclusive deployment — especially ensuring that AI supports public-sector services in health, education, justice and social protection.

Belgium. Belgium joins a growing number of countries and public-sector organisations that have restricted or blocked China’s DeepSeek over security concerns. All Belgian federal government officials must cease using DeepSeek, effective 1 December, and all instances of DeepSeek must be removed from official devices.

Russia. At AI Journey, Russia’s premier AI conference, President Vladimir Putin announced the formation of a national AI task force, framing it as essential for minimising dependence on foreign AI. The plan includes building data centres, some potentially powered by small-scale nuclear plants, and using them to host generative AI models that protect national interests. Putin also argued that only domestically developed models should be used in sensitive sectors, such as national security, to prevent data leakage.

Singapore. Singapore has launched a Global AI Assurance Sandbox, now open to companies worldwide that want to run real-world pilot tests for AI systems.

This sandbox is guided by 11 governance principles aligned with international standards — including NIST’s AI Risk Management Framework and ISO/IEC 42001. By doing this, Singapore hopes to bridge the gap between fragmented national AI regulations and build shared benchmarks for safety and trust.

The EU. A big political storm is brewing in the EU. The European Commission has rolled out what it calls the Digital Omnibus, a package of proposals aimed at simplifying its digital rulebook. The move is welcomed by some as necessary to improve the competitiveness of the EU’s digital actors, and criticised by others over its potential negative implications in areas such as digital rights. The package consists of the Digital Omnibus Regulation Proposal and the Digital Omnibus on AI Regulation Proposal.

On a separate but related note, the European Commission has launched an AI whistle-blower tool, providing a secure and confidential channel for individuals across the EU to report suspected breaches of the AI Act, including unsafe or high‑risk AI deployments. With the tool, the EU aims to close gaps in the enforcement of the AI Act, increase the accountability of developers and deployers, and promote a culture of responsible AI use across member states. The tool is also intended to improve transparency, allowing regulators to react faster to potential violations without relying solely on audits or inspections.

What’s making waves in the EU? The Digital Omnibus on AI Regulation Proposal delays the application of ‘high-risk’ rules under the EU’s AI Act until 2027, giving Big Tech more time before stricter oversight takes effect. The start of the high-risk AI rules will now be linked to the availability of support tools, giving companies up to 16 months to comply. SMEs and small mid-cap companies will benefit from simplified documentation, broader access to regulatory sandboxes, and centralised oversight of general-purpose AI systems through the AI Office.

Cybersecurity reporting is also being simplified with a single-entry interface for incidents under multiple laws, while privacy rules are being clarified to support innovation without weakening protections under the GDPR. Cookie rules will be modernised to reduce repetitive consent requests and allow users to manage preferences more efficiently.

Data access will be enhanced through the consolidation of EU data legislation via the Data Union Strategy, targeted exemptions for smaller companies, and new guidance on contractual compliance. The measures aim to unlock high-quality datasets for AI and strengthen Europe’s innovation potential, while saving businesses billions and improving regulatory clarity.

The Digital Omnibus Regulation Proposal has implications for data protection in the EU. Proposed changes to the General Data Protection Regulation (GDPR) would change the definition of personal data and loosen the safeguards on when companies can use it, especially for AI training. Meanwhile, cookie consent is being simplified into a ‘one-click’ model that lasts up to six months.

Privacy and civil rights groups expressed concern that the proposed GDPR changes disproportionately benefit large technology firms. A coalition of 127 organisations has issued a public warning that this could become ‘the biggest rollback of digital fundamental rights in EU history.’

These proposals must go through the EU’s co-legislative process — Parliament and Council will debate, amend, and negotiate them. Given the controversy (support from industry, pushback from civil society), the final outcome could look very different from the Commission’s initial proposal.

The UK. The UK government has launched a major AI initiative to drive national AI growth, combining infrastructure investment, business support, and research funding. An immediate £150 million GPU deployment in Northamptonshire kicks off an £18 billion programme over five years to build sovereign AI capacity. Through a £100 million advance market commitment, the state will act as a first customer for domestic AI hardware startups, helping de-risk innovation and boost competitiveness.

The plan includes AI Growth Zones, with a flagship site in South Wales expected to create over 5,000 jobs, and expanded access to high-performance computing for universities, startups, and research organisations. A dedicated £137 million “AI for Science” strand will accelerate breakthroughs in drug discovery, clean energy, and advanced materials, ensuring AI drives both economic growth and public value outcomes.

The USA. The shadow of regulation-limiting politics looms large in the USA. Trump-aligned Republicans have again pushed for a moratorium on state-level AI regulation. Proponents want to block states from passing their own AI laws, arguing that a fragmented regulatory landscape would hinder innovation. One version of the proposal would tie federal broadband funding to states’ willingness to forgo AI rules, effectively punishing any state that tries to regulate. Yet this push is not unopposed: more than 260 state lawmakers from across the US, Republican and Democrat alike, have decried the moratorium.

On 24 November 2025, the President formally established the Genesis Mission by Executive Order, tasking the US Department of Energy (DOE) with leading a nationwide AI-driven scientific research effort. The Mission will build a unified ‘American Science and Security Platform,’ combining the supercomputers of the DOE’s 17 national laboratories, federal scientific datasets accumulated over decades, and secure high-performance computing capacity, creating what the administration describes as ‘the world’s most complex and powerful scientific instrument ever built.’

Under the plan, the platform will be used to develop ‘scientific foundation models’ and AI agents capable of automating experiment design, running simulations, testing hypotheses and accelerating discoveries in key strategic fields: biotechnology, advanced materials, critical minerals, quantum information science, nuclear fission and fusion, space exploration, semiconductors and microelectronics.

The initiative is framed as central to energy security, technological leadership and national competitiveness — the administration argues that despite decades of rising research funding, scientific output per dollar has stagnated, and AI can radically boost research productivity within a decade.

To deliver on these ambitions, the Executive Order sets a governance structure: the DOE Secretary oversees implementation; the Assistant to the President for Science and Technology will coordinate across agencies; and DOE may partner with private sector firms, academia and other stakeholders to integrate data, compute, and infrastructure.

UAE and Africa. The AI for Development Initiative has been announced to advance digital infrastructure across Africa, backed by a US$1 billion commitment from the UAE. According to official statements, the initiative plans to channel resources to sectors such as education, agriculture, climate adaptation, infrastructure and governance, helping African governments to adopt AI-driven solutions even where domestic AI capacity remains limited.

Though full details remain to be seen (e.g. selection of partner countries, governance and oversight mechanisms), the scale and ambition of the initiative signal the UAE’s aspiration to act not just as an AI adoption hub, but as a regional and global enabler of AI-enabled development.

Uzbekistan. Uzbekistan has announced the launch of the ‘5 million AI leaders’ project to develop its domestic AI capabilities. As part of this plan, the government will integrate AI-focused curricula into schools, vocational training and universities; train 4.75 million students, 150,000 teachers and 100,000 public servants; and launch large-scale competitions for AI startups and talent.

The programme also includes building high-performance computing infrastructure (in partnership with a major tech company), establishing a national AI transfer office abroad, and creating state-of-the-art laboratories in educational institutions — all intended to accelerate adoption of AI across sectors.

The government frames this as central to modernising public administration and positioning Uzbekistan among the world’s top 50 AI-ready countries.

Is the AI bubble about to burst?

The AI bubble is inflating to the point of bursting. Five causes explain how we got here, and five scenarios suggest how a potential burst might be prevented or managed.

The frenzy of AI investment did not happen in a vacuum. Several forces have contributed to our tendency toward overvaluation and unrealistic expectations.

1st cause: The media hype machine. AI has been framed as the inevitable future of humanity. This narrative has created a powerful Fear of Missing Out (FOMO), prompting companies and governments to invest heavily in AI, often without a sober reality check.

2nd cause: Diminishing returns on computing power and data. The dominant, simple formula of the past few years has been: more compute (read: more Nvidia GPUs) + more data = better AI. This belief has led to massive AI factories: hyper-scale data centres with an alarming electricity and water footprint. Simply stacking more GPUs now yields only incremental improvements.

3rd cause: Large language models’ (LLMs’) logical and conceptual limits. LLMs are encountering structural limitations that cannot be resolved simply by scaling data and compute. Despite the dominant narrative of imminent superintelligence, many leading researchers are sceptical that today’s LLMs can simply be ‘grown’ into human-level Artificial General Intelligence (AGI).

4th cause: Slow AI transformation. Most AI investments are still based on potential, not on realised, measurable value. The technology is advancing faster than society’s ability to absorb it. Previous AI winters in the 1970s and late 1980s followed periods of over-promising and under-delivering, leading to sharp cuts in funding and industrial collapse.

5th cause: Massive cost discrepancies. The latest wave of open-source models has shown that models built for a few million dollars can match or beat models costing hundreds of millions. This raises questions about the efficiency and necessity of current spending on proprietary AI.

Five scenarios outline how the current hype may resolve.

1st Scenario: The rational pivot (the textbook solution). A market correction could push AI development away from the assumption that more compute automatically yields better models. Instead, the field would shift toward smarter architectures, deeper integration with human knowledge and institutions, and smaller, specialised, often open-source systems. Policy is already moving this way: the US AI Action Plan frames open-weight models as strategic assets. Yet this pivot faces resistance from entrenched proprietary models, dependence on closed data, and unresolved debates about how creators of human knowledge should be compensated.

2nd Scenario: ‘Too big to fail’ (the 2008 bailout playbook). Another outcome treats major AI companies as essential economic infrastructure. Industry leaders already warn of “irrationality” in current investment levels, suggesting that a weak quarter from a key firm could rattle global markets. In this scenario, governments provide implicit or explicit backstops—cheap credit, favourable regulation, or public–private infrastructure deals—on the logic that AI giants are systemically important.

3rd Scenario: Geopolitical justification (China ante portas). Competition with China could become the dominant rationale for sustained public investment. China’s rapid progress, including low-cost open models like DeepSeek R1, is already prompting “Sputnik moment” comparisons. Support for national champions is then framed as a matter of technological sovereignty, shifting risk from investors to taxpayers.

4th Scenario: AI monopolisation (the Wall Street gambit). If smaller firms fail to monetise, AI capabilities could consolidate into a handful of tech giants, mirroring past monopolisation in search, social media, and cloud. Nvidia’s dominance in AI hardware reinforces this dynamic. Open-source models slow but do not prevent consolidation.

5th Scenario: AI winter and new digital toys. Finally, a mild AI winter could emerge as investment cools and attention turns to new frontiers—quantum computing, digital twins, immersive reality. AI would remain a vital infrastructure but no longer the centre of speculative hype.

The next few years will show whether AI becomes another over-priced digital toy – or a more measured, open, and sustainable part of our economic and political infrastructure.

This text was adapted from Dr Jovan Kurbalija’s article ‘Is the AI bubble about to burst? Five causes and five scenarios’.

Recalibrating the digital agenda: Highlights from the WSIS+20 Rev 1 document

A revised version of the WSIS+20 outcome document – Revision 1 – was published on 7 November by the co-facilitators of the intergovernmental process. The document will serve as the basis for continued negotiations among UN member states ahead of the high-level meeting of the General Assembly on 16–17 December 2025.

While maintaining the overall structure of the Zero Draft released in August, Revision 1 introduces several changes and new elements.

The new text includes revised – and in several places stronger – language emphasising the need to close rather than bridge digital divides, presented as multidimensional challenges that must be addressed to achieve the WSIS vision.

At the same time, some issues were deprioritised: for instance, references to e-waste and the call for global reporting standards on environmental impacts were removed from the environment section.

Several new elements also appear. In the enabling environments section, states are urged to take steps towards avoiding or refraining from unilateral measures inconsistent with international law.

There is also a new recognition of the importance of inclusive participation in standard-setting.

The financial mechanisms section invites the Secretary-General to consider establishing a task force on future financial mechanisms for digital development, with outcomes to be reported to the UNGA at its 81st session.

The internet governance section now includes a reference to the NetMundial+10 Guidelines.

Language on the Internet Governance Forum (IGF) remains largely consistent with the Zero Draft, including with regard to making the forum a permanent one and requesting the Secretary-General to make proposals concerning the IGF’s future funding. New text invites the IGF to further strengthen the engagement of governments and other stakeholders from developing countries in discussions on internet governance and emerging technologies.

Several areas saw shifts in tone. Language in the human rights section has been softened in parts (e.g. references to surveillance safeguards and to threats against journalists have been removed).

There is also a change in how the interplay between WSIS and the GDC is framed: the emphasis is now on alignment between WSIS and GDC processes rather than integration. For instance, whereas the GDC-WSIS joint implementation roadmap was initially requested to ‘integrate GDC commitments into the WSIS architecture’, it should now ‘aim to strengthen coherence between WSIS and GDC processes’. Corresponding adjustments are also reflected in the roles of the Economic and Social Council and the Commission on Science and Technology for Development.

What’s next? Registration is now open for the next WSIS+20 virtual stakeholder consultation, scheduled for Monday, 8 December 2025, to gather feedback on the revised draft outcome document (Rev2). Participants must register by Sunday, 7 December at 11:59 p.m. EST.

An initial Rev2 response and framework document will guide the session and will be posted as soon as it is available.

This consultation forms part of the preparations for the High-level Meeting of the General Assembly on the overall review of the implementation of the outcomes of the World Summit on the Information Society (WSIS+20), which will take place on 16 and 17 December 2025.

Code meets climate: AI and digital at COP30

COP30, the 30th annual UN climate meeting, officially wrapped up in Belém on Saturday, 22 November. As the dust settles, we take a closer look at the outcomes with implications for digital technologies and AI.

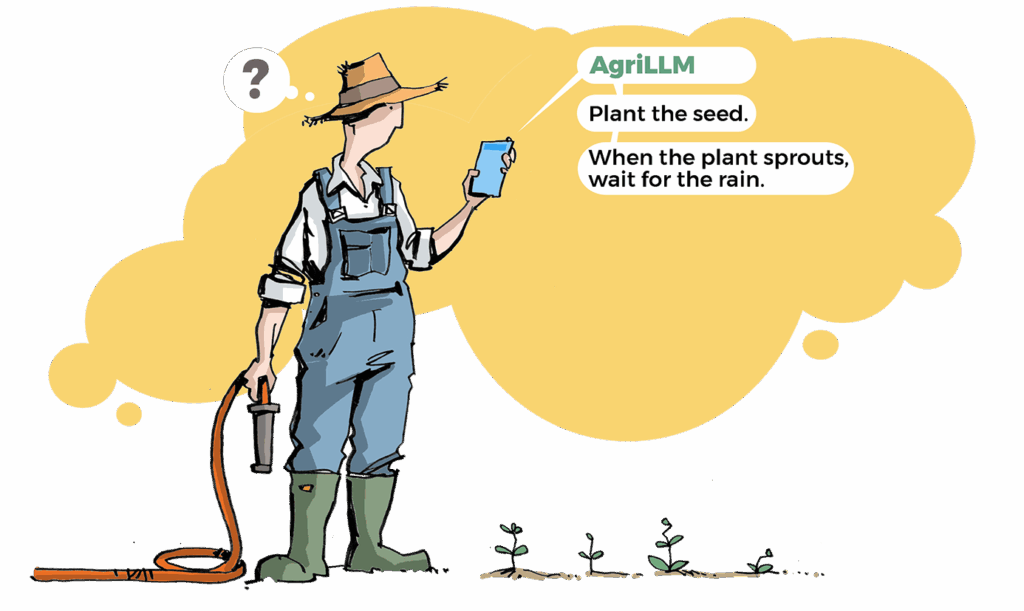

In agriculture, momentum is clearly building. Brazil and the UAE unveiled AgriLLM, the first open-source large language model designed specifically for agriculture, developed with support from international research and innovation partners. The goal is to give governments and local organisations a shared digital foundation to build tools that deliver timely, locally relevant advice to farmers. Alongside this, the AIM for Scale initiative aims to provide digital advisory services, including climate forecasts and crop insights, to 100 million farmers.

Cities and infrastructure are also stepping deeper into digital transformation. Through the Infrastructure Resilience Development Fund, insurers, development banks, and private investors are pooling capital to finance climate-resilient infrastructure in emerging economies — from clean energy and water systems to the digital networks needed to keep communities connected and protected during climate shocks.

The most explicit digital agenda surfaced under the axis of enablers and accelerators. Brazil and its partners launched the world’s first Digital Infrastructure for Climate Action, a global initiative to help countries adopt open digital public goods in areas such as disaster response, water management, and climate-resilient agriculture. The accompanying innovation challenge is already backing new solutions designed to scale.

The Green Digital Action Hub was also launched and will help countries measure and reduce the environmental footprint of technology, while expanding access to tools that use technology for sustainability.

Training and capacity building received attention through the new AI Climate Institute, which will help the Global South develop and deploy AI applications suited to local needs — particularly lightweight, energy-efficient models.

The Nature’s Intelligence Studio, grounded in the Amazon, will support nature-inspired innovation and introduce open AI tools that help match real-world sustainability challenges with bio-based solutions.

Finally, COP30 marked a first by placing information integrity firmly on the climate action agenda. With mis- and disinformation recognised as a top global risk, governments and partners launched a declaration and new multistakeholder process aimed at strengthening transparency, shared accountability, and public trust in climate information — including the digital platforms that shape it.

The big picture. Across all strands, COP30 sent a clear message: the digital layer is not optional; it is embedded in the core delivery of climate action.

From Australia to the EU: New measures shield children from online harms

Bans on social media for under‑16s are advancing globally, and Australia has gone the furthest. Regulators there have now widened the scope of the ban to include platforms such as Twitch, which is classified as age-restricted due to its social interaction features. Meta has begun notifying Australian users believed to be under 16 that their Facebook and Instagram accounts will be deactivated starting 4 December, almost a week before the law officially takes effect on 10 December.

To support families through the transition, the government has established a Parent Advisory Group, bringing together organisations representing diverse households to help carers guide children on online safety, communication, and safe ways to connect digitally.

The ban has already provoked opposition. Major social media platforms have criticised it but signalled that they will comply, with YouTube the latest to fall in line. Meanwhile, two 15‑year-olds, backed by the advocacy group Digital Freedom Project, have launched a constitutional challenge to the law in the High Court. They argue that it unfairly limits under‑16s’ ability to participate in public debate and political expression, effectively silencing young voices on issues that affect them directly.

Malaysia also plans to ban social media accounts for people under 16 starting in 2026. The Cabinet approved the measure to protect children from online harms such as cyberbullying, scams, and sexual exploitation. Authorities are considering approaches such as electronic age verification using ID cards or passports, although the exact enforcement date has not been set.

EU lawmakers have proposed similar protections. The European Parliament adopted a non-legislative report calling for a harmonised EU minimum age of 16 for social media, video-sharing platforms, and AI companions, with access for 13–16-year-olds allowed only with parental consent. Lawmakers back the Commission’s work on an EU age-verification app and the European digital identity wallet (eID), but stress that age assurance must be accurate and privacy-preserving, and that it does not absolve platforms of the responsibility to design services that are safe by default.

Beyond age restrictions, the EU is strengthening broader safeguards. Member states have agreed on a Council position for a regulation to prevent and combat child sexual abuse online, setting out concrete, enforceable obligations for online providers. Platforms will have to carry out formal risk assessments to identify how their services could be used to disseminate child sexual abuse material (CSAM) or to solicit children, and then put in place mitigation measures — from safer default privacy settings for children and user reporting tools, to technical safeguards. Member states will designate coordinating and competent national authorities that can review those risk assessments, force providers to implement mitigations and, where necessary, impose penalty payments for non-compliance.

Importantly, the Council introduces a three-tier risk classification for online services (high, medium, low). Services judged high-risk against objective criteria, such as service type, can be required not only to apply stricter mitigations but also to contribute to the development of technologies to reduce those risks. Search engines can be ordered to delist results, and competent authorities may require the removal of CSAM or the blocking of access to it. The position also retains, and seeks to make permanent, an existing temporary exemption that allows providers (for example, messaging services) to scan content for CSAM voluntarily; that exemption is currently due to expire on 3 April 2026.

To operationalise and coordinate enforcement, the regulation foresees the creation of a new EU agency — the EU Centre on Child Sexual Abuse. The Centre will process and assess information reported by providers, operate a database of provider reports and a database of child-sexual-abuse indicators that companies can use for voluntary detection activities, help victims request removal or disabling of access to material depicting them, and share relevant information with Europol and national law enforcement. The Centre’s seat is not yet decided; that will be negotiated with the European Parliament. With the Council’s position agreed, formal trilogue negotiations with Parliament can begin (the Parliament adopted its position in November 2023).

The European Parliament’s report also addresses everyday online risks. It calls for bans on the most harmful addictive practices (infinite scroll, autoplay, reward loops, pull-to-refresh), for other addictive features to be disabled by default for minors, for engagement-based recommender algorithms to be outlawed for minors, and for clear DSA rules to be extended to online video platforms. Gaming features that mimic gambling, such as loot boxes, in-app randomised rewards and pay-to-progress mechanics, should be banned. The report also tackles commercial exploitation, urging a prohibition on platforms offering financial incentives for kidfluencing (children acting as influencers).

MEPs singled out generative AI risks — deepfakes, companionship chatbots, AI agents and AI-powered nudity apps that create non-consensual manipulated imagery — calling for urgent legal and ethical action. Rapporteur Christel Schaldemose framed the measures as drawing a clear line: platforms are ‘not designed for children’ and the experiment of letting addictive, manipulative design target minors must end.

A new multilateral initiative is also underway: the eSafety Commissioner (Australia), Ofcom (UK) and the European Commission’s DG CNECT will cooperate to protect children’s rights, safety and privacy online.

The regulators will enforce online safety laws, require platforms to assess and mitigate risks to children, promote privacy-preserving technologies such as age verification, and partner with civil society and academia to keep regulatory approaches grounded in real-world dynamics.

A new trilateral technical group will be established to explore how age-assurance systems can work reliably and interoperably, strengthening evidence for future regulatory action.

The overall goal is to support children and families in using the internet more safely and confidently, by fostering digital literacy and critical thinking, and by making online platforms more accountable.

Digital draught: When the cloud goes offline

On 18 November, a major Cloudflare outage took a large share of websites and online services offline for several hours. The culprit was an internal misconfiguration: a routine permissions change in a ClickHouse database produced a malformed ‘feature file’ used by Cloudflare’s Bot Management tool. The file unexpectedly doubled in size and, when pushed across Cloudflare’s global network, exceeded built‑in limits, triggering cascading failures.

As engineers worked to isolate the bad file, traffic began to recover. By mid‑afternoon, Cloudflare had halted propagation, replaced the corrupted file, and restarted key systems; full network recovery followed hours later.
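The failure mode described above, a configuration file that outgrows a fixed limit in the software consuming it, can be illustrated with a minimal Python sketch. This is not Cloudflare’s code: the 200-entry limit, the names `load_features` and `apply_update`, and the ‘keep the last known-good file’ fallback are hypothetical choices made for illustration.

```python
class FeatureFileTooLarge(Exception):
    """Raised when a feature file has more entries than the consumer preallocated."""


MAX_FEATURES = 200  # hypothetical hard limit, for illustration only


def load_features(lines, max_features=MAX_FEATURES):
    # Parse one feature name per non-empty line.
    features = [line.strip() for line in lines if line.strip()]
    # A brittle consumer stops here: an oversized file is fatal.
    if len(features) > max_features:
        raise FeatureFileTooLarge(
            f"{len(features)} features exceeds the limit of {max_features}"
        )
    return features


def apply_update(current, new_lines, max_features=MAX_FEATURES):
    """Fail-safe update: reject a bad file and keep serving the last known-good one."""
    try:
        return load_features(new_lines, max_features), True
    except FeatureFileTooLarge:
        return current, False


if __name__ == "__main__":
    good_file = [f"feature_{i}" for i in range(120)]
    config, accepted = apply_update([], good_file)
    print(accepted, len(config))  # True 120

    # Simulate the malformed file: every entry appears twice, doubling its size.
    bad_file = good_file + good_file
    config, accepted = apply_update(config, bad_file)
    print(accepted, len(config))  # False 120
```

The design choice the sketch highlights is validating a new configuration before it replaces the old one, so that a bad file degrades to ‘no update’ rather than to a crash that propagates across the network.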

The bigger picture. The incident is not isolated. Only last month, Microsoft Azure suffered a multi-hour outage that disrupted enterprise clients across Europe and the US, while Amazon Web Services (AWS) experienced intermittent downtime affecting streaming platforms and e-commerce sites. These events, combined with the Cloudflare blackout, underscore the fragility of global cloud infrastructure.

The outage comes at a politically sensitive moment in Europe’s cloud policy debate. Regulators in Brussels are already probing AWS and Microsoft Azure to determine whether they should be designated as ‘gatekeepers’ under the EU’s Digital Markets Act (DMA). These investigations aim to assess whether their dominance in cloud infrastructure gives them outsized control, even though, technically, they do not meet the Act’s usual size thresholds.

This recurring pattern highlights a major vulnerability in the modern internet, one born from an overreliance on a handful of critical providers. When one of these central pillars stumbles, whether from a misconfiguration, software bug, or regional issue, the effects ripple outward. The very concentration of services that enables efficiency and scale also creates single points of failure with cascading consequences.

Last month in Geneva: Developments, events and takeaways

Geneva’s digital governance scene was busy in November. Here’s what we have tried to follow.

CERN unveils AI strategy to advance research and operations

CERN has approved a comprehensive AI strategy to guide its use across research, operations, and administration. The strategy unites initiatives under a coherent framework to promote responsible and impactful AI for science and operational excellence.

It focuses on four main goals: accelerating scientific discovery, improving productivity and reliability, attracting and developing talent, and enabling AI at scale through strategic partnerships with industry and member states.

Common tools and shared experiences across sectors will strengthen CERN’s community and ensure effective deployment.

Implementation will involve prioritised plans and collaboration with EU programmes, industry, and member states to build capacity, secure funding, and expand infrastructure. Applications of AI will support high-energy physics experiments, future accelerators, detectors, and data-driven decision-making.

The UN Commission on Science and Technology for Development (CSTD) 2025–2026 inter-sessional panel

The CSTD held its 2025–2026 inter-sessional panel on 17 November at the Palais des Nations in Geneva. The agenda focused on science, technology and innovation in the age of AI, with expert contributions from academia, international organisations, and the private sector. Delegations also reviewed progress on WSIS implementation in the context of the WSIS+20 review, and received updates on the implementation of the Global Digital Compact (GDC) and on ongoing data governance work within the dedicated CSTD working group. The findings and recommendations of the panel will be considered at the twenty-ninth session of the Commission in 2026.

Fourth meeting of the UN CSTD multi-stakeholder working group on data governance at all levels

The CSTD’s multi-stakeholder working group on data governance at all levels met for the fourth time from 18 to 19 November. The programme began with opening remarks and the formal adoption of the agenda. The UNCTAD secretariat then provided an overview of inputs submitted since the last session, highlighting emerging areas of alignment and divergence among stakeholders.

The meeting moved into substantive deliberations organised around four tracks covering key dimensions of data governance: principles applicable at all levels; interoperability between systems; sharing the benefits of data; and enabling safe, secure and trusted data flows, including across borders. These discussions are designed to explore practical approaches, existing challenges, and potential pathways toward consensus.

Following a lunch break, delegates reconvened for a full afternoon plenary to continue track-based exchanges, with opportunities for interaction among member states, the private sector, civil society, academia, the technical community, and international organisations.

The second day focused on the working group’s upcoming milestones. Delegations considered the outline, timeline, and expectations for the progress report to the General Assembly, as well as the process for selecting the next Chair of the working group. The session concluded with agreement on the schedule of future meetings, followed by any other business raised by participants.

Innovations Dialogue 2025: Neurotechnologies and their implications for international peace and security

On 24 November, UNIDIR hosted its Innovations Dialogue on neurotechnologies and their implications for international peace and security in Geneva and online. Experts from neuroscience, law, ethics, and security policy discussed developments such as brain-computer interfaces and cognitive enhancement tools, exploring both their potential applications and the challenges they present, including ethical and security considerations. The event included a poster exhibition on responsible use and governance approaches.

14th UN Forum on Business and Human Rights

The 14th UN Forum on Business and Human Rights was held from 24 to 26 November in Geneva and online, under the theme ‘Accelerating action on business and human rights amidst crises and transformations.’ Digital issues featured on the agenda, including safeguarding human rights in the age of AI and human rights in platform work in the Asia-Pacific region amid ongoing digital transformation. A side event also took a closer look at the labour behind AI.