October 2025 in retrospect

This month’s stories trace the shifting rules of the digital world — from child protection online to the global tug-of-war over rare earths, AI and cybercrime.

Here’s what we unpacked in this edition.

New rules for the digital playground — Countries are tightening online safety measures and redefining digital boundaries for young users.

The global struggle to steer AI — We examined how states and tech giants are competing to shape AI’s future.

Rare-earth respite — From trade corridors to export bans, nations are recalibrating their approach to securing the minerals that underpin the digital economy.

When the cloud falters — What the AWS and Microsoft outages reveal about resilience, dependence, and the risks of an increasingly centralised internet.

Promise and peril: The world signs on to the UN’s new cybercrime treaty in Hanoi — A milestone moment for global digital policy, but one raising deep questions about rights, jurisdiction, and enforcement.

Last month in Geneva — Catch up on the discussions, events, and takeaways shaping international digital governance.

Snapshot: The developments that made waves in October

GLOBAL GOVERNANCE

The Co-Facilitators of the WSIS+20 process released Revision 1 of the outcome document for the twenty-year review of the implementation of the World Summit on the Information Society, reflecting the input and proposals gathered from stakeholders and member states throughout the consultative process to date.

ARTIFICIAL INTELLIGENCE

Chinese President Xi Jinping reiterated China’s proposal to establish a global AI body, titled World Artificial Intelligence Cooperation Organisation (WAICO), to cooperate on development strategies, governance rules and technological standards.

The European Commission is considering pausing or easing certain elements of the EU AI Act, the bloc’s flagship legislation designed to regulate high-risk AI systems. The potential adjustments could include delayed compliance deadlines, softer obligations on high-risk applications, and a phased approach to enforcement.

The European Commission is evaluating whether ChatGPT should be classified as a Very Large Online Search Engine (VLOSE) under the Digital Services Act (DSA), a move that would trigger extensive transparency, audit, and risk-assessment duties and potentially overlap with forthcoming AI Act requirements.

The Superintelligence Statement, endorsed by leading researchers and technologists, urges careful oversight and governance of advanced AI systems to prevent unintended harms—echoing the concerns of regulators and governments worldwide.

TECHNOLOGIES

The 2025 Nobel Prize in Physics was awarded to John Clarke, Michel H. Devoret, and John M. Martinis for proving that key quantum effects—tunnelling and energy quantisation—can occur in hand-sized superconducting circuits, advancing fundamental physics and laying foundations for modern quantum technologies.

UNESCO has approved the world’s first global framework on the ethics of neurotechnology, setting new standards to ensure that advances in brain science respect human rights and dignity.

The US–Korea Science and Technology Roadmap broadens cooperation on AI, quantum, 6G, research security, and civil space, aiming for pro-innovation policy alignment and ‘trusted exports’.

US President Donald Trump and Chinese President Xi Jinping signed a deal that effectively rolls back Beijing’s recent export controls on rare earth elements and other critical minerals.

The US–Japan Framework for Securing the Supply of Critical Minerals and Rare Earths commits both nations to joint mining, processing, and stockpiling—an effort to reduce reliance on China and strengthen Indo-Pacific supply-chain resilience.

G7 energy ministers agreed to establish a critical minerals production alliance, backed by more than two dozen new investments and partnerships.

China’s commerce ministry signalled a softened position in the Nexperia case and moved to issue case-by-case exemptions to its export curbs on Nexperia products, while the Netherlands indicated it could suspend its emergency control measures if normal supplies resume.

The White House confirmed the most advanced AI chips remain off-limits to Chinese buyers. US officials also signalled they may block sales of scaled-down models, further tightening export controls. China is reportedly tightening customs checks on Nvidia’s China-market AI processors at major ports, underscoring how export controls and counter-measures are now a permanent feature of the supply chain.

INFRASTRUCTURE

The European Commission has approved the creation of the Digital Commons European Digital Infrastructure Consortium, bringing together France, Germany, the Netherlands, and Italy to develop open, interoperable digital systems that enhance Europe’s sovereignty and support governments and businesses.

CYBERSECURITY

The UN Convention against Cybercrime, the first global treaty to tackle cybercrime, was opened for signature in a high-level ceremony during which 72 countries signed.

Brazil is advancing its first national cybersecurity law, the Cybersecurity Legal Framework, to centralise oversight and bolster protections for citizens and businesses amid recent high-profile cyberattacks on hospitals and personal data.

Discord confirmed a cyberattack on a third-party age-verification provider that exposed official ID images and personal data of approximately 70,000 users, including partial credit card details and customer service chats, although no full card numbers, passwords, or broader activity were compromised; the platform itself remained secure.

Australia’s Critical Infrastructure Annual Risk Review, released by the Department of Home Affairs, warns of growing vulnerabilities in essential sectors like energy, healthcare, banking, aviation, and digital systems due to geopolitical tensions, supply chain disruptions, cyber threats, climate risks, physical sabotage, malicious insiders, and eroding public trust.

The International Committee of the Red Cross (ICRC) and the Geneva Academy have released a joint report analysing the application of international humanitarian law (IHL) to civilian involvement in cyber and digital activities during armed conflicts, drawing on global research and expert input.

Denmark, currently holding the EU Council presidency, has withdrawn its proposal to require scanning of private encrypted messages for child sexual abuse material (CSAM), abandoning the so-called ‘Chat Control’ proposal. Instead, Denmark will now support a voluntary regime for CSAM detection.

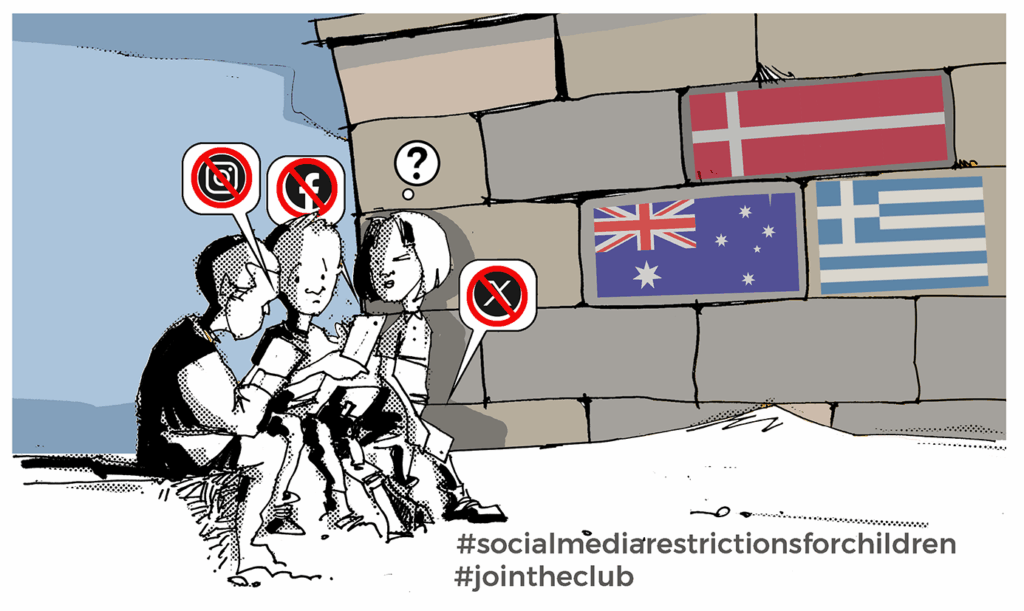

A growing number of countries are moving to ban minors from major platforms, with Denmark preparing to bar under-15s, and New Zealand and Australia under-16s.

The Danish EU Council presidency advanced the Jutland Declaration, making age verification central to online safety and backing an EU-wide system linked to the European Digital Identity Wallet. EU ministers endorsed a common verification layer while allowing each country to set its own age limit.

ECONOMIC

A coalition of African, Caribbean and Pacific states led by Barbados has submitted a proposal to the World Trade Organization (WTO) seeking to extend the longstanding moratorium on customs duties applied to electronic transmissions.

France’s National Assembly has approved raising its digital services tax on major US tech giants like Google, Apple, Meta, and Amazon from 3% to 6%, despite Economy Minister Roland Lescure warning of potential US trade retaliation and labelling the hike ‘disproportionate’.

French lawmakers narrowly approved centrist MP Jean-Paul Matteï’s amendment to expand the wealth tax to ‘unproductive assets’ like luxury goods, property, and digital currencies, passing 163-150 in the National Assembly with backing from socialists and far-right members.

Japan’s Financial Services Agency will support a pilot by MUFG, Sumitomo Mitsui, and Mizuho Bank to jointly issue yen-backed stablecoins for corporate payments.

HUMAN RIGHTS

UNESCO’s Office for the Caribbean has launched a regional survey on gender and AI to document online violence, privacy concerns, and algorithmic bias affecting women and girls, with results feeding into a regional policy brief.

A UN briefing warns of the profound human rights violations from a 48-hour nationwide telecommunications shutdown in Afghanistan, which severely disrupted access to healthcare, emergency services, banking, education, and daily communications, exacerbating the population’s existing vulnerabilities.

Tanzania imposed a nationwide internet shutdown during its tense general election, severely disrupting connectivity and blocking platforms like X, WhatsApp, and Instagram, as confirmed by NetBlocks. The shutdown made it harder for journalists, election observers, and citizens to share updates amid reports of protests and unrest; the government also deployed the army, raising alarms over information control.

France’s Défenseur des Droits ruled that Meta’s Facebook algorithm indirectly discriminates by gender in job advertising, violating anti-discrimination laws, following a complaint by Global Witness and women’s rights groups Fondation des Femmes and Femmes Ingénieures.

Privacy advocates have proposed a US Internet Bill of Rights to safeguard digital freedoms against expanding online censorship laws like the UK’s Online Safety Act, the EU’s Digital Services Act, and US bills such as KOSA and the STOP HATE Act, which they argue undermine civil liberties by enabling government and corporate speech control under safety pretexts.

LEGAL

A landmark US federal court ruling has permanently barred the Israeli spyware company NSO Group from targeting WhatsApp users. The injunction prohibits NSO from accessing WhatsApp’s systems, effectively restricting the company’s operations on the platform.

An Advocate General of the CJEU has opined that national competition authorities can lawfully seize employee emails during investigations without prior judicial approval, provided a strict legal framework and effective safeguards are in place.

Australia’s Albanese Government has rejected introducing a Text and Data Mining Exception to its copyright laws, prioritising protections for local creators despite the tech sector’s demands for an AI exemption that would allow unlicensed use of copyrighted materials.

A UK court largely sided with Stability AI in its legal battle with Getty Images, ruling that the company’s use of Getty’s images to train its AI models did not violate copyright or trademark law.

SOCIOCULTURAL

Singapore’s Parliament passed the Online Safety (Relief and Accountability) Bill, establishing a new Online Safety Commission with powers to issue takedown and account‑restriction orders for harmful online content.

The European Commission has issued preliminary findings that Meta and TikTok breached transparency and researcher-access obligations under the DSA, specifically by making it difficult for users to flag illegal content and limiting independent scrutiny of platform data.

In the Netherlands, a court has granted Meta until 31 December 2025 to implement simpler opt-out options for algorithm-driven timelines on Facebook and Instagram, following an earlier ruling that Meta’s timeline design breached DSA obligations.

Australia will require streaming services with over 1 million local subscribers to invest at least 10% of their local spending in new Australian content, including drama, children’s shows, documentaries, arts, and educational programs.

DEVELOPMENT

The UAE’s Artificial Intelligence, Digital Economy, and Remote Work Applications Office, in partnership with Google, launched the ‘AI for All’ initiative on 29 October 2025, to provide comprehensive AI skills training across the country through 2026, targeting students, teachers, university learners, government employees, SMEs, creatives, and content creators.

KEPSA and Microsoft launched the Kenya AI Skilling Alliance (KAISA) in Nairobi, a national platform uniting the government, academia, private sector, and partners to foster inclusive and responsible AI adoption amid Kenya’s AI ecosystem fragmentation.

The UN Development Programme (UNDP) has expanded its Blockchain Academy, in partnership with the Algorand Foundation, to train 24,000 personnel worldwide from UNDP, UN Volunteers, and the UN Capital Development Fund (UNCDF), enhancing blockchain knowledge and application for sustainable development goals.

New rules for the digital playground

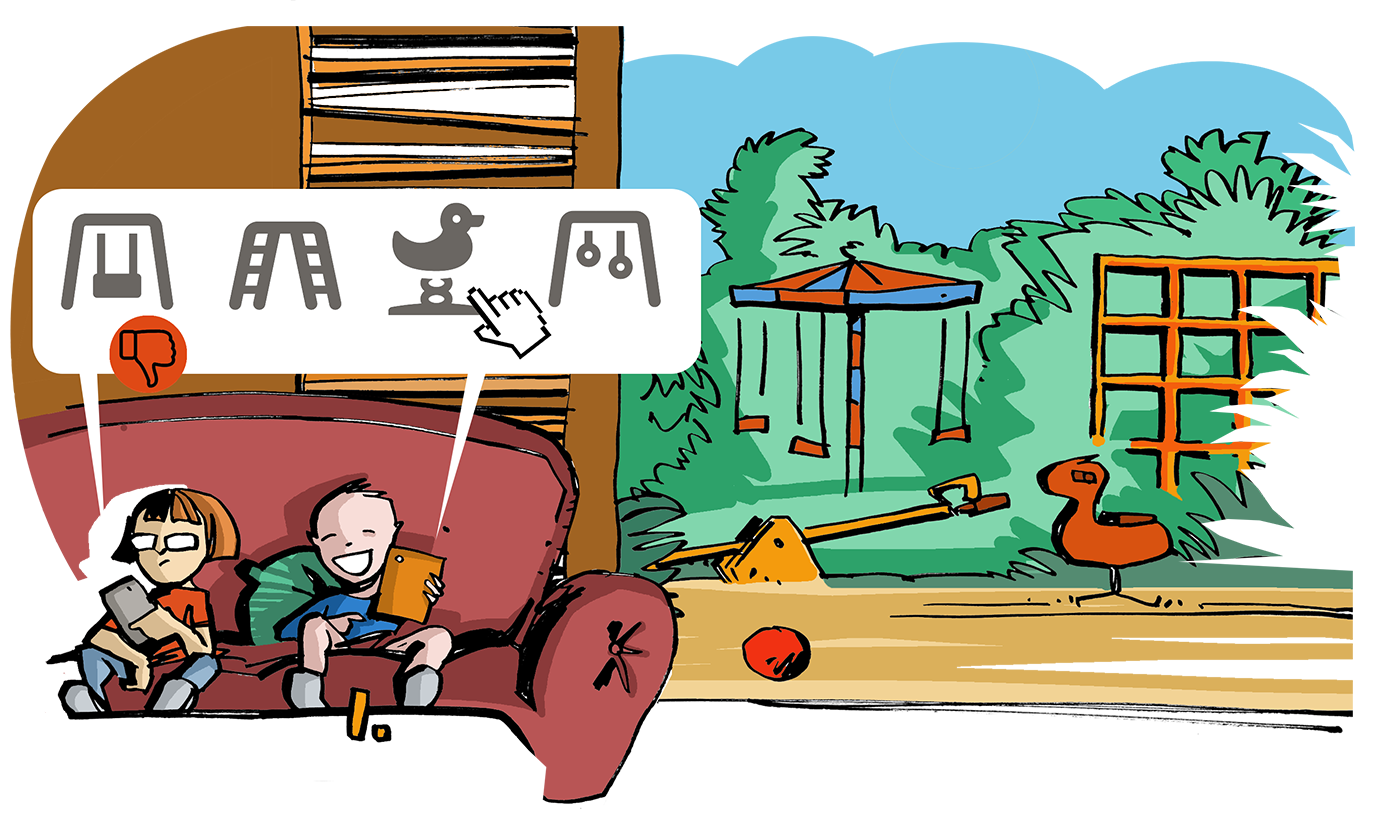

A new wave of digital protectionism is taking shape around the world — this time in the name of children’s safety.

A small but growing club of countries is seeking to ban minors from major platforms. Denmark is joining the trend by preparing to ban social media for users under 15. The government has yet to release full details, but the move reflects a growing recognition across many countries that the costs of children’s unrestricted access to social media — from mental health issues to exposure to harmful content — are no longer acceptable.

New Zealand will also introduce a bill in parliament to restrict social media access for children under 16, requiring platforms to conduct age verification before allowing use.

For inspiration, Copenhagen and Wellington do not have to look far. Australia has already outlined one of the most detailed blueprints for a nationwide ban on under-16s, set to take effect on 10 December 2025. The law requires platforms to verify users’ ages, remove underage accounts, and block re-registrations. Platforms will also need to communicate clearly with affected users, although questions remain, including whether deleted content will be restored when a user turns 16.

Despite having publicly articulated their opposition, Meta Platforms (owner of Facebook/Instagram), TikTok, and Snap Inc. announced that they will comply with the Australian law. The tech firms argue that the ban may inadvertently push youth toward less-regulated corners of the internet, and raise practical concerns over the enforcement of reliable age verification.

The Australian eSafety Commissioner has formally notified major social media platforms, including Facebook, Instagram, TikTok, Snapchat, and YouTube, that they must comply with new minimum age restrictions effective from 10 December.

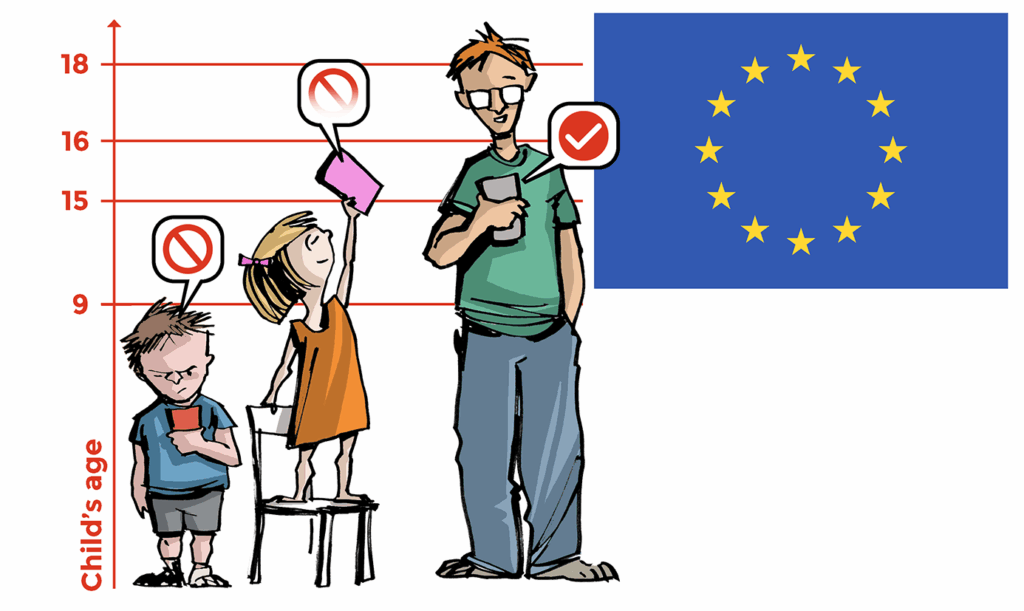

In the EU, the Danish presidency tabled the Jutland Declaration, a political push for stronger protections that puts age verification at the centre of online safety for minors. The declaration argues that platforms accessible to minors must ensure ‘a high level of privacy, safety, and security’ and that effective age verification is ‘one of the essential tools’ to curb exposure to illegal or harmful content and manipulative design. It explicitly backs EU-wide solutions tied to the European Digital Identity (EUDI) Wallet, positioned as a way to verify age with minimal data disclosure, and calls out addictive features (infinite scroll, autoplay, streaks) and risky monetisation (certain loot boxes, micro-transactions) for tighter controls. The text also stresses ‘safety-by-design’ defaults, support for parents and teachers, and meaningful participation of children in the policy process.

Two days later, ministers backed the Danish plan in part: countries rallied behind the common EU layer for age verification but resisted a single EU-wide age limit. The emerging compromise is to standardise ‘how to verify’ at the EU level while leaving ‘what age to set’ to national law, avoiding fragmentation of technical systems while respecting domestic choices. Pilots for the EU approach are expanding in several member states and align with the EUDI Wallet rollout due by the end of 2026.

However, Denmark has officially abandoned its push within the EU to mandate the scanning of private messages on encrypted platforms – a proposal often referred to as ‘Chat Control.’ Officials cited mounting political and public resistance, particularly concerns over privacy and surveillance, as the catalyst for reversal. The measure had aimed to require tech platforms to automatically detect child sexual abuse material (CSAM) in private, end-to-end encrypted communications. Instead, Denmark will now support a voluntary regime for CSAM detection, relinquishing the previous insistence on compulsory scanning.

Adding a global dimension, on 7 November, the European Commission, Australia’s eSafety Commissioner and the UK’s Ofcom committed to strengthening child online safety. The three regulators agreed specifically to establish a technical cooperation group on age-assurance solutions (methods to verify age safely), share evidence, conduct independent research, and hold platforms accountable for design and moderation decisions that affect children. While each region retains its own law-making path, the agreement signals a growing trans-national alignment around how children are protected in digital spaces.

Regulatory pressure is rising in parallel. The European Commission sent fresh DSA information requests to major platforms, including Snapchat, YouTube, Apple and Google, probing how they keep minors away from harmful content and how their age-assurance systems work. The probe follows the Commission’s final DSA guidelines on protecting minors issued in July, which spell out ‘appropriate and proportionate’ measures for services accessible to minors (Article 28(1) DSA), from default privacy safeguards to risk mitigation for recommender systems. Violations can lead to substantial fines.

Lawsuits are also picking up. In Italy, families have launched legal action against Facebook, Instagram, and TikTok, claiming that the platforms failed to protect minors from exploitative algorithms and inappropriate content.

Across the Atlantic, New York City has filed a sweeping lawsuit against major social media platforms, accusing them of deliberately designing features that addict children and harm their mental health.

A landmark trial on child safety online has been given a start date. In January 2026, the Los Angeles County Superior Court will hear a consolidated case bringing together hundreds of claims from parents and school districts against Meta, Snap, TikTok, and YouTube. Plaintiffs allege that these platforms are addictive and expose young people to mental health risks, while providing ineffective parental controls and weak safety features. Meta founder Mark Zuckerberg, Instagram head Adam Mosseri, and Snap CEO Evan Spiegel have been ordered to testify in person, despite the companies’ arguments that it would be burdensome. The judge emphasised that direct testimony from CEOs is crucial to assess whether the companies were negligent in failing to take steps to mitigate harm.

Online gaming platforms, especially those popular with children, are also being re-examined. The platform Roblox (which allows user-generated worlds and chats) has come under fire for failing to protect its youngest users. In the USA, for example, the Florida Attorney General has issued criminal subpoenas to Roblox, accusing the platform of being ‘a breeding ground for predators’. In the Netherlands, the platform is facing an investigation by child-welfare authorities into how it safeguards young users. In Iraq, the government has banned Roblox nationwide, citing ‘sexual content, blasphemy and risks of online blackmail against minors.’

Social media and gaming aren’t the only fronts. AI chatbots are now under scrutiny for how they interact with minors. In Australia, the eSafety Commissioner has sent notices to four AI chatbot companies asking them to detail their child-protection steps — specifically, how they guard against exposure to sexual or self-harm material.

Meanwhile, Meta has announced new parental-control measures: parents will be able to disable their teens’ private chats with AI characters, block specific bots, and view what topics their teens are discussing, though not full transcripts. The controls are expected to roll out early next year in the USA, UK, Canada and Australia. They follow criticism that Meta’s AI-character chatbots enabled flirtatious or age-inappropriate conversations with minors.

The big picture. For youth, the digital world is a primary space for social, emotional, and cognitive development, shaped by the content and interactions they find there. This new reality requires parents to look beyond simple screen time to understand the nature of their children’s online experiences. It also sends a clear message to tech companies about accountability and challenges regulators to create rules that are both enforceable and adaptable to rapid innovation.

Together, these developments suggest that the era of self-regulation for social media may be drawing to a close. The global debate is not about whether the digital playground needs guardians, but about the final design of its safety features. As governments weigh bans, lawsuits, and surveillance mandates, they struggle to balance two imperatives: protecting children from harm while safeguarding fundamental rights to privacy and free expression.

The global struggle to steer AI

The world is incessantly debating the future and governance of AI. Here are some of the latest moves in the space.

Regulatory moves

Italy has made history as the first member state in the EU to pass its own national AI law, going beyond the framework of the EU’s Artificial Intelligence Act. The law came into effect on 10 October, introducing sector-specific rules across health, justice, work, and public administration. Among its provisions: transparency obligations, criminal penalties for misuse of AI (such as harmful deepfakes), new oversight bodies, and protections for minors (e.g. parental consent for users under 14).

However, reports indicate that the European Commission is considering pausing or easing certain elements of the EU AI Act, the bloc’s flagship legislation designed to regulate high-risk AI systems. The potential adjustments could include delayed compliance deadlines, softer obligations on high-risk applications, and a phased approach to enforcement – likely in response to lobbying from US authorities and tech companies.

Adding another layer of regulatory complexity, the European Commission is evaluating whether ChatGPT should be classified as a Very Large Online Search Engine (VLOSE) under the Digital Services Act (DSA). This designation would bring obligations, including systemic-risk assessments, transparency reporting, independent audits, and researcher access to data, potentially creating overlapping regulatory requirements alongside the AI Act.

Meanwhile, across the Atlantic, California has enacted a bold transparency and whistleblower regime aimed at frontier AI developers – those deploying large, compute-intensive models. Under SB 53 (the Transparency in Frontier Artificial Intelligence Act), companies must publish safety protocols, monitor risks, and disclose ‘critical safety incidents.’ Crucially, employees who believe there is a catastrophic risk (even without full proof) are shielded from retaliation. California also adopted the first dedicated ‘AI chatbot safety’ law, adding disclosure and safety expectations for conversational systems.

In Asia, at the Asia-Pacific Economic Cooperation (APEC) 2025 summit, Chinese President Xi Jinping reiterated his proposal to establish a global AI body to coordinate AI development and governance internationally. This body, tentatively titled World Artificial Intelligence Cooperation Organization, is not referenced in the APEC Artificial Intelligence (AI) Initiative (2026-2030).

A push for AI sovereignty

In Brussels, the European Commission is simultaneously strategising for digital sovereignty – trying to break the EU’s dependence on foreign AI infrastructure. Its new ‘Apply AI’ strategy aims to channel €1 billion into deploying European AI platforms, integrating them into public services (health, defence, industry), and supporting local tech innovation. The Commission also launched an ‘AI in Science’ initiative to solidify Europe’s position at the forefront of AI research, through a network called RAISE.

Japan will prioritise home-grown AI technology in its new national strategy, aiming to strengthen national security and reduce dependence on foreign systems. The government says developing domestic expertise is essential to prevent overreliance on US and Chinese AI models.

AI, content, and the future of search

As AI continues to reshape how people access and interact with information, governments and tech companies are grappling with the opportunities—and dangers—of AI-driven content and search tools.

In Europe, the Dutch electoral watchdog recently warned voters against relying on AI chatbots for election information, highlighting concerns that such systems can inadvertently mislead citizens with inaccurate or biased content.

Similarly, India proposed strict new IT rules requiring deepfakes and AI-generated content to be clearly labelled, aiming to curb misuse and increase transparency in the digital ecosystem.

At the same time, search engines, the traditional gateways to online knowledge, are experiencing a wave of innovation. OpenAI has unveiled its new browser, ChatGPT Atlas, which reimagines the way users interact with the web. Atlas introduces an ‘agent mode,’ a premium feature that can access a user’s laptop, click through websites, and explore the internet on their behalf, leveraging browsing history and user queries to provide guided, explainable results. OpenAI CEO Sam Altman described it simply: ‘It’s using the internet for you.’ Two days later, Microsoft unveiled an equivalent offering: Copilot Mode in its Edge browser. The design, functions and timing were notably similar, underscoring how the major platforms are rapidly converging on this next-generation AI browser paradigm.

The bottom line. Ultimately, every new law and international proposal faces the same, almost paradoxical challenge: how to establish a rulebook for a technology that is continually rewriting its own capabilities by the month. The fundamental question is: Can any governance framework ever be agile enough?

Rare-earth respite: The battle for strategic resources marches on

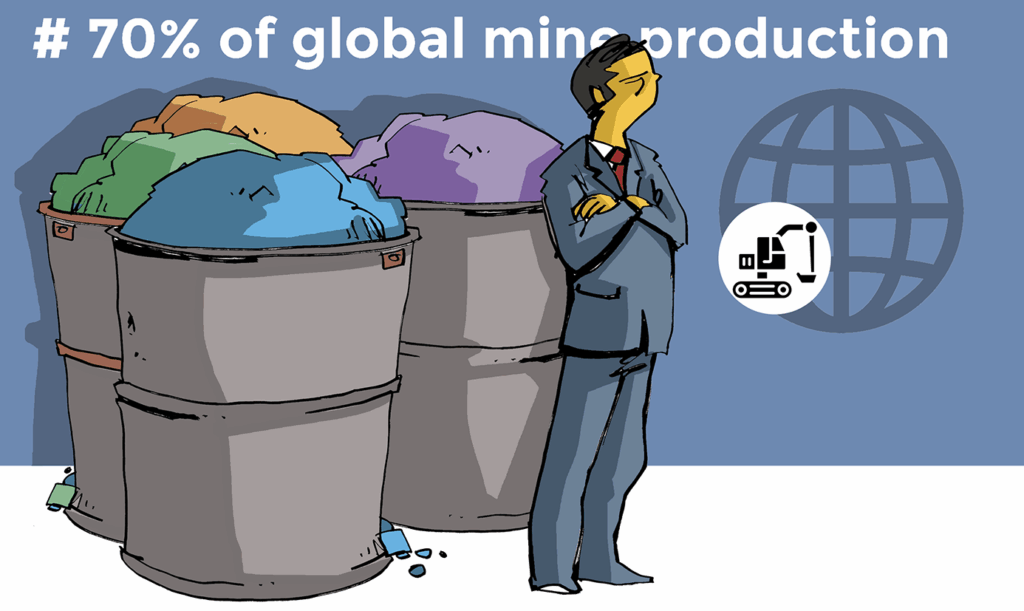

The beginning of October saw China tighten its grip on the global tech supply chain by significantly expanding restrictions on its rare earth exports. The new rules no longer focus solely on raw minerals; they now encompass processed materials, manufacturing equipment, and even the expertise used to refine and recycle rare earths.

Exporters must seek government approval not only to ship these elements, but also for any product that contains them at a level exceeding 0.1%. Licences will be denied if the end users are involved in weapons production or military applications. Semiconductors won’t be spared either — chipmakers will now face intrusive case-by-case scrutiny, with Beijing demanding full visibility into tech specifications and end users before granting approval.

China is also sealing off human expertise. Engineers and companies in China are prohibited from participating in rare earth projects abroad unless the government explicitly permits it.

The geopolitical context in which this development took place is central: with US–China tensions elevated, Beijing has been signalling its leverage, namely its dominance over the minerals powering advanced technologies.

By way of background. China currently controls up to 70% of global rare earth extraction, roughly 85% of refining, and about 90% of alloy and magnet production. That position has long served as strategic leverage, which some Chinese analysts argue remains intact despite the current deal.

In late October, Trump and Xi announced a breakthrough on rare-earth minerals. ‘All of the rare earth is settled, and that’s for the world,’ Trump said, signalling the removal of one of the last major bottlenecks in global supply chains. The meeting also paved the way for tariff reductions and the renewal of agricultural trade.

Under the agreement, China will suspend the global implementation of expansive restrictions announced on 9 October and issue general export licenses for rare earths, gallium, germanium, antimony, and graphite, ensuring continued access for US buyers and their suppliers. The move is a de facto removal of controls China has tightened since 2023 and follows Trump’s declaration that there is now ‘no roadblock at all’ on rare earth flows.

The pause offers breathing room for global industries that rely on the minerals underpinning everything from electric vehicles and smartphones to advanced defence systems and renewable energy technologies. Yet it does little to ease the structural concerns driving diversification efforts.

In Europe, the European Commission is preparing a plan, potentially ready within weeks, to reduce reliance on Chinese critical minerals. Nearly all of Europe’s supply of 17 rare earth minerals currently comes from China, a dependence that Brussels views as unsustainable despite Beijing’s temporary concessions. The emerging EU strategy is expected to mirror the REPowerEU energy diversification blueprint, with a focus on recycling, stockpiling, and developing alternative supply routes.

The EU, however, is signalling frustration at mixed signals from Washington and Beijing on the rare-earth front. Brussels remains uncertain about how far China’s newly stated concessions will apply to European firms, and observers warn that the bloc lacks near-term leverage to sway Beijing’s policy. This uncertainty underscores Europe’s tricky balancing act: pushing to diversify its suppliers while remaining dependent on Chinese supplies.

Similar momentum is building among G7 governments. Meeting in Toronto, G7 energy ministers agreed to establish a critical minerals production alliance, backed by more than two dozen new investments and partnerships. The group – which consists of Canada, France, Germany, Italy, Japan, the UK and the USA – also agreed to channel up to C$20.2 million into international collaboration in research and development of the commodities. US Energy Secretary Chris Wright framed the G7 alignment as a commitment to ‘establish our own ability to mine, process, refine and create the products’. These moves ‘send the world a very clear message’, Canadian Energy Minister Tim Hodgson said, adding ‘We are serious about reducing market concentration and dependencies’.

Beyond the US–China–Europe triangle, Moscow has also entered the race. Russian President Vladimir Putin has ordered the government to draw up, by 1 December, a roadmap for increasing Russia’s rare-earth mineral production and strengthening transportation links with China and North Korea. Russia claims reserves of hundreds of millions of metric tonnes of critical minerals but currently supplies only a small fraction of global demand – a gap it now seeks to close.

In Southeast Asia, Malaysia is charting its own course. The government confirmed it will maintain its ban on raw rare-earth exports despite the US–China deal, signalling continued emphasis on domestic value-added processing. Meanwhile, Taipei has downplayed the impact of Beijing’s rare-earth curbs, saying Taiwan can source most of its needs elsewhere.

What’s next? The truce on export controls may calm markets in the short term. However, with the G7 forging new alliances, Europe accelerating its diversification, and Beijing determined to keep its control over rare earths as strategic leverage, the global contest over critical minerals and supply chain resilience is only deepening.

When the cloud falters: What the AWS and Microsoft outages reveal about cloud resilience

In the span of just ten days, two of the world’s largest cloud service providers — Amazon Web Services (AWS) and Microsoft Azure — suffered major outages that rippled across the globe, underscoring just how fragile the digital backbone of modern life has become.

For a few hours on Monday, 20 October, much of the internet flickered out of reach. What began as an issue in a single AWS region, US-East-1 in northern Virginia, quickly cascaded into a global failure. A seemingly minor Domain Name System (DNS) configuration error triggered a chain reaction, knocking out the systems that handle traffic between servers.

The root cause was identified as a failure in the DNS resolution for internal endpoints, particularly affecting DynamoDB API endpoints. (Sidenote: DynamoDB is a cloud database that stores information for apps and websites, while API endpoints are the access points where these services read or write that data. During the AWS outage, these endpoints stopped working properly, preventing apps from retrieving or saving information.)
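To make this failure mode concrete, here is a minimal Python sketch of what an application sees when the hostname of an API endpoint stops resolving. It is an illustration only, not AWS’s tooling: the function name resolve_endpoint and its resolver parameter are assumptions introduced here so that the lookup can be swapped out for testing.

```python
import socket


def resolve_endpoint(hostname, port=443, resolver=socket.getaddrinfo):
    """Return the IP addresses behind a hostname, or [] if DNS resolution fails.

    `resolver` is a hypothetical hook (defaulting to the standard library
    lookup) so the function can be exercised without network access.
    """
    try:
        records = resolver(hostname, port, proto=socket.IPPROTO_TCP)
    except socket.gaierror:
        # The name could not be resolved. The service behind it may be
        # perfectly healthy, but clients have no address to send requests to.
        return []
    # Each record is (family, type, proto, canonname, sockaddr);
    # sockaddr[0] is the IP address.
    return sorted({record[4][0] for record in records})
```

An empty result is the application-level symptom described above: the database may still be running, but apps that look it up by name cannot reach it to read or write data.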

Because so many websites, apps and services — from social media platforms and games to banking and government portals — rely on AWS, the outage affected homes, offices, and governments worldwide.

AWS engineers rushed to isolate the fault, eventually restoring normal operations by late afternoon. But the damage had already been done. The outage exposed a fragile truth about today’s digital world: when one provider falters, much of the internet stumbles with it. An estimated one-third of the world’s online services run on AWS infrastructure.

On 29 October 2025, a widespread outage of Microsoft’s cloud services disrupted major websites and services worldwide, including Heathrow Airport, NatWest, Asda, M&S, Starbucks, and Minecraft. The problem, which was also traced to a DNS configuration issue, this time in Azure, lasted several hours before services gradually returned to normal.

According to tracking data from Downdetector, thousands of reports from multiple countries surfaced as websites and online services failed for several hours. Microsoft said the root cause lay with its Azure cloud‑computing platform, attributing the degradation to DNS issues and an inadvertent configuration change.

Taken together, the two outages highlight several systemic risks in today’s digital infrastructure.

First, the outages underscored global dependence on a small number of hyperscale cloud providers. This dependence creates a single point of failure: a fault within one provider can disrupt thousands of services that rely on it.

Second, the root cause in both cases, a DNS error, revealed how a single component in a complex system can be the catalyst for massive disruption.

Third, although cloud providers offer multiple ‘availability zones’ for redundancy, the AWS outage demonstrated that a fault in one critical region can still have worldwide consequences.

The lesson here is clear: as cloud computing becomes ever more central to the digital economy, resilience and diversification cannot be afterthoughts. Yet few true alternatives exist at AWS’s and Azure’s scale beyond Google Cloud, with smaller rivals from IBM to Alibaba, and fledgling European players, far behind.
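What diversification can look like at the application level is sketched below in Python: a client keeps an ordered list of endpoints hosted in different regions or with different providers and uses the first one that passes a health check. This is a simplified illustration under stated assumptions, not a production pattern; the names first_healthy and tcp_check, and the example hostnames in the note that follows, are hypothetical.

```python
import socket


def tcp_check(endpoint, timeout=2.0):
    """Health check: can a TCP connection to (host, port) be opened?"""
    host, port = endpoint
    with socket.create_connection((host, port), timeout=timeout):
        return True


def first_healthy(endpoints, is_healthy=tcp_check):
    """Return the first endpoint that passes the health check, or None."""
    for endpoint in endpoints:
        try:
            if is_healthy(endpoint):
                return endpoint
        except OSError:
            # DNS failures (socket.gaierror) and connection errors are both
            # OSError subclasses: treat them as "unhealthy" and move on.
            continue
    return None
```

A caller might pass a list such as [("primary.example.com", 443), ("backup.example.net", 443)], with the second entry hosted by a different provider. The sketch only covers the client side: failover helps only if the backup does not itself depend on the same region or provider, which is the harder part of the problem described above.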

Promise and peril: The world signs on to the UN’s new cybercrime treaty in Hanoi

The first global treaty aimed at preventing and responding to cybercrime, the UN Convention against Cybercrime, was opened for signature in a high-level ceremony co-hosted by the Government of Viet Nam and the UN Secretariat on 25 and 26 October.

At the close of the two-day signing window, 72 states had signed the convention in Hanoi — a strong initial show of support that underlines the perceived need for better cross-border cooperation on issues ranging from ransomware and online fraud to child sexual exploitation and trafficking. The convention establishes a framework for harmonising criminal law, standardising investigative powers, and expediting mutual legal assistance and the exchange of electronic evidence.

But the ceremony was not without controversy. In the run-up to the signing, human rights groups and civil society coalitions publicly warned that vague definitions of cybercrime and expansive investigative powers could enable governments to justify surveillance or suppress legitimate online activities, such as journalism, activism, or cybersecurity research. Without precise legal limits and independent oversight, measures like data interception, compelled decryption, or real-time monitoring could erode privacy and freedom of expression.

Major tech companies and several rights organisations urged stronger, clearer safeguards and precise drafting to prevent misuse. UNODC, the UN office that led the negotiations, responded by pointing to built-in safeguards and to provisions meant to support capacity building for lower-resourced states.

In practice, the signing is only the procedural first step: the treaty will enter into force 90 days after 40 states have ratified it under their domestic procedures, a requirement that will determine how quickly the Convention becomes operational.

Observers following the aftermath focus on three immediate questions: which signatories will move quickly to ratify, how individual countries will transpose Convention obligations into national law (and whether they will embed robust human rights safeguards), and what mechanisms will govern technical cooperation and cross-border evidence sharing in practice.

The Hanoi signing marks a milestone: it creates a legal anchor for deeper international cooperation on cybercrime — provided implementation respects fundamental rights and rule-of-law principles.

Last month in Geneva: Developments, events and takeaways

The digital governance scene was busy in Geneva in October. Here’s what we followed.

Geneva Peace Week 2025

The 2025 edition of Geneva Peace Week brought together peacebuilders, policymakers, academics, and civil society to discuss and advance peacebuilding initiatives. The programme covered a wide range of topics, including conflict prevention, humanitarian response, environmental peacebuilding, and social cohesion. Sessions this year explored new technologies, cybersecurity, and AI, including AI-fuelled polarisation, AI for decision-making in fragile contexts, responsible AI use in peacebuilding, and digital approaches to supporting the voluntary and dignified return of displaced communities.

GESDA 2025 Summit

The GESDA 2025 Summit brought together scientists, diplomats, policymakers, and thought leaders to explore the intersection of science, technology, and diplomacy. Held at CERN in Geneva with hybrid participation, the three-day programme featured sessions on emerging scientific breakthroughs, dual-use technologies, and equitable access to innovation. Participants engaged in interactive discussions, workshops, and demonstrations to examine how frontier science can inform global decision-making, support diplomacy, and address challenges such as climate change and sustainable development.

151st Assembly of the IPU

The 151st Assembly of the IPU took place in Geneva, Switzerland, from 19 to 23 October 2025. Under the theme ‘Upholding humanitarian norms and supporting humanitarian action in times of crisis’, the Assembly held its general debate and reviewed progress on previous resolutions.

Discussions also addressed the protection of victims of illegal international adoption, considered amendments to the IPU Statutes and Rules, and set priorities for upcoming committee work. The meeting provided a platform for dialogue on humanitarian, democratic, and governance issues among national parliaments.

16th Session of the UN Conference on Trade and Development (UNCTAD16)

The 16th UN Conference on Trade and Development (UNCTAD16) took place from 20 to 23 October 2025 in Geneva, bringing together world leaders, ministers, and experts from 170 countries under the theme ‘Shaping the future: Driving economic transformation for equitable, inclusive and sustainable development’.

Over four days and 40 high-level sessions, delegates discussed trade, investment, technology, and sustainability, setting UNCTAD’s direction for the next four years.

Digital transformation was a prominent feature throughout the conference. Delegates highlighted that while technological progress opens new opportunities, it also risks deepening inequalities.

The event concluded with the adoption of the Geneva Consensus for a Just and Sustainable Economic Order. The agreement reaffirmed commitments to fairer and more inclusive global development, placing a strong emphasis on narrowing digital divides.

The Geneva Consensus calls on UNCTAD to help developing countries strengthen digital infrastructure, build skills, and create policy frameworks to harness the digital economy.

Concrete initiatives were announced: Switzerland pledged CHF 4 million to support UNCTAD’s e-commerce and digital economy work, while a new partnership with the Digital Cooperation Organization will advance digital data measurement, women’s participation, and SME support.

ICRC and Geneva Academy publish joint report on civilian involvement in cyber activities during conflicts

The International Committee of the Red Cross (ICRC) and the Geneva Academy of International Humanitarian Law and Human Rights have jointly released a report examining how international humanitarian law (IHL) applies to civilian participation in cyber and other digital activities during armed conflicts. The report is based on extensive global research and expert consultations conducted within the framework of their initiative.

The publication addresses key legal issues, including the protection of civilians and technology companies during armed conflict, and the circumstances under which such protections may be at risk. It further analyses the IHL obligations of civilians, such as individuals engaging in hacking, when directly involved in hostilities, as well as the responsibilities of states to safeguard civilians and civilian infrastructure and to ensure compliance with IHL by populations under their control.

The ICRC and Geneva Academy report also offers practical recommendations for governments, technology companies, and humanitarian organisations aimed at limiting civilian involvement in hostilities, minimising harm, and supporting adherence to international humanitarian law.