Human rights impact assessments throughout AI lifecycle

9 Dec 2021 08:30h - 10:00h

Event report

Human rights impact assessments are a mechanism for identifying and mitigating risks to the rights of people affected by data processing. The European General Data Protection Regulation (GDPR) requires that data protection impact assessments be undertaken prior to high-risk data processing. The application of artificial intelligence (AI) poses varied risks to human rights. For example, some machine learning systems have exhibited bias owing to the quality of the data they were trained on. AI is also being used to make decisions that have legal effect, for example in visa processing, credit scoring, and the allocation of resources by governments. This panel, which brought together experts versed in developments in the European Union, discussed human rights impact assessments as a mechanism for governing AI. The session considered emerging AI governance frameworks such as the OECD principles, the GDPR, the EU AI Act, the work of the Ad hoc Committee on Artificial Intelligence (CAHAI) of the Council of Europe, and the UN Guiding Principles on Business and Human Rights.

The speakers noted that countries that have adopted AI principles are moving towards AI practice, hence the need for impact assessments. Drawing on Europe's experience, Mr Kristian Bartholin (Secretary to the CAHAI, the Council of Europe) recalled that the region had moved from privacy impact assessments prior to the GDPR, to data protection impact assessments under the GDPR, and now to human rights impact assessments in the age of AI. The broadening of impact assessments to include all human rights stemmed from the realisation that data protection impact assessments were limited to personal data. AI processes both personal and non-personal data at a scale that can still affect fundamental human rights, such as the right to non-discrimination. It was, therefore, noted that human rights impact assessments were not the exclusive preserve of lawyers, but required domain expertise from technologists, migration experts, and other professionals with knowledge of the subject matter where AI was applied.

From an industry perspective, Ms Cornelia Kutterer (Senior Director, Microsoft) spoke of the dynamics of applying principles, such as those in the EU approach, in a business setting. She explained that even prior to the EU laws, companies were developing their own standards on responsible AI. Besides companies, organisations such as UNESCO were involved in setting norms on ethical AI.

However, as was witnessed a few months ago, the emerging norms were already facing difficulties. Access Now, together with other civil society organisations, was contesting the deployment of biometric systems until laws were in place. Mr Daniel Leufer (Policy Analyst, Access Now) explained that the current approach to human rights impact assessments permitted AI that was adverse to human rights. He, therefore, called for further prescription of what constituted permissible AI, stating, ‘Our approach is related to the interplay between the system, human beings, and their rights and obligations under international law.’

A key takeaway from the discussion was a shift from thinking about AI in terms of technology alone to thinking about it also in terms of the humans whose lives are affected by AI decisions. Any AI governance mechanism should, therefore, take into consideration not only the economic impact but also, and more importantly, the impact on fundamental human rights.

By Grace Mutung’u

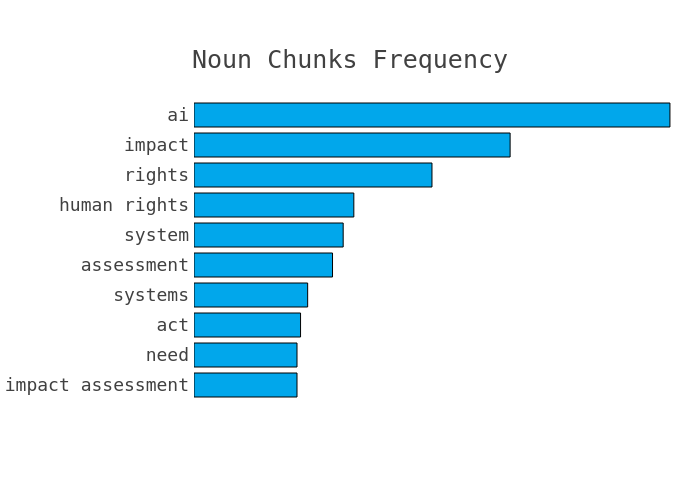

Session in numbers and graphs

Automated summary

Diplo’s AI Lab experiments with automated summaries generated from the IGF sessions. They will complement our traditional reporting. Please let us know if you would like to learn more about this experiment at ai@diplomacy.edu. The automated summary of this session can be found at this link.

Related event

Internet Governance Forum (IGF) 2021

6 Dec 2021 10:00h - 10 Dec 2021 18:00h

Katowice, Poland and Online